- Speech-to-Text Gen AI Use Case: Transforming Voice Data into Actionable Insights

Business Challenge/Problem Statement

- Inefficient Data Analysis: Manual transcription and analysis of voice data are slow, preventing timely insights into customer sentiment, operational inefficiencies, or market trends.

- Suboptimal Customer Service: Inability to quickly analyze customer calls for common issues, agent performance, or compliance risks leads to missed opportunities for service improvement and personalized support.

- Limited Accessibility and Searchability: Audio and video content without accurate transcripts is inaccessible to hearing-impaired individuals and difficult to search, hindering content discovery and utilization.

- Compliance and Regulatory Risks: In regulated industries, accurate and comprehensive records of voice communications are crucial for compliance. Manual processes or inaccurate speech-to-text (STT) transcription can leave gaps in those records and create regulatory risk.

- High Operational Costs: Relying on human transcribers for large volumes of audio data is expensive and does not scale efficiently with growing business needs.

Scope of Project

- High-Accuracy Transcription: Developing and training generative AI models to achieve state-of-the-art accuracy in transcribing spoken language, even in challenging conditions such as background noise, diverse accents, and rapid speech.

- Speaker Diarization: Implementing capabilities to accurately identify and separate individual speakers in a conversation, providing clear attribution for each transcribed segment.

- Natural Language Understanding (NLU) Integration: Integrating NLU capabilities to extract deeper meaning from transcribed text, including sentiment analysis, entity recognition, topic detection, and keyword extraction.

- Real-time and Batch Processing: Supporting both real-time transcription for live interactions (e.g., customer calls, virtual meetings) and efficient batch processing for large volumes of pre-recorded audio.

- Multi-language and Dialect Support: Expanding the system’s capabilities to accurately transcribe and understand multiple languages and regional dialects, ensuring global applicability.

- Customizable Acoustic and Language Models: Providing tools for clients to fine-tune acoustic models with their specific audio data and language models with industry-specific terminology, significantly improving accuracy for specialized use cases.

- API and SDK Development: Offering a comprehensive set of APIs and SDKs for seamless integration into existing enterprise applications, communication platforms, and data analytics tools.

- Scalability and Security: Designing the solution for high scalability to handle massive volumes of audio data and ensuring robust security measures to protect sensitive voice data and transcribed information.

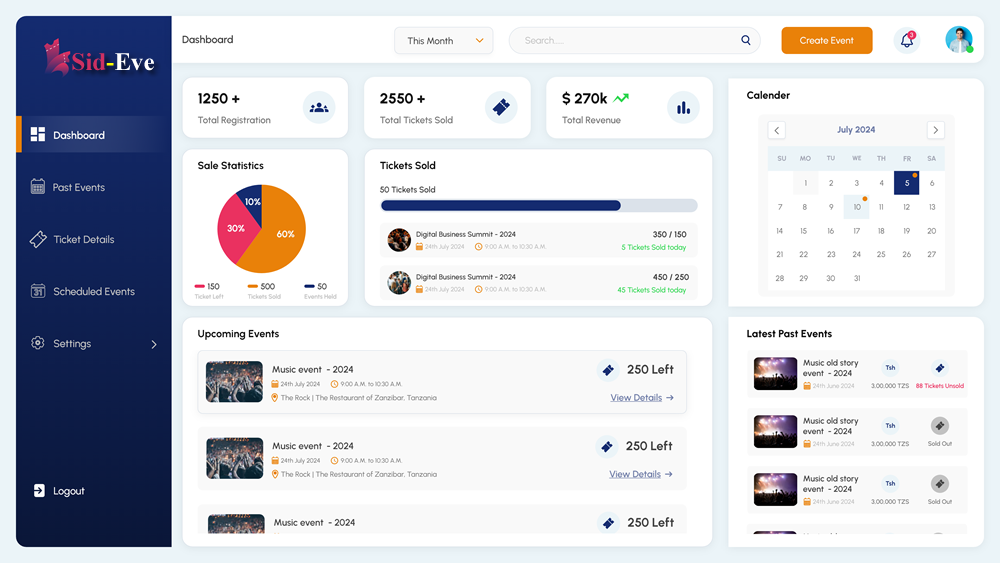

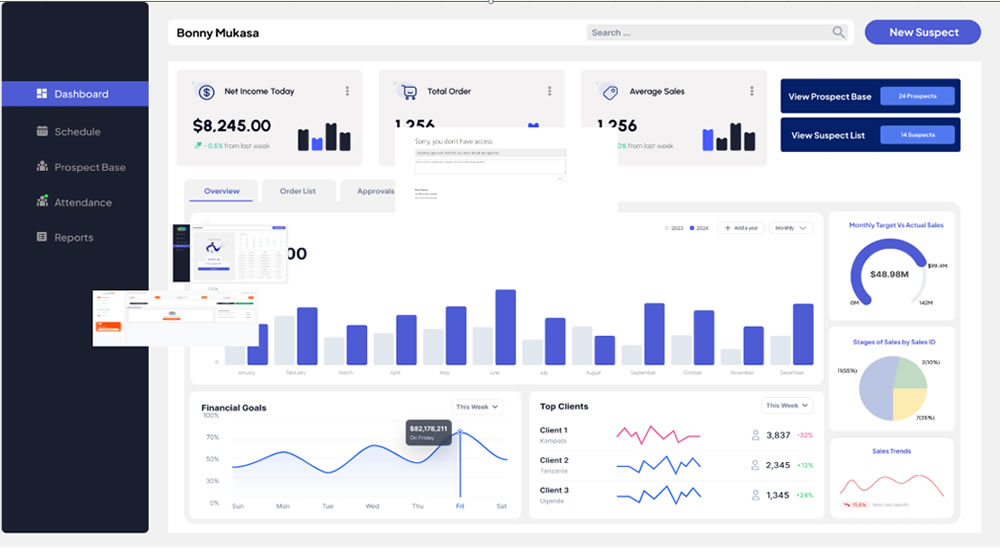

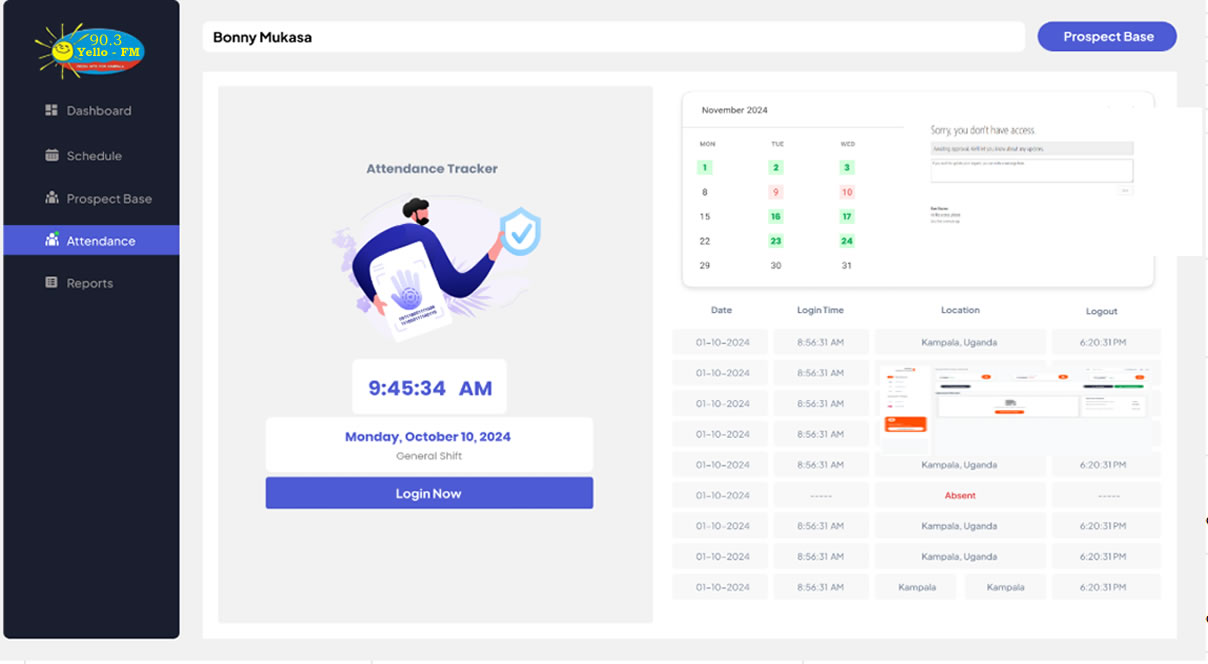

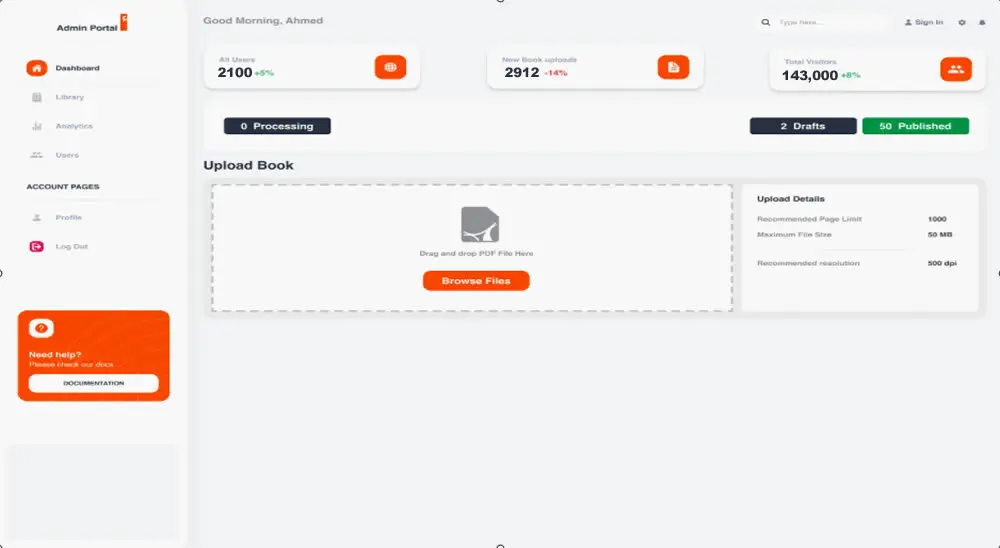

- User Interface for Management and Analytics: Developing an intuitive user interface for managing transcription jobs, reviewing transcripts, and visualizing extracted insights and analytics.
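Transcription accuracy targets like those in this scope are usually tracked with word error rate (WER). WER is the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the system output, divided by the number of reference words. The sketch below is a minimal illustration that assumes naive lowercasing and whitespace tokenization. Real evaluations normally normalize punctuation and numerals first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] = edit distance between the reference words processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j - 1] + (ref_word != hyp_word),  # match or substitution
                prev[j] + 1,                           # deletion (word missed)
                curr[j - 1] + 1,                       # insertion (extra word)
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    reference = "please reset my account password"
    hypothesis = "please reset my count password now"
    # one substitution (account -> count) + one insertion (now) over 5 words
    print(f"WER = {word_error_rate(reference, hypothesis):.2f}")  # WER = 0.40
```

Lower is better. Tracking WER separately for noisy, accented, or domain-specific test sets is a common way to verify the effect of the custom acoustic and language models described above.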

Solution We Provided

- Superior Transcription Accuracy: Leveraging cutting-edge deep learning models, our STT engine delivers industry-leading accuracy, even in challenging audio environments. It excels at transcribing diverse accents, handling overlapping speech, and filtering out background noise, ensuring reliable conversion of spoken words into text.

- Intelligent Speaker Diarization: Our solution precisely identifies and separates individual speakers within a conversation, providing clear attribution for each segment of the transcript. This is crucial for understanding conversational flow, analyzing individual contributions, and improving the readability of multi-party dialogues.

- Advanced Natural Language Understanding (NLU): Beyond mere transcription, our system integrates powerful NLU capabilities. It automatically performs sentiment analysis to gauge emotional tone, extracts key entities (e.g., names, dates, products), identifies prevalent topics, and highlights critical keywords. This transforms raw text into structured, searchable, and insightful data.

- Flexible Processing Modes: We offer both real-time STT for immediate applications like live call transcription, virtual assistant interactions, and meeting minutes, as well as high-throughput batch processing for large archives of pre-recorded audio. This flexibility caters to diverse operational needs and workflows.

- Extensive Language and Dialect Support: Our models are trained on vast datasets covering numerous languages and their regional dialects, ensuring comprehensive global coverage and accurate transcription for a diverse user base. This enables businesses to serve international markets effectively.

- Customizable Models for Enhanced Performance: Clients can significantly improve transcription accuracy for their specific domain by fine-tuning our acoustic models with their proprietary audio data and adapting language models with industry-specific jargon, product names, and acronyms. This customization ensures optimal performance for specialized use cases like medical dictation or legal proceedings.

- Developer-Friendly API and SDKs: Our solution provides a robust, well-documented API and comprehensive SDKs (Software Development Kits) for seamless integration into existing applications. This allows developers to easily embed STT capabilities into CRM systems, communication platforms, analytics dashboards, and custom business applications.

- Scalable, Secure, and Compliant Architecture: Built on a cloud-native, microservices architecture, our solution is designed for massive scalability, capable of processing petabytes of audio data. We adhere to stringent security protocols and compliance standards (e.g., GDPR, HIPAA) to protect sensitive voice data and ensure data privacy.

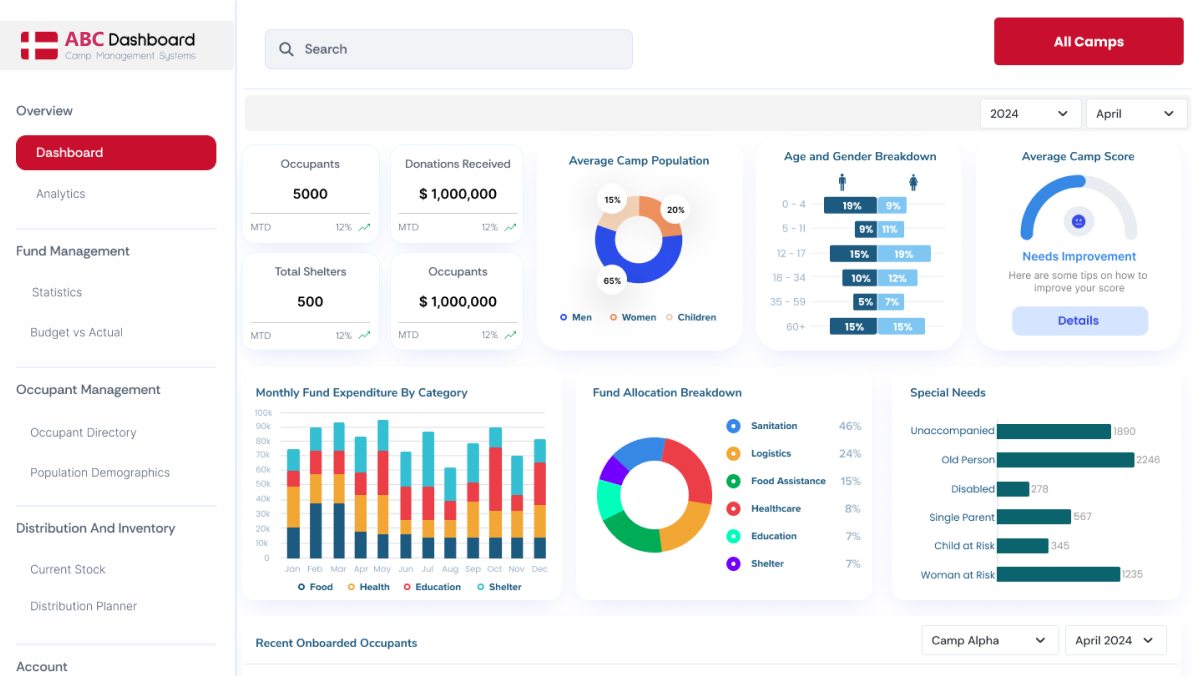

- Intuitive Analytics Dashboard: A user-friendly web interface provides tools for managing transcription jobs, reviewing and editing transcripts, and visualizing NLU-derived insights through interactive dashboards. This empowers users to quickly gain actionable intelligence from their voice data.
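Speaker-attributed transcripts are typically built by combining two outputs: word timestamps from the recognizer and speaker turns from the diarization model. The sketch below assumes both are already available. The `Word` and `Turn` types are hypothetical, not part of any particular SDK. It assigns each word to the speaker whose turn overlaps it most, then merges consecutive words from the same speaker into segments.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float


@dataclass
class Turn:
    speaker: str
    start: float
    end: float


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length of the intersection of two time intervals (0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def attribute_words(words: List[Word], turns: List[Turn]) -> List[Tuple[str, str]]:
    """Return (speaker, text) segments, merging consecutive words per speaker."""
    segments: List[Tuple[str, List[str]]] = []
    for word in words:
        best = max(turns, key=lambda t: overlap(word.start, word.end, t.start, t.end),
                   default=None)
        if best is None or overlap(word.start, word.end, best.start, best.end) == 0.0:
            speaker = "UNKNOWN"  # word falls outside every diarized turn
        else:
            speaker = best.speaker
        if segments and segments[-1][0] == speaker:
            segments[-1][1].append(word.text)
        else:
            segments.append((speaker, [word.text]))
    return [(speaker, " ".join(tokens)) for speaker, tokens in segments]


if __name__ == "__main__":
    words = [Word("hi", 0.0, 0.4), Word("there", 0.4, 0.8),
             Word("hello", 1.0, 1.5), Word("thanks", 2.1, 2.6)]
    turns = [Turn("SPEAKER_1", 0.0, 0.9), Turn("SPEAKER_2", 0.9, 2.0),
             Turn("SPEAKER_1", 2.0, 3.0)]
    for speaker, text in attribute_words(words, turns):
        print(f"{speaker}: {text}")
```

Maximum overlap is a simple, predictable rule. Overlapping speech, which the solution claims to handle, would need a word to be allowed to carry more than one speaker label.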

Technical Architecture

- Machine Learning Frameworks:

- TensorFlow/PyTorch: Utilized for building and training advanced deep neural networks, including recurrent neural networks (RNNs), convolutional neural networks (CNNs), and Transformer models, which are essential for high-accuracy acoustic modeling and language understanding in STT systems.

- Cloud Infrastructure:

- Google Cloud Platform (GCP)/Amazon Web Services (AWS)/Microsoft Azure: Leveraging cloud-agnostic principles, the solution can be deployed on leading cloud providers. This provides access to scalable compute resources (GPUs/TPUs), object storage (e.g., S3, GCS), and managed services for databases and message queues, ensuring high availability, global reach, and elastic scalability.

- Programming Languages:

- Python: The primary language for AI/ML development, data processing, and backend services, chosen for its rich ecosystem of libraries (e.g., NumPy, pandas, scikit-learn) and frameworks for machine learning.

- Go/Java (for High-Performance Microservices): Used for building high-performance, low-latency microservices and API gateways that handle real-time audio streaming, transcription requests, and data orchestration.

- Database and Storage:

- NoSQL Databases (e.g., Cassandra, DynamoDB): For storing large volumes of unstructured and semi-structured data, such as audio metadata, transcription logs, and NLU-extracted insights, offering high scalability and flexibility.

- Object Storage (e.g., AWS S3, Google Cloud Storage): For efficient and cost-effective storage of raw audio files, processed audio, and large datasets used for model training.

- Containerization and Orchestration:

- Docker: For packaging the STT application and its dependencies into lightweight, portable containers, ensuring consistent deployment across development, testing, and production environments.

- Kubernetes: For orchestrating containerized applications, automating deployment, scaling, and management of the STT services, ensuring high availability and fault tolerance.

- API Management and Communication:

- RESTful APIs/gRPC: Providing secure, high-performance interfaces for client applications to interact with the STT engine, supporting both synchronous and asynchronous communication patterns.

- Kafka/RabbitMQ: For building robust, scalable message queues to handle real-time audio streams and asynchronous processing of large audio batches.

- Version Control and CI/CD:

- Git/GitHub/GitLab: For collaborative development, version control, and managing code repositories.

- Jenkins/GitHub Actions/GitLab CI/CD: For automated testing, continuous integration, and continuous deployment pipelines, ensuring rapid and reliable delivery of updates and new features.

- Monitoring and Logging:

- Prometheus/Grafana: For real-time monitoring of system performance, resource utilization, and service health, providing dashboards for operational insights.

- ELK Stack (Elasticsearch, Logstash, Kibana): For centralized logging, analysis, and visualization of system logs, aiding in troubleshooting, performance optimization, and security auditing.
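For real-time transcription, packets from a queue such as Kafka or RabbitMQ arrive in arbitrary sizes. A common approach is to re-slice them into short, slightly overlapping windows, so each window can be recognized as soon as it is complete and words that straddle a boundary are not lost. The sketch below assumes 16 kHz, 16-bit mono PCM and uses an illustrative 1-second window with 200 ms overlap. These parameters are assumptions, not values from the described system.

```python
from typing import Iterable, Iterator

SAMPLE_RATE_HZ = 16_000   # assumption: 16 kHz mono audio
BYTES_PER_SAMPLE = 2      # assumption: 16-bit signed PCM


def ms_to_bytes(ms: int) -> int:
    """Number of PCM bytes covering `ms` milliseconds of audio."""
    return SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * ms // 1000


def window_stream(packets: Iterable[bytes], window_ms: int = 1000,
                  overlap_ms: int = 200) -> Iterator[bytes]:
    """Re-slice arbitrarily sized packets into fixed, overlapping windows."""
    window = ms_to_bytes(window_ms)
    overlap = ms_to_bytes(overlap_ms)
    step = window - overlap
    if step <= 0:
        raise ValueError("overlap must be shorter than the window")
    buffer = bytearray()
    emitted = False
    for packet in packets:
        buffer.extend(packet)
        while len(buffer) >= window:
            yield bytes(buffer[:window])  # hand this window to the recognizer
            emitted = True
            del buffer[:step]             # keep the overlap for the next window
    # Flush the final partial window only if it holds audio not yet emitted.
    if len(buffer) > (overlap if emitted else 0):
        yield bytes(buffer)
```

Because consecutive windows share audio, a downstream step has to de-duplicate words recognized twice in the overlap region, for example by comparing word timestamps.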

- Text-to-SQL Gen AI Use Case: Empowering Business Users with Natural Language Database Access

Business Challenge/Problem Statement

- Delayed Insights: Business users who cannot write SQL depend on technical teams to retrieve data. This creates a backlog of data requests, delays access to critical information, and slows down decision-making.

- Limited Self-Service Analytics: Business users are unable to independently explore data, ask follow-up questions, or conduct iterative analysis, hindering agile business intelligence.

- Increased Workload for Technical Teams: IT and data teams are overwhelmed with routine data extraction tasks, diverting their focus from more strategic initiatives like data infrastructure development or advanced analytics.

- Underutilized Data Assets: The inability of non-technical users to directly interact with databases means that valuable data often remains untapped, limiting its potential to drive business value.

- Miscommunication and Misinterpretation: Translating business questions into technical SQL queries can lead to misunderstandings, resulting in incorrect data retrieval or irrelevant insights.

There is a critical need for a solution that democratizes data access, allowing non-technical users to query databases using natural language, thereby empowering them to gain immediate insights and make data-driven decisions without relying on intermediaries.

Scope of Project

This project aims to develop and implement a generative AI-powered Text-to-SQL system that enables users to query relational databases using natural language. The scope includes:

- Natural Language Understanding (NLU) for Query Interpretation: Developing advanced NLU models capable of accurately interpreting complex natural language questions, understanding user intent, and identifying relevant entities and relationships within the database schema.

- SQL Query Generation: Building a robust generative AI engine that translates interpreted natural language queries into syntactically correct and semantically accurate SQL queries, optimized for various database systems (e.g., MySQL, PostgreSQL, SQL Server, Oracle).

- Schema Linking and Metadata Management: Implementing mechanisms to automatically understand and link natural language terms to the underlying database schema (tables, columns, relationships) and manage metadata effectively to improve query generation accuracy.

- Contextual Understanding and Conversation History: Incorporating capabilities to maintain conversational context, allowing users to ask follow-up questions and refine queries iteratively without re-stating the entire request.

- Error Handling and Feedback Mechanism: Designing a system that can identify ambiguous or unanswerable queries, provide intelligent feedback to the user, and suggest clarifications or alternative phrasing.

- Security and Access Control: Ensuring that the Text-to-SQL solution adheres to strict security protocols, including user authentication, authorization, and data access policies, to prevent unauthorized data exposure.

- Integration with Existing Data Infrastructure: Providing flexible APIs and connectors for seamless integration with various enterprise data sources, business intelligence tools, and data visualization platforms.

- Performance Optimization: Optimizing the query generation process for speed and efficiency, ensuring that insights are delivered in near real-time, even for complex queries on large datasets.

- User Interface Development: Creating an intuitive and user-friendly interface (e.g., web application, chatbot integration) that facilitates natural language interaction with the database and presents query results clearly.
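One way to enforce data access policies on model-generated SQL is to let the database itself refuse disallowed operations at prepare time, rather than inspecting the SQL string. The sketch below illustrates the idea with Python's built-in `sqlite3` module and its `Connection.set_authorizer` hook. The table and function allow-lists are hypothetical. On a production RDBMS such as PostgreSQL, the same goal is usually met by executing under a read-only role limited by `GRANT SELECT`.

```python
import sqlite3

# Hypothetical policy for one user: readable tables and callable SQL functions.
# In practice these would come from the enterprise access-control system.
ALLOWED_TABLES = {"sales"}
ALLOWED_FUNCTIONS = {"sum", "count", "avg", "min", "max", "round"}


def read_only_authorizer(action, arg1, arg2, db_name, source):
    """SQLite authorizer callback: allow plain SELECTs on permitted tables only."""
    if action == sqlite3.SQLITE_SELECT:
        return sqlite3.SQLITE_OK
    if action == sqlite3.SQLITE_READ:
        # arg1 is the table name, arg2 the column name
        return sqlite3.SQLITE_OK if (arg1 or "").lower() in ALLOWED_TABLES else sqlite3.SQLITE_DENY
    if action == sqlite3.SQLITE_FUNCTION:
        # arg2 is the function name
        return sqlite3.SQLITE_OK if (arg2 or "").lower() in ALLOWED_FUNCTIONS else sqlite3.SQLITE_DENY
    # INSERT, UPDATE, DELETE, DROP, PRAGMA, ATTACH, ... are all refused.
    return sqlite3.SQLITE_DENY


def run_generated_sql(conn: sqlite3.Connection, sql: str) -> list:
    """Execute model-generated SQL; policy violations raise sqlite3.DatabaseError."""
    conn.set_authorizer(read_only_authorizer)
    return conn.execute(sql).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:", isolation_level=None)  # autocommit for setup
    conn.execute("CREATE TABLE sales (region TEXT, quarter TEXT, amount REAL)")
    conn.execute("CREATE TABLE salaries (employee TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)",
                     [("EMEA", "Q1", 100.0), ("APAC", "Q1", 50.0), ("EMEA", "Q2", 70.0)])
    print(run_generated_sql(conn, "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"))
    try:
        run_generated_sql(conn, "SELECT * FROM salaries")
    except sqlite3.DatabaseError as exc:
        print("rejected:", exc)
```

Enforcing the policy at the database layer means a query the model phrases unexpectedly is still rejected, which string-based filters cannot guarantee.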

Solution We Provided

- Intuitive Natural Language Interface: Users can simply type their questions in plain English (or other supported natural languages), just as they would ask a human data analyst. Our system leverages advanced Natural Language Understanding (NLU) to accurately interpret user intent, identify key entities, and understand the relationships between data points.

- Intelligent SQL Generation: At the core of our solution is a sophisticated generative AI engine that translates natural language queries into highly optimized and semantically correct SQL statements. This engine is trained on vast datasets of natural language questions and corresponding SQL queries, enabling it to handle complex joins, aggregations, filtering, and sorting operations across various database schemas.

- Dynamic Schema Understanding and Linking: Our system dynamically analyzes the database schema, including table names, column names, data types, and relationships. It intelligently links natural language terms to the appropriate database elements, even for non-standard naming conventions, ensuring accurate query generation without manual mapping.

- Contextual Awareness and Conversational Flow: The solution maintains conversational context, allowing users to ask follow-up questions and refine their queries iteratively. For example, a user can ask “Show me sales for Q1,” and then follow up with “Now show me by region” without re-specifying the initial query parameters.

- Robust Error Handling and User Guidance: In cases of ambiguous or incomplete queries, our system provides intelligent feedback, suggesting clarifications or alternative phrasing to guide the user towards a successful query. This proactive assistance minimizes frustration and improves the user experience.

- Enterprise-Grade Security and Access Control: We integrate seamlessly with existing enterprise security frameworks, ensuring that users can only access data for which they have authorized permissions. All generated SQL queries are validated against predefined security policies before execution, safeguarding sensitive information.

- Seamless Integration and Extensibility: Our solution offers flexible APIs and connectors, allowing for easy integration with existing data ecosystems, including business intelligence dashboards, data visualization tools, enterprise applications, and popular chat platforms. This ensures that data insights are accessible where and when they are needed.

- High Performance and Scalability: Designed for enterprise environments, our Text-to-SQL engine is optimized for speed and efficiency, capable of generating and executing complex queries on large datasets in near real-time. Its scalable architecture can handle a growing number of users and increasing data volumes without compromising performance.

- Auditability and Transparency: For compliance and debugging purposes, our system provides full audit trails of natural language queries, generated SQL, and query results, ensuring transparency and accountability in data access.
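Follow-ups such as "Show me sales for Q1" followed by "Now show me by region" can be supported by keeping the previous turn's structured intent and merging each new turn into it before SQL is generated. The sketch below assumes the NLU step already produces a small structured intent per turn. The `QueryIntent` fields and merge rules are hypothetical simplifications. LLM-based systems often pass the conversation history to the model directly instead.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class QueryIntent:
    """Hypothetical structured intent produced by the NLU step for one turn.

    Table and column names are assumed to be resolved against the schema
    already, so they never come verbatim from user input.
    """
    metric: Optional[str] = None                    # e.g. "SUM(amount)"
    table: Optional[str] = None                     # e.g. "sales"
    filters: Dict[str, str] = field(default_factory=dict)
    group_by: List[str] = field(default_factory=list)


def merge_turn(previous: Optional[QueryIntent], current: QueryIntent) -> QueryIntent:
    """Fill in whatever the follow-up leaves unspecified from the previous turn."""
    if previous is None:
        return current
    return QueryIntent(
        metric=current.metric or previous.metric,
        table=current.table or previous.table,
        filters={**previous.filters, **current.filters},  # newer filters win
        group_by=list(current.group_by or previous.group_by),
    )


def to_sql(intent: QueryIntent) -> Tuple[str, List[str]]:
    """Render the intent as parameterized SQL plus its bound values."""
    if not intent.metric or not intent.table:
        raise ValueError("intent needs a metric and a table")
    columns = intent.group_by + [intent.metric]
    sql = f"SELECT {', '.join(columns)} FROM {intent.table}"
    if intent.filters:
        sql += " WHERE " + " AND ".join(f"{col} = ?" for col in intent.filters)
    if intent.group_by:
        sql += " GROUP BY " + ", ".join(intent.group_by)
    return sql, list(intent.filters.values())


if __name__ == "__main__":
    # Turn 1: "Show me sales for Q1"
    context = merge_turn(None, QueryIntent(metric="SUM(amount)", table="sales",
                                           filters={"quarter": "Q1"}))
    print(to_sql(context))
    # Turn 2: "Now show me by region" only adds a grouping
    context = merge_turn(context, QueryIntent(group_by=["region"]))
    print(to_sql(context))
```

Filter values are bound as parameters rather than spliced into the SQL text. Removing a filter or grouping in a later turn would need explicit rules beyond this sketch.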

Technology Environment

Our generative AI Text-to-SQL solution is built upon a robust and scalable technology stack, designed for high performance, accuracy, and seamless integration into diverse enterprise data environments. The core components and technologies include:

- Machine Learning Frameworks:

- TensorFlow/PyTorch: Utilized for building and training advanced deep learning models, particularly large language models (LLMs) and transformer-based architectures, which are fundamental for Natural Language Understanding (NLU) and SQL generation.

- Cloud Infrastructure:

- Google Cloud Platform (GCP)/Amazon Web Services (AWS)/Microsoft Azure: Leveraging cloud-agnostic principles, the solution can be deployed on leading cloud providers. This provides access to scalable compute resources (GPUs/TPUs), managed database services, and object storage, ensuring high availability, global reach, and elastic scalability.

- Programming Languages:

- Python: The primary language for AI/ML development, NLU processing, and backend services, chosen for its extensive libraries (e.g., Hugging Face Transformers, spaCy) and frameworks for machine learning and data manipulation.

- Java/Go (for High-Performance API and Data Connectors): Used for building high-performance, low-latency API gateways and data connectors that interact with various database systems and orchestrate query execution.

- Database Connectivity and Management:

- SQLAlchemy/JDBC/ODBC: For establishing secure and efficient connections to a wide range of relational database management systems (RDBMS) like MySQL, PostgreSQL, SQL Server, Oracle, and Snowflake.

- Metadata Stores (e.g., Apache Atlas, Custom Solutions): For managing and storing database schema information, table and column descriptions, and data lineage, crucial for accurate schema linking and contextual understanding.

- Containerization and Orchestration:

- Docker: For packaging the Text-to-SQL application and its dependencies into portable containers, ensuring consistent deployment across different environments.

- Kubernetes: For orchestrating containerized applications, automating deployment, scaling, and management of the Text-to-SQL services, ensuring high availability and fault tolerance.

- API Management and Communication:

- RESTful APIs/gRPC: Providing secure, high-performance interfaces for client applications to submit natural language queries and receive SQL results and data insights.

- Message Queues (e.g., Apache Kafka, RabbitMQ): For asynchronous processing of complex queries, managing query queues, and enabling real-time data streaming for analytics.

- Version Control and CI/CD:

- Git/GitHub/GitLab: For collaborative development, version control, and managing code repositories.

- Jenkins/GitHub Actions/GitLab CI/CD: For automated testing, continuous integration, and continuous deployment pipelines, ensuring rapid and reliable delivery of updates and new features.

- Monitoring and Logging:

- Prometheus/Grafana: For real-time monitoring of system performance, query execution times, and resource utilization.

- ELK Stack (Elasticsearch, Logstash, Kibana): For centralized logging, analysis, and visualization of system logs, aiding in troubleshooting, performance optimization, and security auditing.

This robust technology environment ensures that our generative AI Text-to-SQL solution is not only powerful and accurate but also highly scalable, secure, and easily maintainable, capable of meeting the demanding requirements of various enterprise data analytics needs.
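Schema linking depends on having schema metadata at hand. The sketch below uses `sqlite3` and `PRAGMA table_info` as a stand-in for the connectors and metadata store listed above; with SQLAlchemy, `inspect(engine).get_columns(...)` plays a similar role. It reads table and column names, then links words in a question to columns with `difflib.get_close_matches` and a small synonym map. The synonym map, the 0.8 cutoff, and the function names are illustrative assumptions. Production systems typically combine lexical matching like this with embedding-based or LLM-based linking.

```python
import difflib
import re
import sqlite3
from typing import Dict, List, Tuple

# Hypothetical business synonyms, e.g. curated in the metadata store.
SYNONYMS = {"revenue": "amount", "turnover": "amount", "area": "region"}


def load_schema(conn: sqlite3.Connection) -> Dict[str, List[str]]:
    """Return {table: [column, ...]} for every user table in a SQLite database."""
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name")]
    schema = {}
    for table in tables:
        quoted = table.replace('"', '""')
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column
        schema[table] = [col[1] for col in conn.execute(f'PRAGMA table_info("{quoted}")')]
    return schema


def link_terms(question: str, schema: Dict[str, List[str]],
               cutoff: float = 0.8) -> List[Tuple[str, str]]:
    """Link words in the question to 'table.column' names by fuzzy matching."""
    columns: Dict[str, List[str]] = {}
    for table, cols in schema.items():
        for col in cols:
            columns.setdefault(col.lower(), []).append(f"{table}.{col}")
    links = []
    for word in re.findall(r"[a-z_]+", question.lower()):
        target = SYNONYMS.get(word, word)
        for match in difflib.get_close_matches(target, list(columns), n=1, cutoff=cutoff):
            links.extend((word, qualified) for qualified in columns[match])
    return links


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, quarter TEXT, amount REAL)")
    print(link_terms("Total revenue by regions for each quarter", load_schema(conn)))
```

The links (for example "regions" to `sales.region`) would then be passed to the SQL generator as grounding, instead of asking the model to guess column names.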

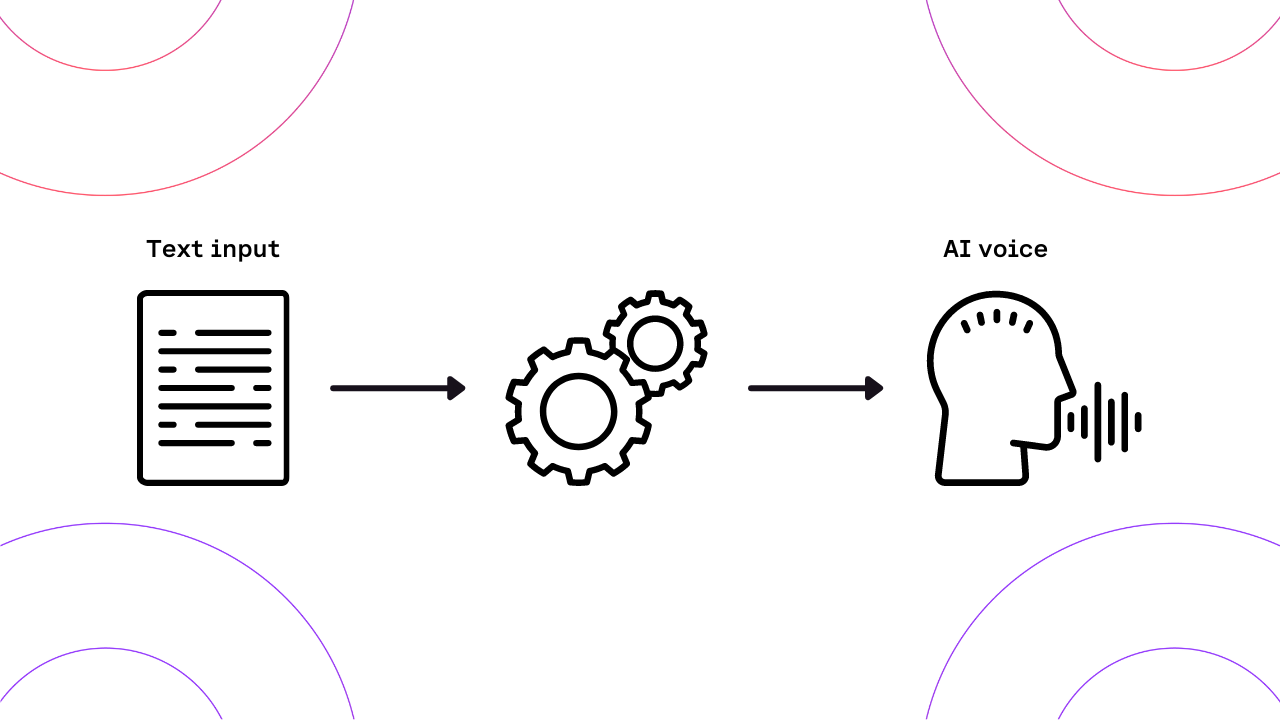

- Text-to-Speech Gen AI Use Case: Enhancing Customer Experience with Dynamic Voice Interactions

Business Challenge/Problem Statement

Traditional text-to-speech (TTS) solutions often suffer from robotic, unnatural-sounding voices, lacking the intonation, emotion, and nuance required for engaging human-like interactions. This limitation significantly impacts customer experience in various sectors, including customer support, e-learning, content creation, and accessibility services. Businesses struggle to deliver personalized and empathetic voice interactions at scale, leading to:

- Poor Customer Engagement: Monotonous voices can disengage customers, leading to frustration and reduced satisfaction in automated systems.

- Limited Brand Representation: Brands find it challenging to convey their unique tone and personality through generic, synthetic voices.

- Inefficient Content Production: Creating high-quality audio content for e-learning modules, audiobooks, or marketing materials is often time-consuming and expensive, requiring professional voice actors.

- Accessibility Barriers: While TTS aids accessibility, unnatural voices can still pose comprehension challenges for users with cognitive disabilities or those who rely heavily on auditory information.

There is a clear need for a next-generation TTS solution that leverages generative AI to produce highly natural, emotionally intelligent, and customizable voices, capable of transforming digital interactions into rich, human-like experiences.

Scope of Project

This project aims to develop and implement an advanced text-to-speech (TTS) system powered by generative AI, specifically designed to overcome the limitations of traditional TTS. The scope includes:

- Development of a Custom Voice Model: Training a generative AI model on a diverse dataset of human speech to create a highly natural and expressive voice. This model will be capable of generating speech with appropriate intonation, rhythm, and emotional nuances.

- Emotion and Tone Recognition: Integrating capabilities to detect and interpret emotional cues from input text, allowing the TTS system to generate speech that matches the intended sentiment (e.g., happy, sad, urgent).

- Multi-language and Accent Support: Expanding the system’s capabilities to support multiple languages and regional accents, ensuring global applicability and localized user experiences.

- API Integration: Providing a robust and easy-to-integrate API for seamless adoption across various platforms and applications, including customer service chatbots, virtual assistants, e-learning platforms, and content management systems.

- Scalability and Performance Optimization: Ensuring the solution is highly scalable to handle large volumes of text-to-speech conversions in real-time, with optimized performance for low-latency applications.

- User Customization: Allowing users to fine-tune voice parameters such as pitch, speaking rate, and emphasis, and potentially create unique brand voices.

- Ethical AI Considerations: Implementing safeguards to prevent misuse and ensure responsible deployment of the generative AI TTS technology, including addressing concerns around deepfakes and voice cloning.
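Voice parameters such as pitch, speaking rate, and emphasis are commonly passed to TTS engines as Speech Synthesis Markup Language (SSML), a W3C standard that many cloud TTS services accept. The sketch below maps a detected emotion label to SSML `<prosody>` settings and wraps selected words in `<emphasis>`. The emotion presets are illustrative assumptions, not values from the described system, and engines differ in which SSML features they honor. Many services accept a bare `<speak>` root, while the W3C specification also expects `version` and `xmlns` attributes.

```python
from typing import Iterable
from xml.sax.saxutils import escape

# Hypothetical presets; real values would be tuned per voice and per engine.
EMOTION_PROSODY = {
    "neutral": {"rate": "medium", "pitch": "medium"},
    "excited": {"rate": "fast", "pitch": "high"},
    "empathetic": {"rate": "slow", "pitch": "low"},
    "urgent": {"rate": "fast", "pitch": "medium"},
}


def build_ssml(text: str, emotion: str = "neutral", emphasize: Iterable[str] = ()) -> str:
    """Wrap `text` in SSML prosody chosen by `emotion`, emphasizing selected words."""
    prosody = EMOTION_PROSODY.get(emotion, EMOTION_PROSODY["neutral"])
    targets = {word.lower() for word in emphasize}
    pieces = []
    for word in text.split():
        safe = escape(word)  # escape &, <, > so input text cannot break the markup
        if word.strip(".,!?;:").lower() in targets:
            safe = f'<emphasis level="strong">{safe}</emphasis>'
        pieces.append(safe)
    attrs = " ".join(f'{name}="{value}"' for name, value in prosody.items())
    return f"<speak><prosody {attrs}>{' '.join(pieces)}</prosody></speak>"


if __name__ == "__main__":
    print(build_ssml("Your order ships today!", emotion="excited", emphasize=["today"]))
```

Unknown emotion labels fall back to the neutral preset, so an emotion classifier that produces an unexpected label does not break synthesis.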

Solution We Provided

Our generative AI-powered text-to-speech solution addresses the identified challenges by offering a sophisticated platform that transforms text into highly natural and emotionally rich spoken audio. Key features of our solution include:

- Human-like Voice Synthesis: Leveraging advanced neural networks and deep learning models, our system generates speech that closely mimics human intonation, rhythm, and pronunciation, significantly reducing the ‘robotic’ sound often associated with traditional TTS.

- Emotional Intelligence: The solution incorporates a sophisticated emotion recognition engine that analyzes the sentiment of the input text. This allows the AI to dynamically adjust the voice’s tone, pitch, and speaking style to convey appropriate emotions, such as empathy, excitement, or urgency, making interactions more engaging and relatable.

- Voice Customization and Branding: Clients can choose from a diverse library of pre-trained voices or work with us to create a unique brand voice. This includes fine-tuning parameters like accent, gender, age, and speaking pace, ensuring consistency with brand identity across all voice interactions.

- Multi-lingual and Multi-accent Support: Our solution supports a wide range of languages and regional accents, enabling businesses to cater to a global audience with localized and culturally appropriate voice content. This is crucial for international customer support, e-learning, and content distribution.

- Real-time Processing and Scalability: Engineered for high performance, the system can convert large volumes of text to speech in real-time, making it suitable for dynamic applications like live customer service calls, interactive voice response (IVR) systems, and real-time content generation. Its scalable architecture ensures consistent performance even during peak demand.

- Seamless API Integration: We provide a well-documented and easy-to-use API that allows for straightforward integration into existing applications and workflows. This includes web applications, mobile apps, content management systems, and enterprise software, minimizing development overhead for clients.

- Content Creation Efficiency: By automating the voiceover process with high-quality, natural-sounding voices, our solution drastically reduces the time and cost associated with producing audio content for e-learning modules, audiobooks, podcasts, marketing campaigns, and accessibility features.

- Ethical and Responsible AI: We prioritize ethical AI development, implementing robust measures to prevent misuse of voice synthesis technology. This includes watermarking generated audio and providing tools for content authentication, addressing concerns related to deepfakes and ensuring responsible deployment.
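For low-latency uses such as IVR and live calls, long responses are often split into sentence-sized chunks and synthesized one at a time, so playback can begin after the first chunk instead of after the whole text. The sketch below is a simple regex-based splitter with an assumed 200-character limit. It does not handle abbreviations such as "Dr." and is meant only to illustrate the idea.

```python
import re
from typing import Iterator

# Split after ., ! or ? followed by whitespace (naive: "Dr. Smith" is split too).
SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+")


def chunk_for_synthesis(text: str, max_chars: int = 200) -> Iterator[str]:
    """Yield one sentence at a time, hard-wrapping sentences longer than max_chars."""
    if max_chars < 1:
        raise ValueError("max_chars must be positive")
    for sentence in SENTENCE_BOUNDARY.split(text.strip()):
        while len(sentence) > max_chars:
            cut = sentence.rfind(" ", 0, max_chars + 1)  # last space within the limit
            if cut <= 0:
                cut = max_chars  # no usable space: break inside the word
            yield sentence[:cut].rstrip()
            sentence = sentence[cut:].lstrip()
        if sentence:
            yield sentence


if __name__ == "__main__":
    reply = "Thanks for calling. Your order has shipped! It should arrive tomorrow."
    for chunk in chunk_for_synthesis(reply):
        print(chunk)  # each chunk would be sent to the TTS engine in turn
```

Yielding sentences lazily lets the caller start synthesizing and streaming the first chunk while later text is still being generated.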

Technical Architecture

- Machine Learning Frameworks:

- TensorFlow/PyTorch: Utilized for building and training deep neural networks, particularly for advanced generative models like WaveNet, Tacotron, and Transformer-based architectures, which are fundamental to natural-sounding speech synthesis.

- Cloud Infrastructure:

- Google Cloud Platform (GCP)/Amazon Web Services (AWS)/Microsoft Azure: Leveraging cloud-agnostic principles, the solution can be deployed on leading cloud providers for scalable compute resources (GPUs/TPUs), storage, and managed services. This ensures high availability, global reach, and elastic scalability to handle varying workloads.

- Programming Languages:

- Python: The primary language for AI/ML development, data processing, and API backend services, due to its extensive libraries and frameworks for machine learning.

- Node.js/Go (for API Gateway/Microservices): Used for building high-performance, low-latency API gateways and microservices that handle requests and orchestrate interactions between different components of the TTS system.

- Database and Storage:

- NoSQL Databases (e.g., MongoDB, Cassandra): For storing large volumes of unstructured data, such as audio samples, voice models, and metadata, offering flexibility and scalability.

- Object Storage (e.g., Google Cloud Storage, AWS S3): For efficient and cost-effective storage of large audio datasets and generated speech files.

- Containerization and Orchestration:

- Docker: For packaging applications and their dependencies into portable containers, ensuring consistent deployment across different environments.

- Kubernetes: For orchestrating containerized applications, managing deployments, scaling, and ensuring high availability of the TTS services.

- API Management:

- RESTful APIs/gRPC: Providing well-defined interfaces for seamless integration with client applications, ensuring secure and efficient communication.

- Version Control and CI/CD:

- Git/GitHub/GitLab: For collaborative development, version control, and managing code repositories.

- Jenkins/GitHub Actions/GitLab CI/CD: For automated testing, building, and deployment pipelines, ensuring rapid and reliable delivery of updates and new features.

- Monitoring and Logging:

- Prometheus/Grafana: For real-time monitoring of system performance, resource utilization, and service health.

- ELK Stack (Elasticsearch, Logstash, Kibana): For centralized logging, analysis, and visualization of system logs, aiding in troubleshooting and performance optimization.

- Autonomous Agentic AI for Scientific Discovery

The Challenge

Traditional scientific research and drug discovery processes are slow, resource-intensive, and prone to human bias. Researchers often face bottlenecks due to:

- Manual literature review of vast scientific databases.

- Cognitive bias that limits exploration of unconventional hypotheses.

- High time and cost associated with hypothesis testing and simulations.

- Lack of scalability, as human researchers cannot operate continuously at large scale.

The goal was to design an autonomous, intelligent agent system capable of managing the entire R&D lifecycle—from hypothesis generation to experiment design, analysis, and recommendation—dramatically accelerating innovation.

Scope of Project

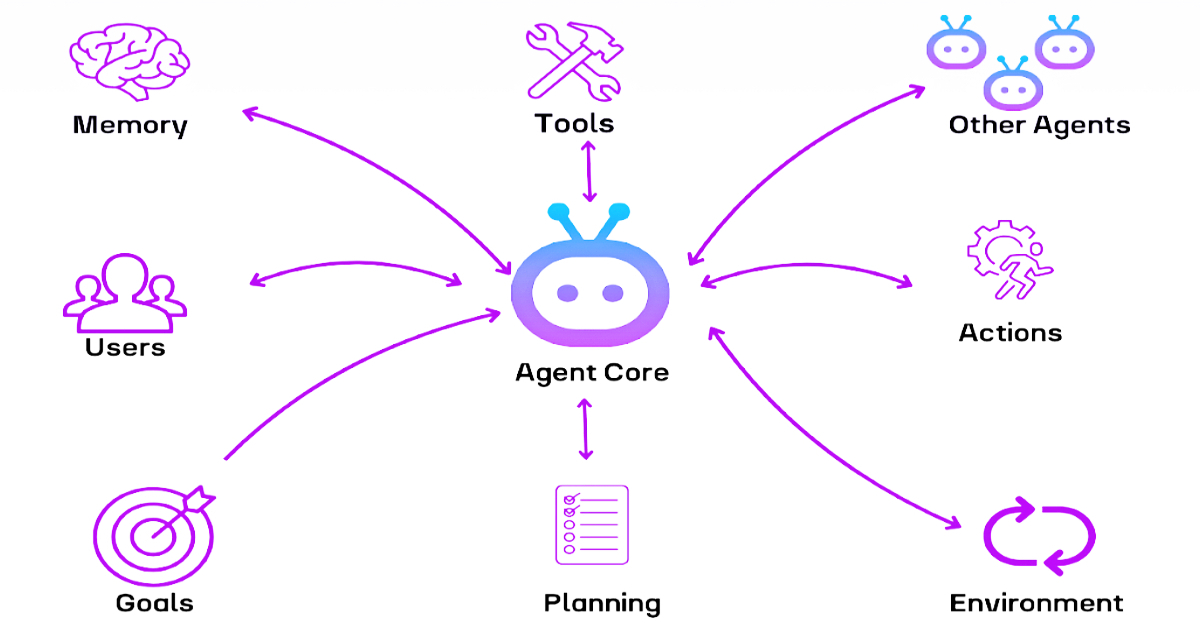

Solution: Agentic AI Workflow

- Task Decomposition & Planning (Project Manager Agent)

- Interprets high-level research goals and breaks them into structured tasks.

- Plans workflows covering literature review, hypothesis generation, simulations, and result analysis.

- Information Gathering & Synthesis (Research Assistant Agent)

- Crawls and queries scientific databases (PubMed, arXiv, patents).

- Summarizes findings and compiles a state-of-the-art knowledge base.

- Hypothesis Generation & Experiment Design (Scientist Agent)

- Formulates testable hypotheses.

- Designs in-silico experiments, writing and executing simulation code on cloud-based HPC infrastructure.

- Analysis, Learning, & Iteration (Lead Analyst Agent)

- Analyzes simulation results and identifies promising candidates.

- Employs agentic reasoning to refine hypotheses, optimize parameters, and rerun experiments.

- Reporting & Recommendation (Communicator Agent)

- Generates a comprehensive scientific report detailing methods, findings, and confidence scores.

- Provides actionable insights to human researchers for lab validation.

The deployment of an Agentic AI-driven R&D platform revolutionized scientific discovery workflows. By automating the entire research pipeline—data collection, hypothesis generation, simulation, and analysis—the solution significantly accelerated drug discovery and innovation while reducing cost and human error.
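The five-agent workflow above can be pictured as an orchestrator passing shared state through role-specific agents, with the analyst loop repeating until a stopping condition is met. The sketch below is only a structural illustration. Each agent is a stub function; in a real system each would wrap an LLM plus tools such as literature search or HPC job submission. The scoring rule, the 0.8 threshold, and the round limit are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ResearchState:
    """Shared state passed between agents; `log` doubles as an audit trail."""
    goal: str
    tasks: List[str] = field(default_factory=list)
    knowledge: List[str] = field(default_factory=list)
    hypotheses: List[str] = field(default_factory=list)
    scores: Dict[str, float] = field(default_factory=dict)
    report: str = ""
    log: List[str] = field(default_factory=list)


# Each stub stands in for an LLM-backed agent with its own tools.
def project_manager(state: ResearchState) -> None:
    state.tasks = ["literature review", "hypothesis generation", "simulation", "analysis"]


def research_assistant(state: ResearchState) -> None:
    state.knowledge.append(f"summary of prior work on {state.goal}")  # stub for database search


def scientist(state: ResearchState) -> None:
    state.hypotheses.append(f"candidate-{len(state.hypotheses)}")  # stub for design + simulation


def lead_analyst(state: ResearchState) -> None:
    # Stub scoring: each refinement round is pretended to score 0.2 higher.
    for i, hypothesis in enumerate(state.hypotheses):
        state.scores.setdefault(hypothesis, 0.5 + 0.2 * i)


def communicator(state: ResearchState) -> None:
    if not state.scores:
        state.report = "No candidates were evaluated."
        return
    best = max(state.scores, key=state.scores.get)
    state.report = (f"Best candidate: {best} (score {state.scores[best]:.2f}); "
                    "recommended for lab validation.")


def run_step(agent: Callable[[ResearchState], None], state: ResearchState) -> None:
    agent(state)
    state.log.append(agent.__name__)  # record every step for reproducibility


def run_pipeline(goal: str, threshold: float = 0.8, max_rounds: int = 5) -> ResearchState:
    state = ResearchState(goal=goal)
    run_step(project_manager, state)
    run_step(research_assistant, state)
    for _ in range(max_rounds):  # analyze, learn, iterate
        run_step(scientist, state)
        run_step(lead_analyst, state)
        if max(state.scores.values()) >= threshold:
            break
    run_step(communicator, state)
    return state


if __name__ == "__main__":
    result = run_pipeline("binding affinity of compound X")
    print(result.report)
    print(" -> ".join(result.log))
```

The explicit round limit keeps the loop bounded even if no hypothesis ever meets the threshold. The step log mirrors the "fully documented digital record" described in the impact table.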

Business Impact

| Impact Area | Results Achieved |
|---|---|
| Time-to-Discovery | Reduced early-stage R&D timelines from years to weeks or days, accelerating go-to-market strategy. |
| Cost Optimization | Decreased manual research and simulation costs by 40–60% through automation. |
| Exploration of Novel Solutions | Identified non-obvious, high-potential compounds by overcoming human cognitive bias. |
| Scalability | Enabled 24/7 autonomous research, continuously iterating on hypotheses without downtime. |
| Reproducibility & Transparency | Maintained a fully documented digital record of every research step for auditing and replication. |
| Innovation Enablement | Provided scientists with validated, AI-driven recommendations, freeing them to focus on creative problem-solving. |

Technical Architecture

- Core AI Techniques: Multi-agent systems, agentic reasoning, natural language processing, reinforcement learning.

- Scientific Tools: Protein structure prediction models (e.g., AlphaFold 3), molecular docking simulations.

- Infrastructure: Cloud-based HPC for large-scale simulations and analytics.

- Case Study – End-to-End Automated Supply Chain Resolution Agent

The Challenge

Global supply chains are increasingly complex and vulnerable to disruptions caused by weather events, geopolitical tensions, port congestion, transportation delays, and supplier issues. Traditional supply chain management systems are often reactive, providing alerts but requiring human intervention for problem-solving. This leads to:

- High downtime costs due to delayed shipments or factory shutdowns.

- Siloed decision-making without optimization across the entire supply chain.

- Slow response times, resulting in lost revenue and reduced customer satisfaction.

- Limited scalability as human teams cannot handle disruptions at global scale in real-time.

The goal was to build an autonomous AI-driven system capable of diagnosing, planning, and resolving supply chain disruptions end-to-end without human intervention, ensuring resilience and agility.

Scope of Project

To create an Agentic AI solution that acts as a digital supply chain manager, autonomously monitoring shipments, evaluating contingency plans, and executing resolutions in real time—while proactively communicating with all stakeholders.

Solution: Agentic AI Supply Chain Workflow

- Continuous Monitoring & Diagnosis (Sentinel Agent)

- Constantly monitors IoT sensor data, weather forecasts, port congestion databases, ERP inventory levels, and shipping carrier updates.

- Detects disruptions (e.g., delayed shipments, material shortages, or unexpected factory downtimes).

- Strategic Planning & Evaluation (Strategist Agent)

- Generates multiple response scenarios (rerouting shipments, sourcing alternatives, rescheduling production).

- Evaluates solutions against cost, time-to-resolution, production schedules, and downstream customer impact.

- Autonomous Execution & Negotiation (Executor Agent)

- Executes the chosen plan autonomously:

- Places purchase orders with alternate suppliers.

- Reroutes shipments via logistics carriers.

- Updates ERP and production systems in real time.


- Proactive Communication & Stakeholder Management (Coordinator Agent)

- Notifies relevant teams with clear, actionable updates:

- Factory managers receive updated production timelines.

- Procurement teams receive cost approvals.

- Customers receive proactive delivery updates.

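The Strategist Agent's evaluation step can be illustrated with a simple weighted scoring of candidate scenarios. The sketch below is hypothetical. The `Scenario` fields, the weights, and the min-max normalization are assumptions chosen for illustration, not the logic of the deployed agent. It also omits the production-schedule criterion.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """A candidate response to a disruption (illustrative fields only)."""
    name: str
    cost_usd: float          # incremental cost of executing the response
    resolution_hours: float  # estimated time until the disruption is resolved
    customer_impact: float   # 0 (none) to 1 (severe), e.g. share of orders delayed


# Assumed weights; for every criterion a lower raw value is better.
WEIGHTS = {"cost_usd": 0.40, "resolution_hours": 0.35, "customer_impact": 0.25}


def rank_scenarios(scenarios: list[Scenario]) -> list[tuple[str, float]]:
    """Rank scenarios by a weighted sum of min-max normalized criteria (higher is better)."""
    # Min and max of each criterion across all candidates, used for normalization.
    bounds = {
        field: (min(getattr(s, field) for s in scenarios), max(getattr(s, field) for s in scenarios))
        for field in WEIGHTS
    }
    ranked = []
    for s in scenarios:
        total = 0.0
        for field, weight in WEIGHTS.items():
            lo, hi = bounds[field]
            # Best (lowest) value maps to 1.0, worst (highest) to 0.0.
            normalized = 1.0 if hi == lo else (hi - getattr(s, field)) / (hi - lo)
            total += weight * normalized
        ranked.append((s.name, round(total, 3)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


options = [
    Scenario("reroute via alternate port", 42_000, 36, 0.10),
    Scenario("source from backup supplier", 65_000, 12, 0.05),
    Scenario("reschedule production", 18_000, 72, 0.30),
]
for name, score in rank_scenarios(options):
    print(f"{score:.3f}  {name}")
```

With these illustrative numbers, the reroute option narrowly outranks the backup supplier. Shifting enough weight toward resolution time reverses the order, so in practice the weights would need to reflect each business's priorities.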

Business Impact

The Automated Supply Chain Resolution Agent transformed traditional supply chain operations from reactive firefighting to proactive resilience management. By autonomously detecting disruptions, strategizing contingency plans, and executing actions, it empowered enterprises to maintain uninterrupted production, reduce losses, and enhance customer confidence.

| Impact Area | Results Achieved |
|---|---|
| Disruption Resolution Speed | Reduced time to resolve disruptions from days to minutes or hours, ensuring uninterrupted production. |
| Cost Optimization | Lowered operational losses by 40–50% through proactive adjustments and risk-based decision-making. |
| Production Continuity | Eliminated costly downtime, safeguarding millions in potential revenue loss per incident. |
| Customer Satisfaction | Improved on-time delivery rates by 30–40%, driving better customer trust and retention. |
| Scalability | Managed thousands of shipments and events simultaneously without increasing workforce. |
| Continuous Improvement | Learned from historical disruptions, improving response quality over time. |

Technical Architecture

- AI Capabilities: Agentic reasoning, reinforcement learning, NLP-driven communication.

- Data Integration: IoT sensors, weather APIs, logistics platforms, ERP systems.

- Automation Layer: Robotic process automation (RPA) and API-based system integrations.

- Case Study: Cognitive AI for Intelligent and Empathetic Customer Support

Industry: Customer Service & Technology

Challenges

- Traditional chatbots lacked emotional intelligence and context awareness, leading to frustrating user experiences.

- Resolving complex, multi-step technical issues required frequent escalation to human agents, slowing resolution times.

- High operational costs due to the need for large customer support teams to handle intricate cases.

- Lack of proactive issue detection, resulting in delayed responses to widespread problems.

- Disconnected customer experience due to limited memory of prior interactions, causing repetitive queries and reduced satisfaction.

Scope of the Project

- Deploy Cognitive AI agents capable of understanding customer intent, context, and emotion.

- Enable multi-turn, stateful conversations for resolving complex customer issues end-to-end without human intervention.

- Automate diagnostic workflows, software updates, and issue resolution through agentic reasoning.

- Continuously improve system intelligence by learning from every customer interaction.

- Build a proactive support system to detect and mitigate issues before customers escalate them.

Solution We Provided

- Implemented a Cognitive AI-powered customer support platform with the following key features:

- Advanced NLP & Emotional Intelligence: Ability to detect urgency, frustration, and intent, allowing AI agents to respond empathetically.

- Agentic Reasoning Module: AI autonomously diagnoses technical issues, executes multi-step solutions (e.g., firmware updates), and validates resolutions.

- Stateful Contextual Memory: Retains conversation history and past interactions, ensuring smooth and personalized responses.

- Continuous Learning: AI improves its knowledge base in real-time, optimizing performance and reducing repetitive issues.

- Proactive Issue Detection: The system identifies patterns in isolated cases and alerts teams about potential large-scale problems.
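Proactive issue detection of this kind can be approximated by counting similar tickets in a sliding time window. In this minimal sketch, the `SpikeDetector` class, the one-hour window, and the threshold of three tickets are assumptions. It also assumes that an upstream NLP step has already assigned each ticket an issue category and that tickets arrive in time order.

```python
from collections import Counter, deque
from datetime import datetime, timedelta


class SpikeDetector:
    """Flags an issue category once its ticket count within a sliding time window hits a threshold."""

    def __init__(self, window: timedelta, threshold: int) -> None:
        self.window = window
        self.threshold = threshold
        self.events = deque()    # (timestamp, category) pairs, oldest first
        self.counts = Counter()  # tickets per category currently inside the window

    def add(self, ts: datetime, category: str) -> bool:
        """Record a ticket (assumed to arrive in time order); return True if its category should alert."""
        self.events.append((ts, category))
        self.counts[category] += 1
        # Evict tickets that are now older than the window.
        while ts - self.events[0][0] > self.window:
            _, old_category = self.events.popleft()
            self.counts[old_category] -= 1
        return self.counts[category] >= self.threshold


detector = SpikeDetector(window=timedelta(hours=1), threshold=3)
start = datetime(2024, 1, 1, 9, 0)
for minutes in (0, 10, 20):
    alert = detector.add(start + timedelta(minutes=minutes), "firmware-update-failure")
print(alert)  # True: three similar tickets within one hour
```

A deque keeps eviction cheap. Because tickets arrive in time order, only the oldest entries ever need to be dropped.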

Business Impact

- Enhanced Customer Experience: Provided 24/7 empathetic and hyper-personalized support, leading to a significant rise in CSAT (Customer Satisfaction) scores and brand loyalty.

- Automated 30-50% of complex support tickets, reducing dependency on human agents and cutting operational costs.

- Reduced response and resolution times significantly, improving SLAs and enabling faster troubleshooting.

- Handled growing customer volumes without increasing headcount, making support operations future-ready.

- Early detection of widespread technical issues prevented high call volumes and improved brand trust.

Technology Stack

- Natural Language Processing (NLP): Advanced parsing for sentiment and intent detection.

- Machine Learning & Cognitive AI Models: For reasoning, continuous learning, and decision-making.

- Agentic AI Frameworks: Enables autonomous execution of workflows and diagnostics.

- Cloud Infrastructure: For scalable and secure AI deployment.

- Integration Layer: APIs for connecting customer support platforms, CRM systems, and IoT device diagnostics.

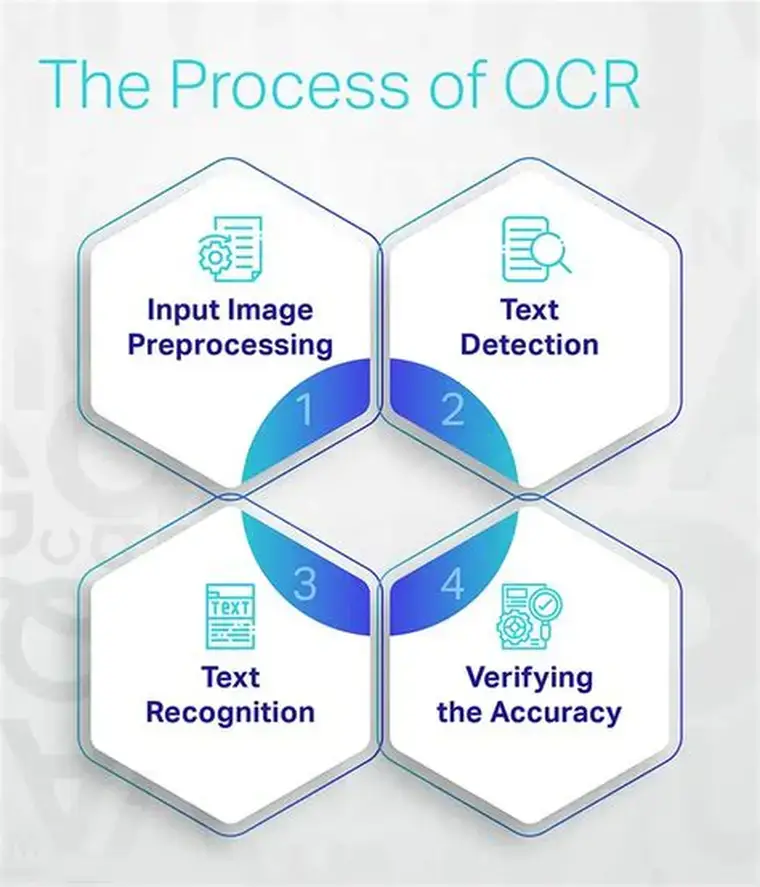

- Automating Invoice Processing with OCR

Industry

Challenges

- Document Variability: Invoices from hundreds of vendors had inconsistent layouts and terminologies, making automation difficult.

- Low-Quality Scans & Images: Poor-quality scanned invoices required preprocessing to achieve high OCR accuracy.

- Handwriting Recognition: Occasional handwritten fields needed additional verification workflows.

- Integration Complexity: Synchronizing extracted data with multiple ERP and procurement platforms was challenging.

- Change Management: Finance teams needed training to adapt to the automated process.

Scope of the Project

- Standardize invoice processing for multiple vendors.

- Integrate automation with ERP and accounting systems.

- Reduce processing time, errors, and costs while improving cash flow visibility.

Business Impact

| KPI | Before OCR Implementation | After OCR Implementation | Improvement |
|---|---|---|---|
| Processing Time per Invoice | 5-15 mins | < 30 seconds | ~90% faster |
| Error Rate | 15-20% | < 2% | ~88% reduction |
| Straight-Through Processing | <10% | 60-80% | Significant |
| Cost per Invoice | High (manual labor) | 50-70% lower | Major savings |
| Annual Labor Hours Saved | N/A | ~2,500 hours (for 50,000 invoices/year) | Operational efficiency boost |

- Faster Payment Cycles led to early payment discounts and improved supplier relationships.

- Cash Flow Predictability improved due to real-time invoice visibility.

- Finance Teams could focus on strategic work rather than data entry.

Technology Environment

| Technology | Purpose |
|---|---|
| OCR Engines (Tesseract, Roboflow OCR API) | Core text recognition |
| AI Models (LayoutLMv3, GPT-4o) | Contextual understanding, multimodal field detection |
| Computer Vision Object Detection | Field and table localization |
| ERP/Accounting Systems (SAP, Oracle, QuickBooks, etc.) | Data integration |
| APIs & Middleware | Automated workflows and validation |
| Preprocessing Tools (OpenCV) | Image enhancement and quality improvement |
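The following sketch illustrates the validation step after OCR. It pulls header fields and line items out of plain OCR text (for example, Tesseract output) and flags invoices that need review. It substitutes simple regular expressions for the layout-aware models listed above. The patterns, field names, and sample invoice are hypothetical and cover a single vendor layout.

```python
import re
from decimal import Decimal

# Hypothetical patterns for a single vendor layout.
FIELD_PATTERNS = {
    "invoice_number": re.compile(r"Invoice\s*(?:No\.?|#)\s*:?\s*([A-Z0-9-]+)", re.IGNORECASE),
    "invoice_date": re.compile(r"Date\s*:?\s*(\d{4}-\d{2}-\d{2})", re.IGNORECASE),
    "total": re.compile(r"Total\s*(?:Due)?\s*:?\s*\$?([\d,]+\.\d{2})", re.IGNORECASE),
}
# A line item is a line ending in an amount, excluding any line containing "total" (Subtotal, Total Due).
LINE_ITEM = re.compile(r"^(?!.*total)(.+?)\s+\$?([\d,]+\.\d{2})\s*$", re.IGNORECASE | re.MULTILINE)


def to_decimal(amount: str) -> Decimal:
    """Convert an OCR'd amount such as '1,234.50' to an exact Decimal."""
    return Decimal(amount.replace(",", ""))


def extract_invoice(ocr_text: str) -> dict:
    """Extract header fields and line items, and list anything that needs human review."""
    result = {"needs_review": []}
    for field, pattern in FIELD_PATTERNS.items():
        match = pattern.search(ocr_text)
        if match:
            result[field] = match.group(1)
        else:
            result["needs_review"].append(f"missing {field}")
    result["line_items"] = [(desc.strip(), to_decimal(amt)) for desc, amt in LINE_ITEM.findall(ocr_text)]
    if "total" in result:
        result["total"] = to_decimal(result["total"])
        # Cross-check: line items should add up to the stated total.
        if sum(amount for _, amount in result["line_items"]) != result["total"]:
            result["needs_review"].append("line items do not sum to total")
    return result


sample = """ACME Supplies Ltd
Invoice No: INV-1042
Date: 2024-03-15
Steel brackets x 40   $480.00
Shipping   $35.50
Total Due: $515.50
"""
print(extract_invoice(sample))
```

Using `Decimal` rather than `float` keeps the sum-to-total check exact. Invoices that fail any check would go to a manual review queue rather than being posted to the ERP.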

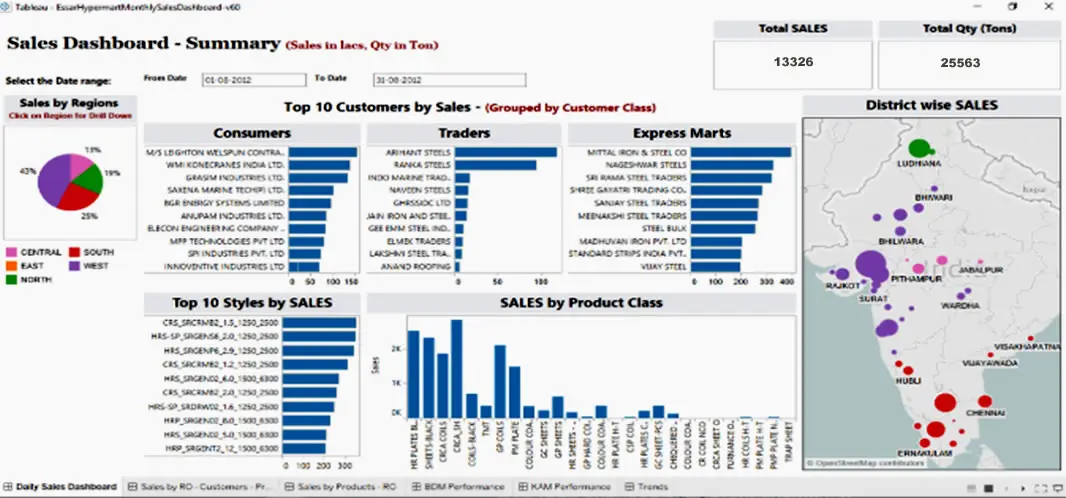

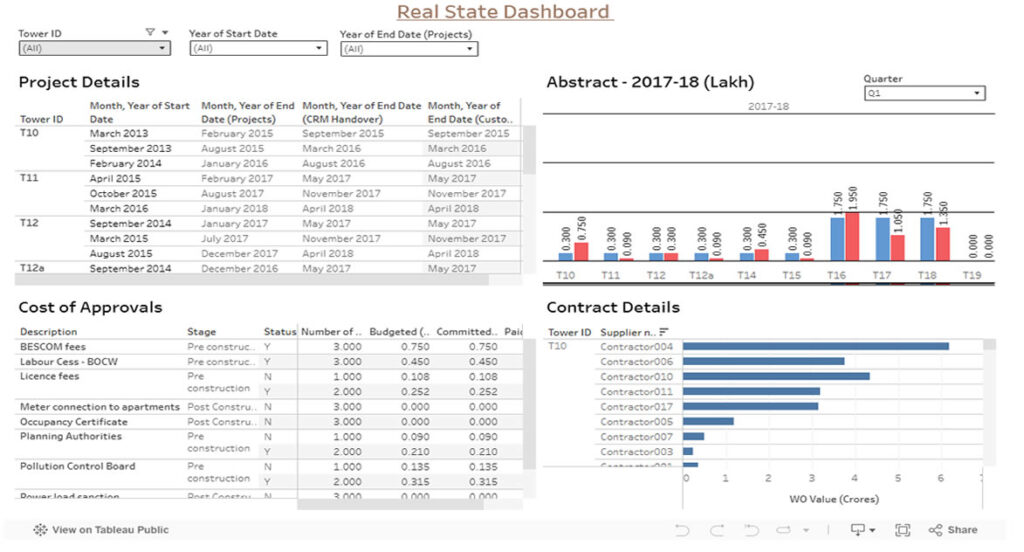

- Dashboard: Real-Time Insights

Industry

Challenges

Scope of the Project

- Migrating reporting from Excel to Tableau using the Infinity data source.

- Building a Master Production Schedule (MPS) Dashboard to monitor inventory and supply chain metrics.

- Offering historical, summary, and Weeks of Supply (WOS) views at multiple hierarchy levels (item, location, and region).

- Ensuring data integrity and real-time updates to improve decision-making.

Solution Provided

- Data Preparation and Processing:

Raw data from multiple systems was integrated through the Infinity platform and processed using Alteryx. Existing macros were enhanced with new formulas to clean, transform, and standardize the data at item, location, and region levels. This created a reliable foundation for accurate reporting.

- Master Production Schedule (MPS) Output:

The processed data was used to generate a detailed MPS output, which included metrics such as Weeks of Supply (WOS), backorders, calculated on-hand inventory, deploy-in/out movements, plan orders, and safety stock levels.

- Interactive Tableau Dashboards:

The MPS output was visualized in Tableau, with dashboards designed to provide historical views, summary reports, and WOS analysis. Drill-down capabilities allowed users to analyze performance at granular levels, such as by warehouse, product line, or region.

- Automation and Real-Time Insights:

Automated workflows in Alteryx and Tableau ensured weekly updates with minimal manual intervention. This provided near real-time visibility into stock levels, demand, and supply chain risks.

- Actionable Analytics:

The dashboards enabled supply chain teams to quickly identify inefficiencies, manage stock movements, and balance inventory across locations. Early warnings for potential stockouts or overstock situations allowed timely corrective actions.
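The MPS metrics above can be approximated with a week-by-week inventory projection. This minimal sketch rests on three assumptions. WOS is taken as projected on-hand divided by average weekly demand. Negative projected on-hand is treated as a backorder. An 8-week ceiling marks overstock. The field names and thresholds are illustrative, not the Alteryx macros used in the project.

```python
from dataclasses import dataclass


@dataclass
class WeekPlan:
    demand: float       # forecast units shipped to customers in the week
    plan_orders: float  # planned receipts from production or purchasing
    deploy_in: float    # transfers arriving from other locations
    deploy_out: float   # transfers sent to other locations


def project_inventory(
    on_hand: float, weeks: list[WeekPlan], safety_stock: float, max_wos: float = 8.0
) -> list[tuple]:
    """Roll projected on-hand forward week by week and flag stock risks."""
    avg_demand = sum(w.demand for w in weeks) / len(weeks)
    rows = []
    for week, plan in enumerate(weeks, start=1):
        on_hand += plan.plan_orders + plan.deploy_in - plan.deploy_out - plan.demand
        backorder = max(0.0, -on_hand)  # negative projected on-hand is treated as a backorder
        wos = max(on_hand, 0.0) / avg_demand if avg_demand else float("inf")
        if backorder > 0:
            status = "STOCKOUT"
        elif on_hand < safety_stock:
            status = "BELOW SAFETY STOCK"
        elif wos > max_wos:
            status = "OVERSTOCK"
        else:
            status = "OK"
        rows.append((week, on_hand, backorder, round(wos, 1), status))
    return rows


plan = [WeekPlan(100, 0, 0, 0), WeekPlan(100, 0, 20, 0), WeekPlan(100, 1000, 0, 50), WeekPlan(100, 0, 0, 0)]
for row in project_inventory(on_hand=150, weeks=plan, safety_stock=80):
    print(row)
```

A production version would typically use forward-looking forecast demand for WOS rather than a flat average. The average keeps the example short.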

Business Impact

- Improved Efficiency: Automated updates significantly reduced manual effort, freeing teams to focus on strategic planning rather than data preparation.

- Real-Time Visibility: Managers could monitor inventory, backorders, and WOS across all locations with up-to-date insights.

- Risk Reduction: Proactive identification of supply-demand imbalances minimized the risk of stockouts and excess inventory.

- Faster Decision-Making: Interactive dashboards and drill-down analysis enabled leaders to act quickly and confidently.

- Scalability: The solution established a foundation that could easily adapt to growing data volumes and additional KPIs.

Technical Architecture

- Data source: Infinity (inventory and supply chain systems).

- Data Processing & ETL: Alteryx (data cleaning, transformation, aggregation, macros, and formula enhancements)

- Visualization & Reporting: Tableau (MPS dashboards, historical views, summary views, WOS analysis)

- Automation: Scheduled workflows and automated data refreshes in Alteryx and Tableau

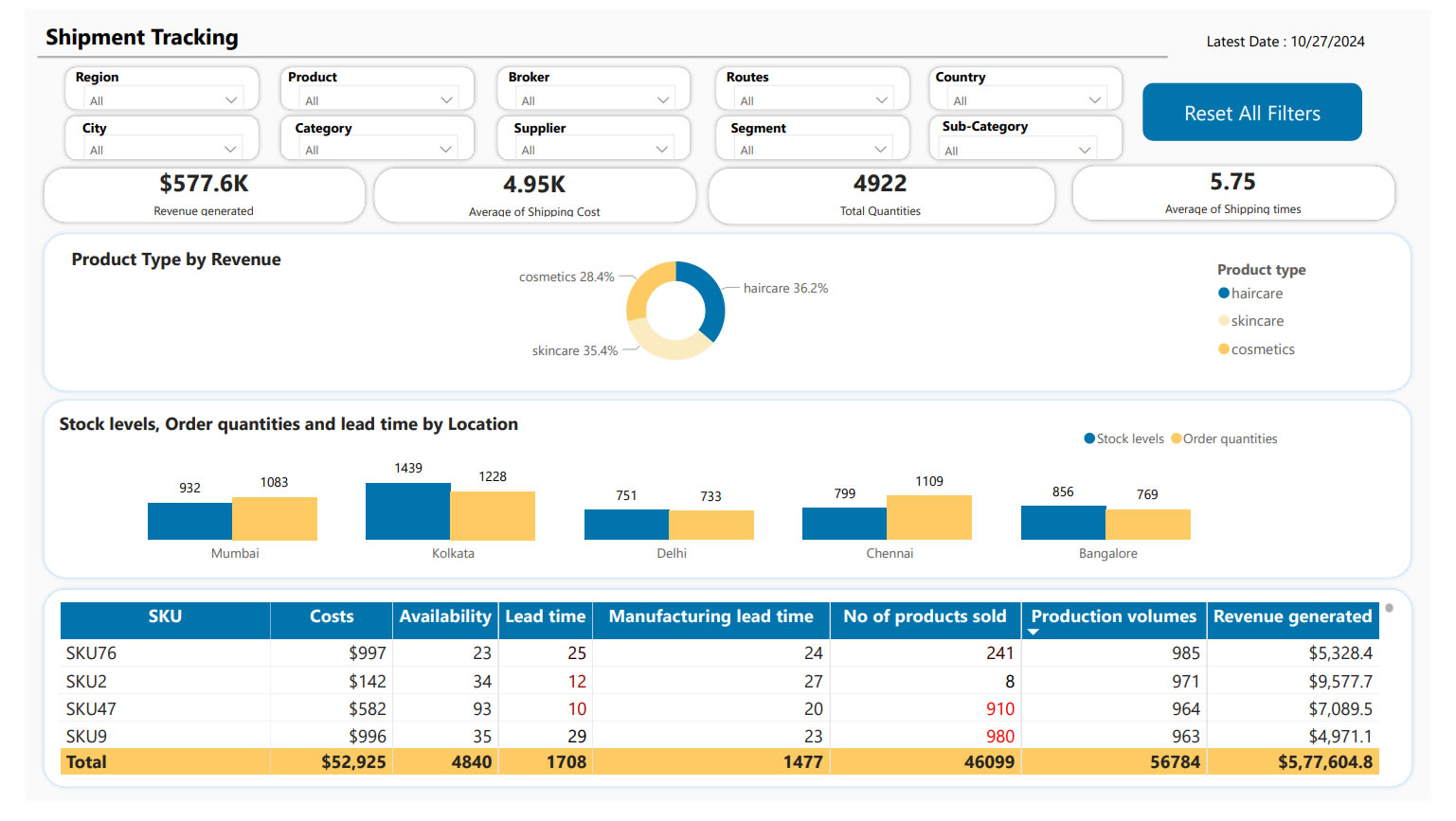

- Shipment Tracking

Challenges

Key challenges included:

- Stockouts of fast-moving products, resulting in missed sales opportunities.

- Overstocking of slow-moving items, leading to high storage costs and waste.

- Supplier delays and logistics bottlenecks, which increased shipping costs and eroded customer satisfaction.

- High defect rates, damaging brand reputation.

- Fragmented and manual processes, with reliance on Tableau server exports, Excel mapping, and pivot table analysis—limiting the ability to scale, automate, and provide timely insights.

- Poor demand forecasting caused by inconsistent data, delaying critical business decisions.

Scope of Project

- Consolidating shipment data from multiple sources into a single, automated reporting system.

- Providing visibility into order fulfillment, supplier performance, delivery timelines, and product movement.

- Implementing automated alerts for stockouts and overstock conditions.

- Empowering decision-makers with real-time, drill-down insights into product sales trends, partner performance, and logistics efficiency.

Solution Provided

A comprehensive Shipment Tracking Dashboard was developed in Power BI, supported by an automated ETL and data integration pipeline.

- Data Integration & Automation

Data was ingested from multiple sources, including Nielsen, SharePoint, and Excel files. Alteryx and Python scripts were used to automate extraction, transformation, and loading (ETL) processes. A dedicated database (Snowflake) was created to store cleaned and structured shipment data, removing dependency on manual Excel exports.

- Data Preparation & Transformation

Using Alteryx workflows, the manual Excel and pivot-table-based process was eliminated. Data was cleansed, standardized, and mapped to common dimensions (product hierarchy, suppliers, time, and regions). DAX modeling in Power BI enabled creation of calculated metrics for shipment value, lead times, and stock movement.

- Power BI Dashboard Development

The dashboard provided stakeholders with:

- A time-period comparison of total shipment value.

- Drill-down capabilities to analyze performance at product, sub-brand, or supplier level.

- Insights into shipment delays, supplier performance, and defect rates.

- Automated alerts and KPIs for stockouts, overstock risks, and fulfillment gaps.

- Real-Time Monitoring & Alerts

The dashboards refreshed automatically on a daily/weekly basis, giving near real-time visibility. Notifications highlighted underperforming suppliers, late deliveries, and shipment anomalies, enabling proactive decision-making.

- Scalable Architecture

The solution was designed to scale to additional geographies, suppliers, and KPIs. SharePoint was integrated to host source files that are updated as new data becomes available, so the pipeline picks up changes without manual intervention.

This end-to-end automation transformed shipment analysis from a reactive, Excel-based process into a proactive, real-time system.
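The time-period comparison and late-delivery notifications described above come down to a few aggregations. In the project these were built with Alteryx, Python, and DAX. The standalone Python sketch below assumes a simplified, hypothetical `Shipment` record, an illustrative prior-period total, and a 20% late-delivery threshold.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Shipment:
    supplier: str
    value: float
    promised: date
    delivered: date


def pct_change(current: float, previous: float) -> Optional[float]:
    """Period-over-period % change in shipment value; None when the prior period is zero."""
    return None if previous == 0 else (current - previous) * 100 / previous


def late_supplier_alerts(shipments: list[Shipment], max_late_rate: float = 0.2) -> dict[str, float]:
    """Return suppliers whose share of late deliveries exceeds max_late_rate."""
    totals: dict[str, int] = {}
    late: dict[str, int] = {}
    for s in shipments:
        totals[s.supplier] = totals.get(s.supplier, 0) + 1
        if s.delivered > s.promised:
            late[s.supplier] = late.get(s.supplier, 0) + 1
    rates = {name: late.get(name, 0) / count for name, count in totals.items()}
    return {name: rate for name, rate in rates.items() if rate > max_late_rate}


this_period = [
    Shipment("Supplier A", 12_000, date(2024, 5, 1), date(2024, 5, 1)),
    Shipment("Supplier A", 8_000, date(2024, 5, 8), date(2024, 5, 10)),
    Shipment("Supplier B", 15_000, date(2024, 5, 3), date(2024, 5, 2)),
    Shipment("Supplier B", 12_000, date(2024, 5, 9), date(2024, 5, 9)),
]
previous_total = 40_000  # illustrative prior-period shipment value
current_total = sum(s.value for s in this_period)
print(pct_change(current_total, previous_total))  # 17.5
print(late_supplier_alerts(this_period))          # {'Supplier A': 0.5}
```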

Business Impact

- Reduced Manual Effort: Automation removed dependency on manual Excel mapping and pivot tables, cutting reporting time significantly.

- Improved Visibility: Stakeholders gained access to a unified view of shipments across suppliers, regions, and product lines.

- Operational Efficiency: Early detection of stockouts, overstock, and supplier delays improved inventory balance and reduced costs.

- Faster Decision-Making: Interactive dashboards enabled managers to drill down to granular details and respond quickly.

- Enhanced Customer Satisfaction: Timely shipments and fewer disruptions improved service reliability and brand trust.

- Scalable Insights: The architecture allowed easy expansion to more datasets, ensuring long-term sustainability.

Technology Stack

- Data Sources: Nielsen, SharePoint, Excel, Supplier Data

- ETL & Data Processing: Alteryx, Python, Snowflake

- Visualization & Analytics: Power BI Desktop & Power BI Services (DAX modeling, interactive dashboards, automated alerts)

- Collaboration & Automation: SharePoint for file hosting, Power BI Services for secure sharing and role-based access

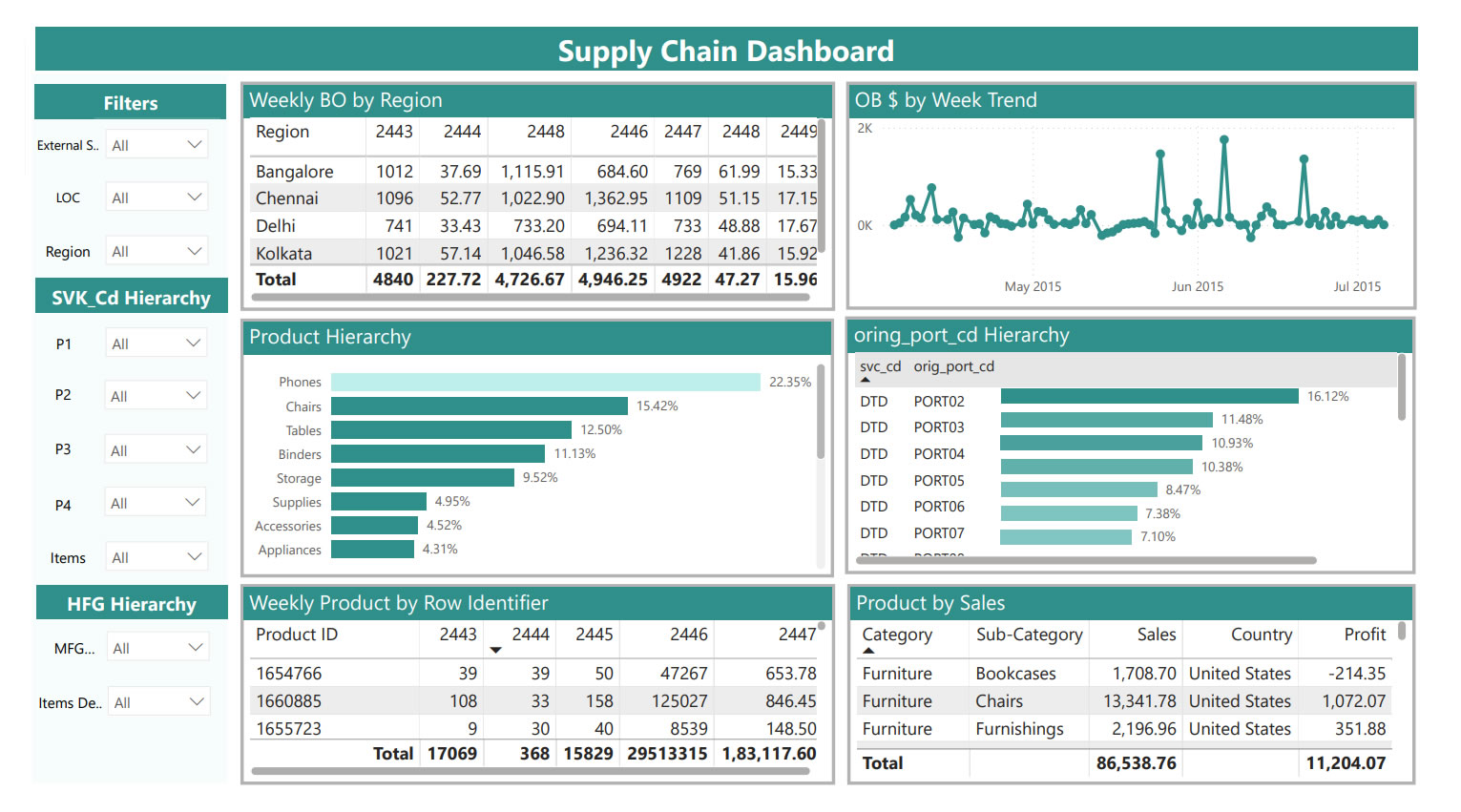

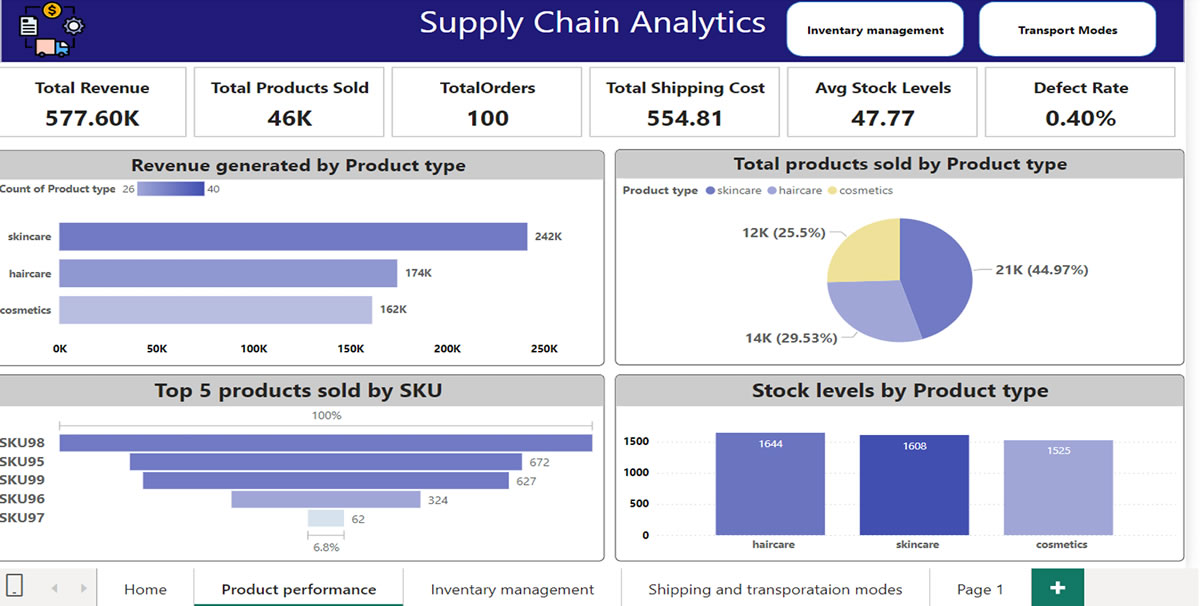

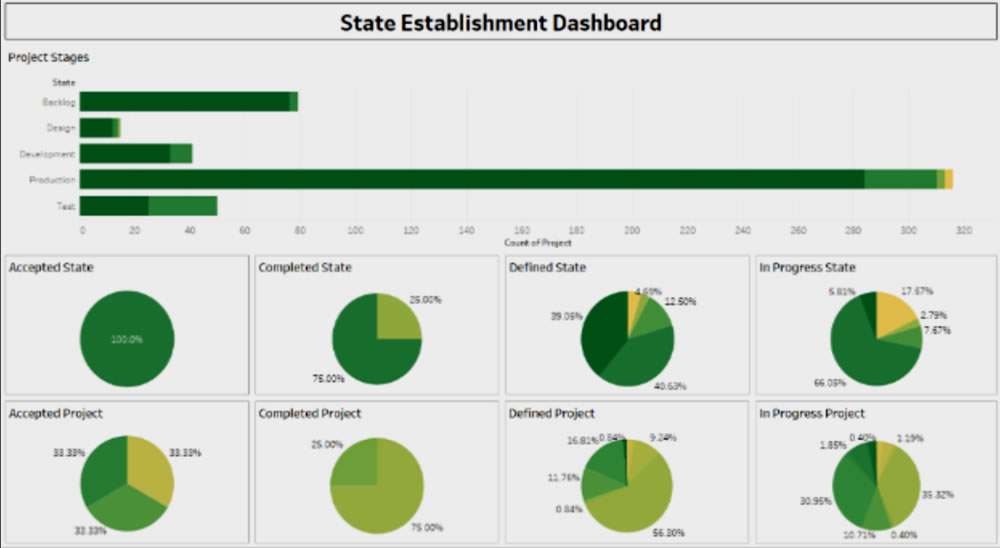

- Supply Chain Analytics

Challenges

Scope of Project

- Inventory Optimization: Automated alerts were implemented for both low stock levels and excess inventory, ensuring timely replenishment and avoiding unnecessary overstocking.

- Supplier Performance Management: Dashboards tracked supplier lead times and order fulfillment rates, enabling the identification of reliable partners and quick action against underperforming suppliers.

- Logistics & Shipping Efficiency: Analytics were used to monitor shipping costs and optimize delivery routes. Real-time tracking of delivery times helped reduce delays and improve on-time fulfillment.

- Quality Control Monitoring: Dashboards monitored defect rates and inspection results, allowing the business to identify recurring issues and reduce returns.

- Predictive Insights: Sales trends and demand forecasting models provided better planning capability, helping to align production with actual consumer demand.
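The low-stock and excess-inventory alerts can be expressed with the standard reorder-point rule: reorder point = average daily demand × supplier lead time + safety stock. This rule also ties the alerts to the supplier lead times tracked on the dashboards. The sketch below is illustrative. The `StockItem` fields and the 60-day cover ceiling for excess stock are assumptions, not the project's actual rules.

```python
from dataclasses import dataclass


@dataclass
class StockItem:
    sku: str
    on_hand: float
    avg_daily_demand: float
    lead_time_days: float          # supplier lead time, e.g. from the supplier dashboard
    safety_stock: float
    max_days_of_cover: float = 60  # assumed ceiling; stock above it counts as excess


def inventory_alert(item: StockItem) -> str:
    """Classify an item as REORDER, EXCESS, or OK."""
    # Reorder when stock would not cover demand during the supplier lead time plus safety stock.
    reorder_point = item.avg_daily_demand * item.lead_time_days + item.safety_stock
    excess_level = item.avg_daily_demand * item.max_days_of_cover
    if item.on_hand <= reorder_point:
        return "REORDER"
    if item.on_hand > excess_level:
        return "EXCESS"
    return "OK"


items = [
    StockItem("SKU-001", on_hand=120, avg_daily_demand=10, lead_time_days=7, safety_stock=50),
    StockItem("SKU-002", on_hand=900, avg_daily_demand=5, lead_time_days=10, safety_stock=20),
    StockItem("SKU-003", on_hand=400, avg_daily_demand=10, lead_time_days=7, safety_stock=50),
]
for item in items:
    print(item.sku, inventory_alert(item))
```

Lead time appears in the reorder point. As a result, a supplier whose lead time lengthens automatically triggers earlier reorders for the items it supplies.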

Business Impact

- Reduced Stockouts and Excess Inventory, improving product availability while lowering carrying costs.

- Improved Supplier Relationships through data-backed performance evaluations and stronger delivery reliability.

- Optimized Logistics Costs by reducing shipping inefficiencies and minimizing delays.

- Enhanced Product Quality with better defect tracking, leading to fewer returns and stronger brand reputation.

- Greater Customer Satisfaction driven by reliable product availability and faster, more efficient delivery.

Technology Stack

- Data Integration & Preparation: SQL, Excel

- Visualization & Dashboards: Power BI / Tableau

- Data Analytics & Forecasting: Statistical models for demand forecasting and supplier performance analysis

- Automation: Alerts and notifications for inventory and supplier KPIs

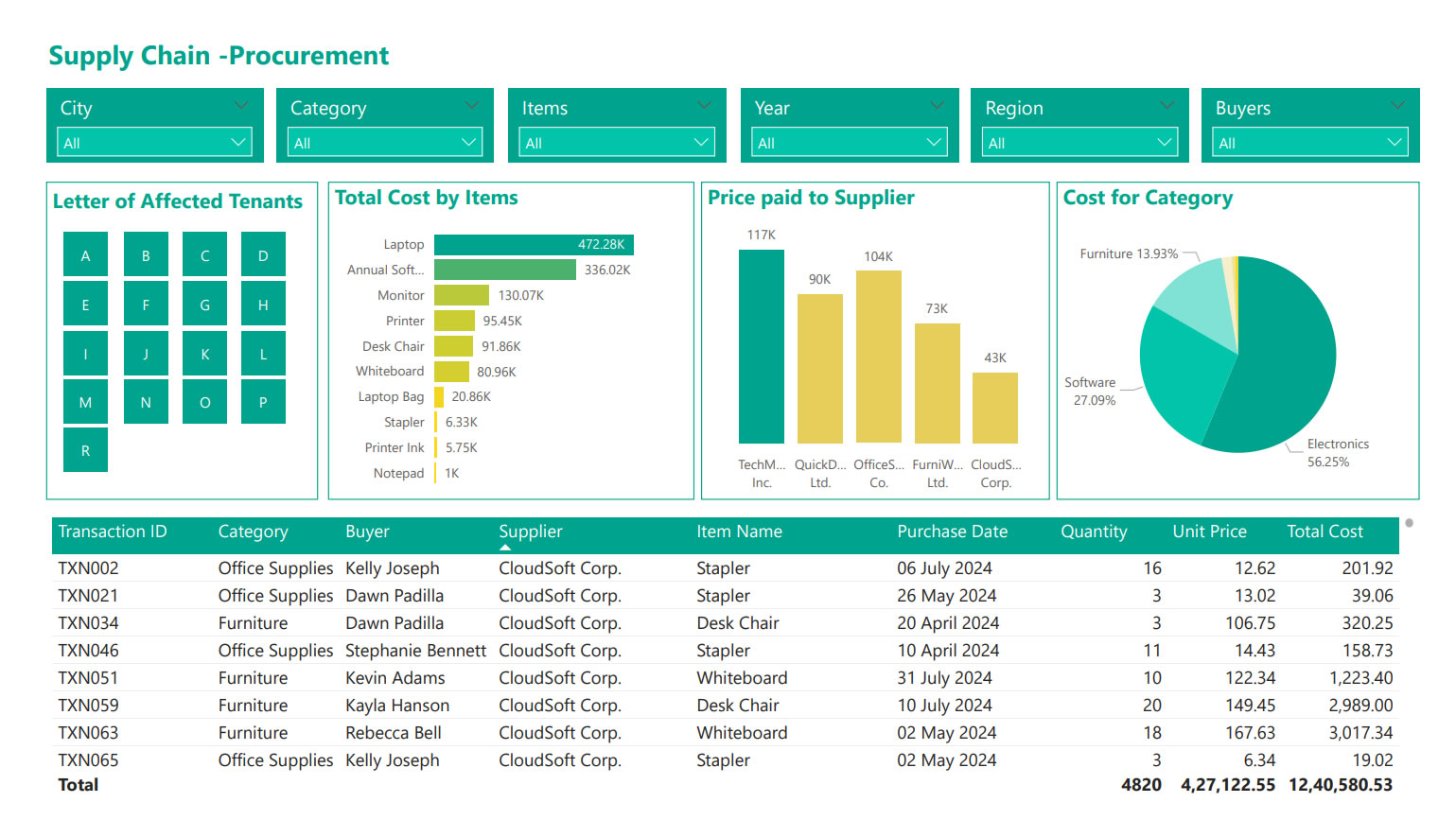

- Supply Chain: Procurement

The Challenge

- Lack of unified reporting and performance visibility across procurement operations.

- Manual preparation of reports caused delays in delivering actionable insights to senior management.

- Difficulty in consolidating and validating data across departments to ensure consistency.

- Limited visualization tools and lack of interactivity in reports.

Executive Summary

Scope of Project

- Track procurement process efficiency and performance metrics.

- Automate the generation of daily, weekly, monthly, and quarterly reports.

- Enable senior management to make informed business decisions through interactive dashboards.

Solution

- Data Analysis & Visualization

- Used Tableau to design multiple visualization types:

- Dual Axis Charts, Combo Charts, Pie & Bar Charts, Geographic & Heat Maps, Small Multiples.

- Implemented drill-through reports, hierarchies, and quick filters to enhance interactivity.

- Created calculated fields, parameters, and custom labels for precision in reporting.

- Data Integration & Automation

- Blended Excel-based data sources with Tableau for real-time updates.

- Designed and validated Tableau business scenarios to ensure accurate reporting.

- Published data extracts and dashboards to Power BI Online Services, enabling cloud access.

- Scheduled automated data refreshes on a daily, weekly, and monthly basis.

- MIS Reporting & Management Insights

- Developed interactive dashboards for procurement and client service metrics.

- Created clear and concise reporting formats for senior management reviews.

- Conducted weekly meetings to align data interpretation across departments.

Business Impact

| Impact Area | Results Achieved |
|---|---|
| Operational Efficiency | Reduced report preparation time by 60% through automation and pre-scheduled data extracts. |
| Decision-Making | Delivered actionable insights with interactive dashboards, enabling faster procurement decisions. |
| Data Accuracy | Improved consistency and trust in reporting with validated data and automated refreshes. |
| Process Optimization | Visual procurement trend analysis led to process improvements and better vendor negotiations. |
| Executive Visibility | Senior management gained real-time access to procurement performance metrics, enhancing strategic planning. |
| Collaboration | Weekly data review meetings created a standardized approach to procurement analytics across teams. |

Technology Environment

- Visualization: Tableau

- Data Source: Excel

- Publishing & Scheduling: Power BI Online Services

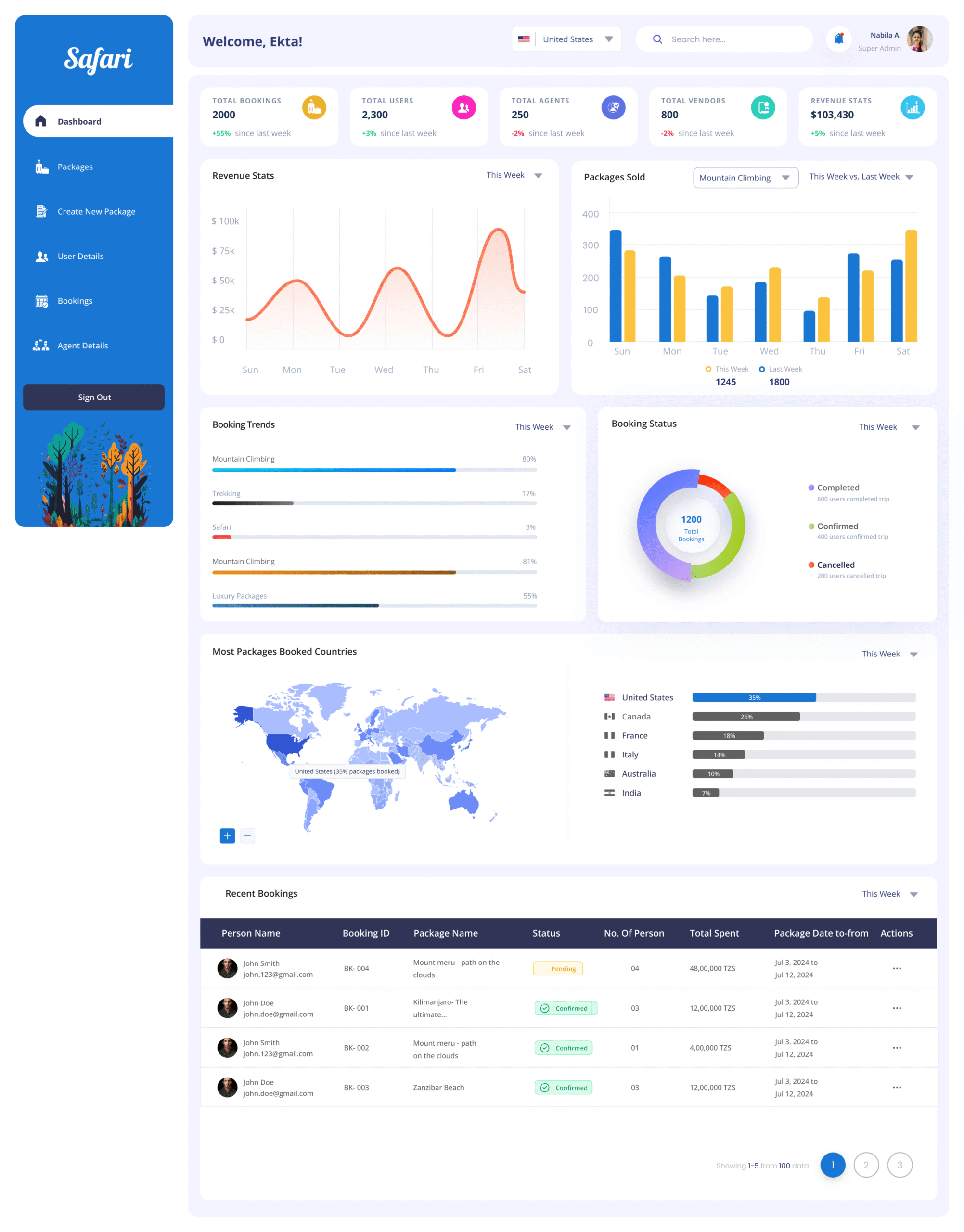

- “SuperStore Dashboard” for Retail Analytics

Industry:

The Challenge

- Fragmented sales data: Sales, product, customer, and regional data were spread across several systems (POS, inventory, CRM), making integrated analysis difficult.

- Slow report turnaround: Business users had to wait for periodic static reports to understand performance; real-time or near-real-time insights were lacking.

- Lack of product & regional visibility: Hard to spot which products are under-performing, which regions or stores are lagging, or how sales are trending over time.

- Difficulty in monitoring profitability: Margins, discount impacts, cost of goods sold, and returns were not visible in the same view, making profitability analysis cumbersome.

- Limited interactivity: Reports were largely static; users could not drill down, filter dynamically, or compare scenarios effectively.

Scope of Project

- Bring together data from multiple sources (sales, inventory, customers, returns, channels) into one unified view.

- Provide real-time or near real-time insights into sales trends, product performance by category, regional/store comparisons, customer segments, and profit margins.

- Offer interactive features, including filtering, drill-downs (e.g., by product, region, time), top/bottom product lists, and trend lines.

- Enable business users (store managers, merchandisers, senior management) to monitor KPIs such as sales growth, average order value, discount performance, returns rate, and inventory turnover.

- Provide visual tools to analyze promotions, seasonal effects, product category performance, and channel contributions.
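Several of the KPIs listed above are simple ratios over order-level data. The sketch below assumes a hypothetical, simplified `Order` record with sales already net of discounts. In the delivered solution, these would typically be implemented as Tableau calculated fields rather than in Python.

```python
from dataclasses import dataclass


@dataclass
class Order:
    order_id: str
    period: str     # e.g. "2024-Q1"
    sales: float    # net sales after discounts
    profit: float
    returned: bool


def period_kpis(orders: list[Order], period: str) -> dict[str, float]:
    """Headline KPIs for one period: sales, order count, AOV, profit margin, returns rate."""
    subset = [o for o in orders if o.period == period]
    if not subset:
        raise ValueError(f"no orders for period {period}")
    sales = sum(o.sales for o in subset)
    return {
        "sales": sales,
        "orders": len(subset),
        "avg_order_value": sales / len(subset),
        "profit_margin_pct": 100 * sum(o.profit for o in subset) / sales,
        "returns_rate_pct": 100 * sum(o.returned for o in subset) / len(subset),
    }


def growth_pct(current: float, previous: float) -> float:
    """Sales growth versus the prior period, in percent."""
    return (current - previous) * 100 / previous


orders = [
    Order("A1", "2024-Q1", 200.0, 40.0, False),
    Order("A2", "2024-Q1", 300.0, 30.0, True),
    Order("B1", "2024-Q2", 250.0, 50.0, False),
    Order("B2", "2024-Q2", 350.0, 70.0, False),
    Order("B3", "2024-Q2", 400.0, 20.0, False),
]
q1, q2 = period_kpis(orders, "2024-Q1"), period_kpis(orders, "2024-Q2")
print(q2)
print("sales growth %:", growth_pct(q2["sales"], q1["sales"]))  # 100.0
```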

Solution Provided

- Designed and built a Tableau Dashboard (“SuperStore Dashboard”) combining multiple views:

- Sales over Time: Trend lines and month-by-month comparisons.

- Region/Store Performance: Maps or bar charts comparing performance across geography.

- Product & Category Analysis: Top selling products, margin by category, discount impact.

- Customer Segmentation: Repeat vs new customers, average purchase size.

- Returns & Profitability: Return rates and margin erosion by discount or product type.

- Data integration setup:

- Combined data from POS, inventory, return systems, CRM.

- Data cleaning and transformation to standardize product/category naming and handle missing values.

- Dashboard interactivity:

- Filters (region, product category, time period).

- Drill down features (e.g. store → city → region).

- Parameter controls for user-selected scenarios (e.g. comparing periods, applying discount thresholds).

- Performance optimization:

- Pre-aggregated tables and extracts for faster loading.

- Incremental refreshes where possible.

- Deployment & sharing:

- Published dashboards to Tableau Server / Tableau Public for widest access.

- Role-based access: store managers vs regional managers vs senior executives.

Business Impact

| Area | Results / Improvements |
|---|---|
| Speed of Insights | Reports that used to take days to generate are now available in real time or near-real time, enabling faster decision-making. |
| Product & Regional Visibility | Under-performing products and regions are identified sooner; corrective actions (promotions, stock reallocation) implemented faster. |
| Profitability Awareness | Better visibility into discounts, returns, and cost structure improved margin management. |
| Enhanced Sales Performance | Increased sales growth through better alignment of inventory with demand, promotion effectiveness, and identifying high potential products. |
| Operational Efficiency | Reduced manual effort in reporting; fewer ad-hoc requests for data; dashboard reuse lowered load on data teams. |
| User Engagement & Decision Support | Business users (store/regional/senior management) adopted dashboard as a “single source of truth”; used daily to inform strategy. |

Tools & Technology Environment

- Visualization & BI Tool: Tableau Desktop, Tableau Server / Tableau Public

- Data Sources: POS (point of sale) systems, inventory management systems, CRM, returns data, product master data

- Data Preparation / ETL: Data cleaning and transformation (product names, categories, returns data) and standardization of time periods, possibly via SQL/database extracts or a data warehouse

- Performance Optimizations: Data extracts / aggregations, incremental refresh, optimized filters and parameter use

- User Access / Security: Role-based permissions, sharing via dashboards (server / public or private), filters at user level

- Retail Procurement Analytics Transformation

Industry:

The Challenge

- Providing senior management with monthly, quarterly, and half-yearly performance reports in a clear and consistent format.

- Ensuring data consistency across departments by sharing validated insights weekly.

- Extracting and preparing procurement data from ServiceNow web services, which required complex parsing and transformation before being usable.

- Managing data in formats unsuitable for direct visualization, requiring advanced data modeling and cleansing.

- Understanding domain-specific procurement metrics in order to create meaningful dashboards and KPIs.

Solution

A comprehensive data preparation and visualization framework was implemented, integrating Alteryx for data processing and Tableau/Power BI for reporting. The approach included:

- Data Extraction & Transformation

- Used Alteryx to connect with ServiceNow, parse unstructured data, and perform RegEx-based pattern matching.

- Modeled and transformed data into Tableau-compatible .TDE files for efficient use.

- Interactive Dashboards & Reports

- Designed dual-axis charts, combo charts, heat maps, geographic maps, small multiples, and drill-through reports to provide multi-dimensional insights.

- Developed calculated fields and parameters for custom KPIs aligned with procurement goals.

- Built interactive dashboards tailored to management requirements.

- Performance & MIS Reporting

- Automated generation of daily, weekly, and monthly procurement reports.

- Created MIS and performance dashboards for procurement and client service teams.

- Designed standardized reporting formats for consistent communication across departments.

- Publishing & Scheduling

- Published dashboards and data sources to Power BI Online Services.

- Scheduled refreshes (daily/weekly/monthly) to keep reports current and accurate.
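Alteryx's RegEx tool performed the pattern matching in this project. The same idea can be illustrated in Python. The description layout, the `PO-YYYY-NNNNN` number format, and the field names below are hypothetical, because real ServiceNow fields depend on how each instance is configured.

```python
import re

# Hypothetical free-text layout of a procurement request description.
PO_PATTERN = re.compile(
    r"(?P<po>PO-\d{4}-\d{5})\s*\|\s*Vendor:\s*(?P<vendor>[^|]+?)\s*\|\s*"
    r"Amount:\s*(?P<currency>[A-Z]{3})\s*(?P<amount>[\d,]+(?:\.\d{2})?)"
)


def parse_requests(descriptions: list[str]) -> tuple[list[dict], list[str]]:
    """Split raw descriptions into structured rows and rejects that need manual review."""
    parsed, rejects = [], []
    for text in descriptions:
        match = PO_PATTERN.search(text)
        if match is None:
            rejects.append(text)
            continue
        row = match.groupdict()
        row["amount"] = float(row["amount"].replace(",", ""))
        parsed.append(row)
    return parsed, rejects


records = [
    "PO-2024-00123 | Vendor: Acme Corp | Amount: USD 12,500.00 | Approved",
    "po raised by phone, details to follow",
    "Renewal PO-2024-00456 | Vendor: Globex | Amount: EUR 980",
]
rows, rejects = parse_requests(records)
print(rows)
print(rejects)
```

The sketch keeps non-matching records in a separate reject list instead of silently dropping them. This makes gaps visible during the weekly cross-department reviews.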

Business Impact

- Improved Procurement Efficiency: Senior management gained clear visibility into procurement KPIs, enabling faster and more accurate decision-making.

- Time Savings in Data Preparation: Automated data extraction and transformation with Alteryx reduced manual effort by over 40%.

- Faster Insights: Interactive dashboards allowed executives to drill down into procurement details instantly, cutting analysis turnaround times from weeks to hours.

- Consistency Across the Organization: Weekly reviews ensured that all departments had access to standardized, reliable data for planning and execution.

- Scalable Reporting Framework: The solution enabled easy addition of new data sources, KPIs, and visualization requirements without disrupting existing workflows.

Tools & Technology Environment

- Data Preparation: Alteryx (Parsing, RegEx, Data Extraction)

- Visualization: Tableau, Power BI

- Data Sources: ServiceNow Web Services

- Tableau Integration for a Leading Retail & Manufacturing Steel Supplier

Industry:

The Background

A leading retail and manufacturing steel supplier offers a wide range of steel products catering to multiple industry segments. With a robust distribution network and value-added services such as channel financing, doorstep delivery, and customised steel processing, the company serves both small and bulk buyers across Asia and North America.

The Challenge

The company generated large volumes of sales and operational data on a daily, weekly, and monthly basis, segmented by product type and location. The existing reporting process was fragmented, making it difficult for business teams to quickly analyse data, monitor performance, and make informed decisions. The company needed a powerful analytics platform integrated with its existing system to:

- Consolidate and analyse data across locations and product lines.

- Implement secure, role-based access to sensitive data.

- Improve reporting performance and reduce manual effort.

Scope of Project

- Tableau integration and configuration with the current platform.

- Development and testing of interactive dashboards.

- Matching Tableau Server authentication with the current platform.

- OLAP cube design, validation, and performance optimisation.

- Post-implementation support and maintenance.

Solution Delivered

The project involved the complete lifecycle implementation of Tableau analytics, ensuring seamless integration with the client’s existing systems. Key activities included:

- Custom Authentication & Security: Implemented Tableau Server authentication aligned with the current platform, along with cube-level and report-level security to safeguard sensitive information.

- Cube Design Validation: Verified all measures, dimensions, hierarchies, and calculated metrics for accuracy.

- Security Validation: Mapped cube roles to user groups and ensured correct access privileges.

- Performance Optimisation: Enhanced data processing speed and query performance for faster dashboard load times.

- Post-Implementation Support: Provided ongoing technical and user support for smooth adoption.
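The mapping of cube roles to user groups can be illustrated independently of Tableau. In this hypothetical sketch, directory groups map to roles, and roles map to the regions they may see. Any group without a mapped role grants nothing, so access is denied by default. The group names, role names, and region-level granularity are assumptions.

```python
from typing import Optional

# Hypothetical cube roles and the regions each may see (None = unrestricted).
ROLE_REGIONS: dict[str, Optional[frozenset]] = {
    "executive": None,
    "asia_sales": frozenset({"Asia"}),
    "na_sales": frozenset({"North America"}),
}
# Hypothetical mapping from directory user groups to cube roles.
GROUP_ROLES = {
    "grp-leadership": "executive",
    "grp-sales-apac": "asia_sales",
    "grp-sales-us": "na_sales",
}


def allowed_regions(user_groups: list[str]) -> Optional[set]:
    """Union of regions granted by the user's roles; None if any role is unrestricted."""
    regions: set = set()
    for group in user_groups:
        role = GROUP_ROLES.get(group)
        if role is None:
            continue  # groups without a mapped role grant nothing (deny by default)
        granted = ROLE_REGIONS[role]
        if granted is None:
            return None
        regions |= granted
    return regions


def filter_rows(rows: list[dict], user_groups: list[str]) -> list[dict]:
    """Return only the rows this user is entitled to see."""
    regions = allowed_regions(user_groups)
    if regions is None:
        return rows
    return [row for row in rows if row["region"] in regions]


sales = [{"region": "Asia", "tons": 120}, {"region": "North America", "tons": 80}]
print(filter_rows(sales, ["grp-sales-apac"]))  # [{'region': 'Asia', 'tons': 120}]
```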

Business Impact

- 60% Reduction in Report Generation Time: Automated dashboards replaced manual reporting, enabling near real-time data access.

- Improved Decision-Making: Management could now access role-specific, accurate insights in minutes instead of hours.

- Enhanced Data Security: Role-based authentication ensured that sensitive sales and operational data was only visible to authorised users.

- Increased Operational Efficiency: Faster performance and automated analytics allowed business teams to focus more on strategy rather than data preparation.

- Scalability for Growth: The integrated Tableau platform could now easily handle growing data volumes and new reporting requirements.

Tools & Technology Environment

- Data Preparation: Alteryx (Parsing, RegEx, Data Extraction)

- Visualization: Tableau, Power BI

- Data Sources: ServiceNow Web Services

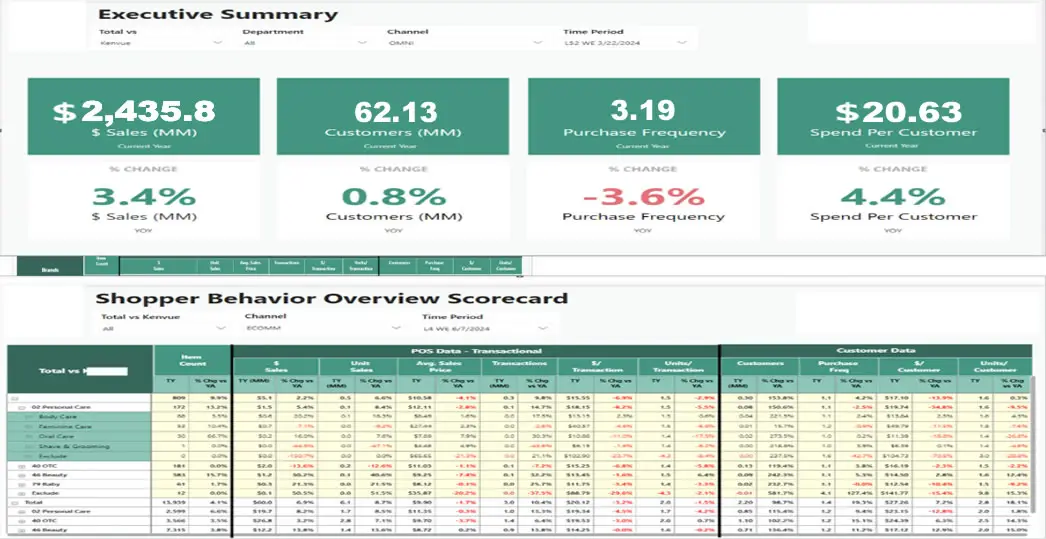

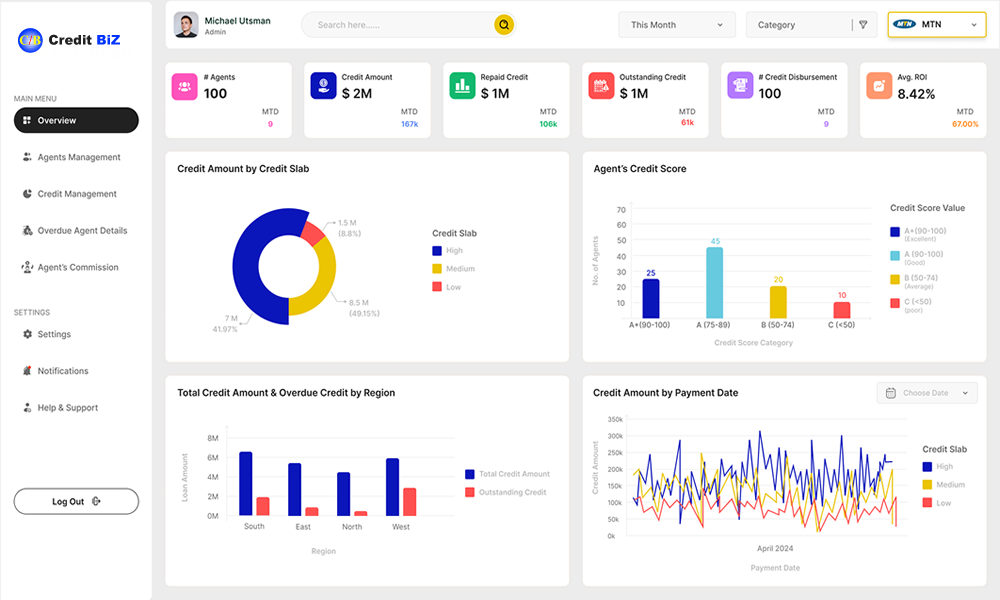

- Sales

The Challenge

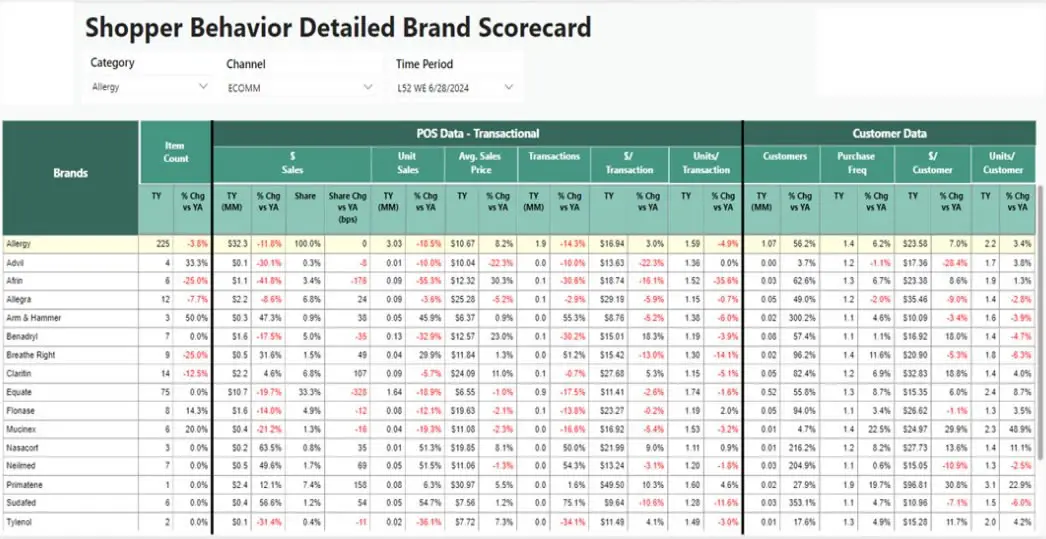

- For our client's Shopper Insights project, we built multiple Power BI reports that provide in-depth transactional and customer data, offering insights into brand-level performance across filters such as categories, channels, and time periods.

- The reports compare total market vs. brand performance at various levels, providing insights into transactions and customer purchase frequency.

- They comprise aggregated metrics including sales, customer count, purchase frequency, and spend per customer, alongside their respective percentage changes.

- The insights are drawn from data downloaded from the Luminate portal: a total of 700 Excel reports containing categorical data across different segmentations and time periods.
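The shopper metrics above reduce to a few aggregations per brand plus a total-market roll-up. This sketch makes two assumptions: purchase frequency means transactions per unique customer, and spend per customer means sales divided by unique customers. The actual Luminate definitions may differ. The in-memory tuples stand in for the 700 Excel exports.

```python
from collections import defaultdict


def shopper_metrics(transactions: list[tuple[str, str, float]]) -> dict[str, dict[str, float]]:
    """Aggregate (brand, customer_id, amount) rows into per-brand and total-market metrics."""
    sales: dict = defaultdict(float)
    trips: dict = defaultdict(int)
    customers: dict = defaultdict(set)
    for brand, customer_id, amount in transactions:
        # Each transaction counts toward its brand and toward the total market.
        for key in (brand, "Total Market"):
            sales[key] += amount
            trips[key] += 1
            customers[key].add(customer_id)
    return {
        key: {
            "sales": sales[key],
            "customer_count": len(customers[key]),
            "purchase_frequency": trips[key] / len(customers[key]),
            "spend_per_customer": sales[key] / len(customers[key]),
        }
        for key in sales
    }


def pct_change(current: float, previous: float) -> float:
    """Percentage change versus the comparison period."""
    return (current - previous) * 100 / previous


this_year = [("Brand X", "c1", 10.0), ("Brand X", "c1", 15.0), ("Brand X", "c2", 5.0), ("Brand Y", "c3", 20.0)]
last_year = [("Brand X", "c1", 12.0), ("Brand X", "c2", 8.0), ("Brand Y", "c3", 10.0), ("Brand Y", "c4", 10.0)]
ty, ly = shopper_metrics(this_year), shopper_metrics(last_year)
for metric in ("sales", "spend_per_customer", "purchase_frequency"):
    print(metric, pct_change(ty["Brand X"][metric], ly["Brand X"][metric]))
```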

Scope of Project

- Automate and replicate the manual process of extracting Excel reports, transforming them, and refreshing Power BI dashboards on a weekly cadence.

- Deliver a tool that leverages transactional and customer data to provide insights into brand performance across channels and time periods.

Solution Delivered

- Built an automated, streamlined process to replace the manual extraction, transformation, and refresh cycle.

- Developed an optimized data model that consolidated the data into a single source and improved report performance.

- Built a Power BI dashboard based on the provided UI design.

- Quality Process Optimization: Enhanced operational workflows, maintained consistent quality, and ensured compliance with industry standards through rigorous quality control.

- Workload Reduction: Streamlined procedures and automated systems to reduce workload, achieving significant cost savings by minimizing rework and waste.

- Eliminated Manual Errors: Implemented robust checks and automation to eliminate human errors, increasing accuracy and reliability and improving overall performance.
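The consolidation step, in which many downloaded reports with different segmentations and time periods are merged into a single source before the dashboards refresh, could be sketched in Python (part of the project's stack) roughly as follows. This is an illustration under stated assumptions, not the delivered pipeline: it assumes each report has already been exported to CSV (the project worked with Excel files and Alteryx, and reading `.xlsx` directly would need a third-party library), that file names follow a hypothetical `<category>__<segment>__<period>.csv` convention, and that `consolidate_reports` is a hypothetical helper. The Selenium download step is not shown.

```python
import csv
from pathlib import Path


def consolidate_reports(folder: Path, out_file: Path) -> int:
    """Union every CSV report in `folder` into one long-format table.

    Hypothetical convention: file names look like
    '<category>__<segment>__<period>.csv', so those attributes are
    attached to every row as extra columns of the single source table.
    Returns the number of data rows written.
    """
    rows = []
    for path in sorted(folder.glob("*.csv")):
        parts = path.stem.split("__")
        if len(parts) != 3:
            # Skip files that do not follow the assumed naming convention.
            continue
        category, segment, period = parts
        with path.open(newline="", encoding="utf-8") as fh:
            for record in csv.DictReader(fh):
                record.update(category=category, segment=segment, period=period)
                rows.append(record)

    if not rows:
        return 0

    # Header = union of all columns across reports, in first-seen order.
    fieldnames = []
    for record in rows:
        for key in record:
            if key not in fieldnames:
                fieldnames.append(key)

    with out_file.open("w", newline="", encoding="utf-8") as fh:
        # restval fills columns that a given report did not contain.
        writer = csv.DictWriter(fh, fieldnames=fieldnames, restval="")
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

Writing one long table with the segmentation and period as columns, rather than one table per report, is what lets a single data model filter by category, segment, and time period.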

Business Impact

- Developed multiple Power BI reports to provide in-depth insights into transactional and customer data.

- Analyzed brand-level performance across categories, sales channels, and time periods.

- Reports offer comparative views of total market vs. brand performance, highlighting customer purchase behaviors.

- Key metrics include sales, customer count, purchase frequency, and spend per customer, with percentage changes.

- Sourced data from 700 Excel reports downloaded from the Luminate portal, containing segmented and time-based data.

- Enabled stakeholders to make data-driven decisions on brand strategies and market positioning.

- Provided clear, actionable insights into customer trends, helping to optimize resource allocation and enhance performance tracking.

Technology Stack

- Alteryx, Python, Selenium

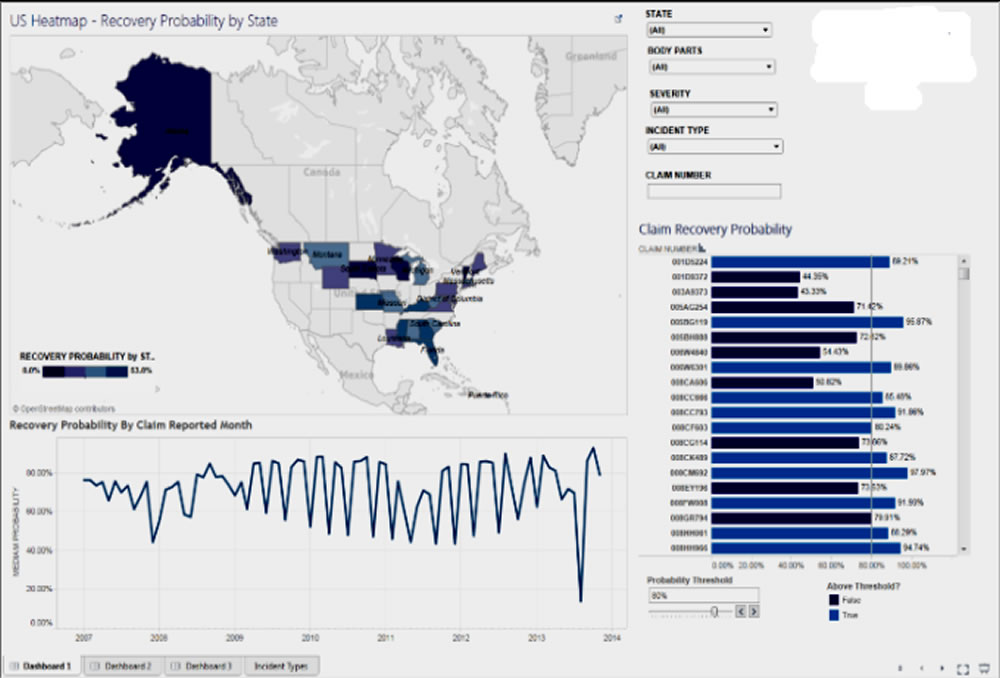

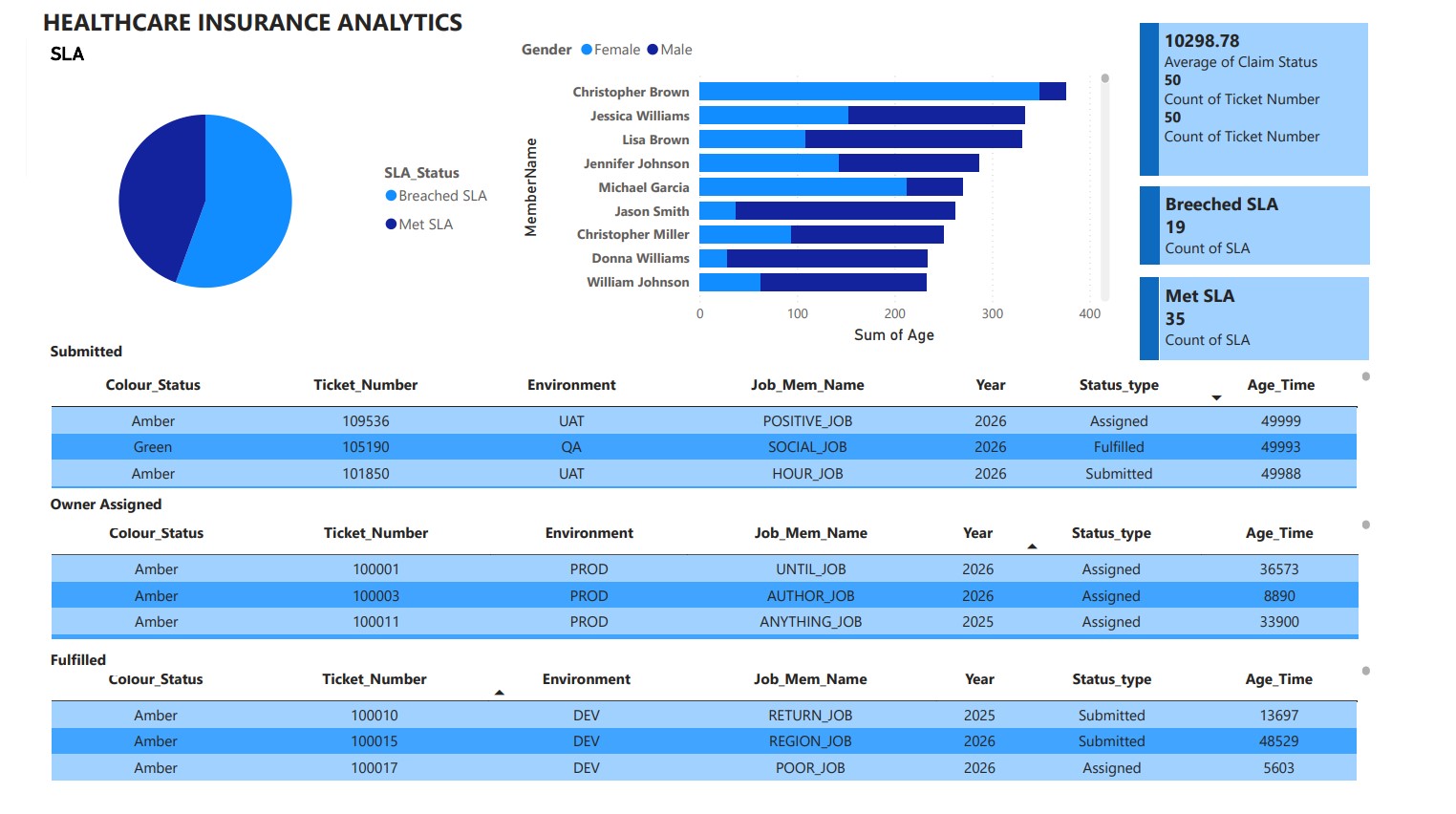

- Healthcare Insurance Analytics

Industry

Healthcare Insurance

The Challenge

- Developing interactive dashboards in Power BI / Power View.

- Integrating dashboards into a SharePoint portal for centralized access.

- Enabling scheduled report refreshes at defined intervals.

- Providing business users with role-specific reporting and secure authentication.

- Tracking and analyzing ticket resolution times, success rates, and failure trends for service request management.

Solution

- Power BI Integration with SharePoint

- Architected and developed dashboards in Power BI / Power View, embedding them into SharePoint via web parts.

- Configured different authentication levels to ensure user-specific access.

- Operational & Service Request Analytics

- Designed dashboards to track ticket submissions, pending items, closed requests, and resolution timeframes.

- Implemented success vs. failure rate tracking for service tickets.

- Automated Refresh & Scheduling

- Enabled scheduled report refreshes to ensure up-to-date data availability.

- Ad-Hoc & Role-Based Reporting

- Created business-user-specific dashboards tailored to operational needs.

- Provided ad-hoc reporting capabilities for deeper analysis.
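The service-request measures described above (submitted, pending, and closed counts, resolution timeframes, and success vs. failure rates) are simple aggregations. A minimal Python sketch follows; in the project these measures were built in Power BI over SQL Server data, and the `Ticket` fields, the "success"/"failure" outcome values, and `ticket_summary` are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import List, Optional


@dataclass
class Ticket:
    """Hypothetical service-request record."""
    ticket_id: str
    submitted: datetime
    closed: Optional[datetime] = None  # None while the ticket is pending
    outcome: Optional[str] = None      # assumed values once closed: "success" / "failure"


def ticket_summary(tickets: List[Ticket]) -> dict:
    """Counts, average resolution time in hours, and success/failure rates."""
    closed = [t for t in tickets if t.closed is not None]
    hours = [(t.closed - t.submitted) / timedelta(hours=1) for t in closed]
    successes = sum(1 for t in closed if t.outcome == "success")
    failures = sum(1 for t in closed if t.outcome == "failure")
    decided = successes + failures
    return {
        "submitted": len(tickets),
        "pending": len(tickets) - len(closed),
        "closed": len(closed),
        "avg_resolution_hours": mean(hours) if hours else None,
        # Rates are computed over closed tickets with a recorded outcome.
        "success_rate": successes / decided if decided else None,
        "failure_rate": failures / decided if decided else None,
    }
```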

Business Impact

- Improved Operational Efficiency: Real-time visibility into ticket resolution times helped reduce average closure time by 30%.

- Centralized Access to Insights: SharePoint integration provided a single, secure portal for all reporting needs, reducing time spent searching for information.

- Enhanced Decision-Making: Role-based dashboards empowered managers and operational teams to address service issues proactively.

- Increased Data Accuracy: Automated refresh schedules ensured that all dashboards were updated with the latest available data.

- Scalability for Future Growth: The solution allowed easy addition of new reports and dashboards without major redevelopment.

Technical Architecture

- BI & Visualization: Power BI, Power View

- Collaboration: SharePoint

- Database: SQL Server 2008

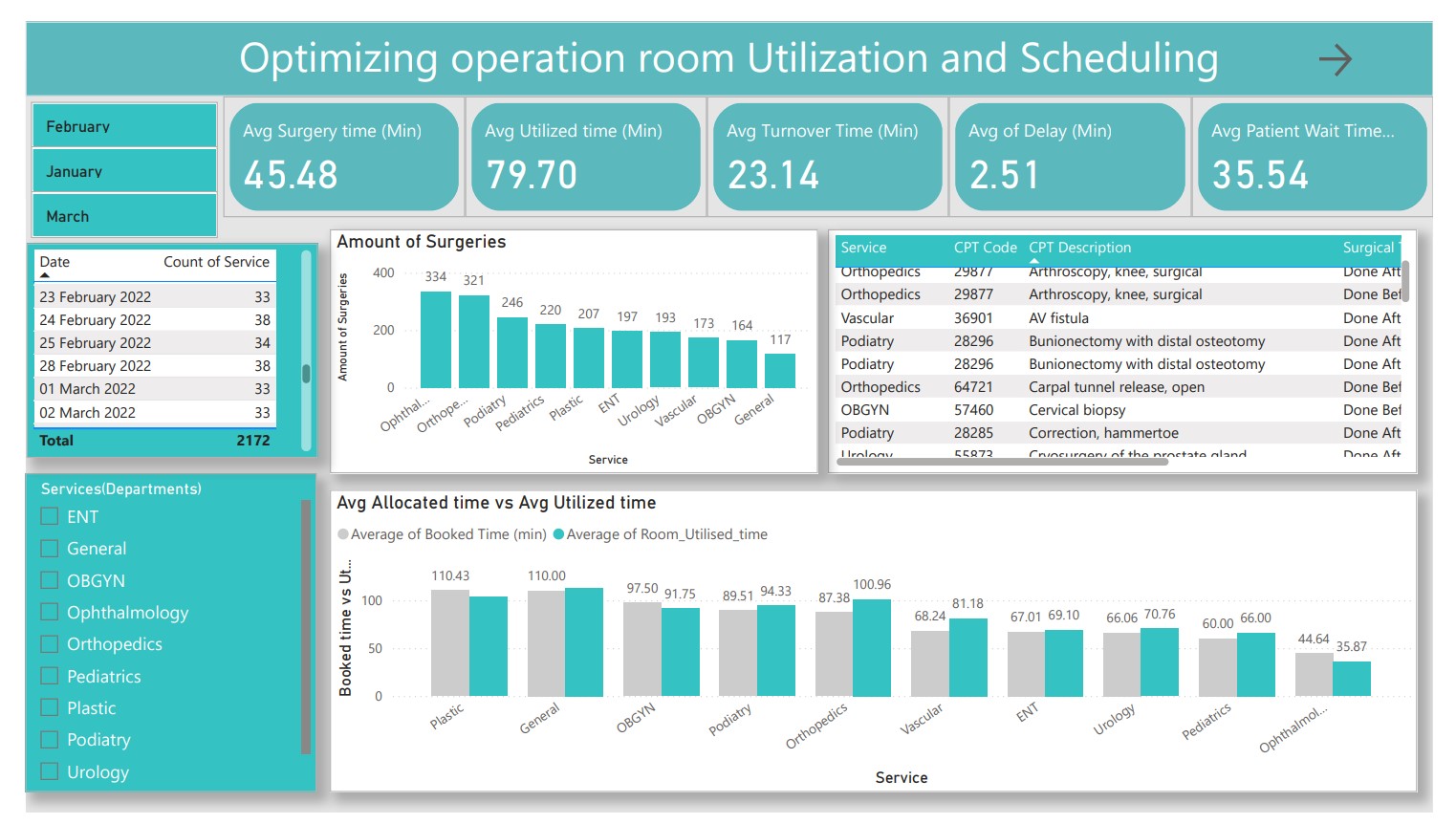

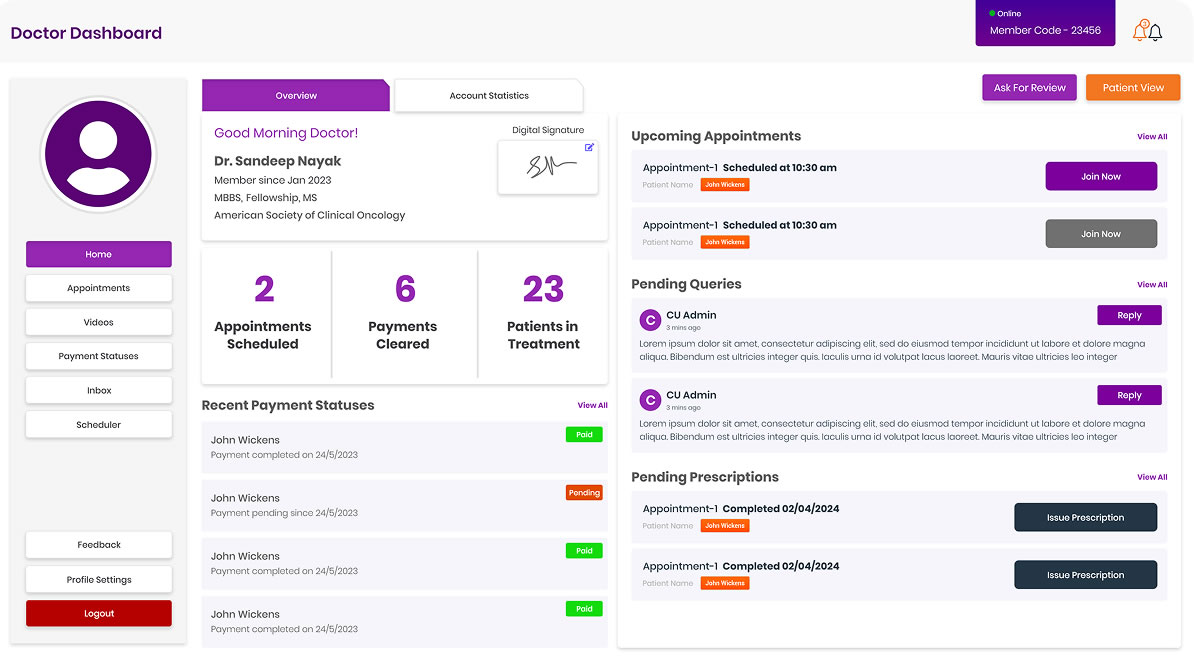

- Healthcare Operating Room (OR) Utilization

Industry

Healthcare

The Challenge

- Underutilization of Operating Rooms (ORs): Idle time and inefficient use of available ORs.

- Scheduling Inefficiencies: Manual scheduling led to fragmented information across systems.

- Extended Turnover Times: Slow changeovers caused delays between surgeries.

- Patient Risks: Delays increased clinical risk and patient dissatisfaction.

- Lack of Real-Time Visibility: Limited ability to track and monitor OR schedules dynamically.

Scope of Project

- Implement a data-driven solution to optimize operating room utilization.

- Provide real-time visibility into OR scheduling, staff allocation, and delays.

- Enable management to identify peak usage, idle times, and resource constraints.

- Incorporate predictive analytics to forecast demand and improve planning.

Solution Provided

A comprehensive Power BI dashboard was developed to unify operating room utilization data, surgical schedules, and staff assignments into a single view. The project involved preparing and integrating data from multiple sources, ensuring consistency and accuracy before visualization.

The dashboard was designed to track key KPIs such as utilization rates, turnover times, and schedule adherence, while offering real-time monitoring that enabled hospital administrators to quickly adjust resources and reduce delays.
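As a minimal sketch of how these KPIs might be computed (not the project's DAX measures), the Python below assumes: utilization is in-room minutes divided by available OR minutes; turnover is the gap between one patient leaving a room and the next patient entering the same room on the same day; and schedule adherence is the share of cases starting within ±15 minutes of the scheduled time. The `Case` record, the `or_kpis` function, and the 15-minute tolerance are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from itertools import groupby
from typing import List


@dataclass
class Case:
    """Hypothetical surgical case record."""
    room: str
    scheduled_start: datetime
    wheels_in: datetime   # patient enters the OR
    wheels_out: datetime  # patient leaves the OR


def _minutes(delta: timedelta) -> float:
    return delta / timedelta(minutes=1)


def _room_day(case: Case):
    return (case.room, case.wheels_in.date())


def or_kpis(cases: List[Case], available_minutes: float, tolerance_min: float = 15) -> dict:
    """Utilization, mean turnover and schedule adherence under assumed definitions."""
    in_room = sum(_minutes(c.wheels_out - c.wheels_in) for c in cases)

    # Sort by room and start time so each room-day forms one contiguous group.
    ordered = sorted(cases, key=lambda c: (c.room, c.wheels_in))
    turnovers = []
    for _, group in groupby(ordered, key=_room_day):
        day_cases = list(group)
        for prev, nxt in zip(day_cases, day_cases[1:]):
            turnovers.append(_minutes(nxt.wheels_in - prev.wheels_out))

    on_time = sum(
        1 for c in cases
        if abs(_minutes(c.wheels_in - c.scheduled_start)) <= tolerance_min
    )
    return {
        "utilization": in_room / available_minutes if available_minutes else None,
        "mean_turnover_min": sum(turnovers) / len(turnovers) if turnovers else None,
        "schedule_adherence": on_time / len(cases) if cases else None,
    }
```

Here `available_minutes` stands for the total staffed time across the rooms in the period, for example 2 rooms × 8 hours × 60 minutes = 960.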

To support long-term planning, predictive analytics were incorporated, leveraging historical patterns to forecast OR demand and identify recurring bottlenecks. The dashboards were also built with interactive features, allowing users to drill down by department, surgeon, or time period, transforming reporting into a powerful decision-support tool.
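The source does not specify the forecasting model. One simple way to "leverage historical patterns" is a seasonal-naive baseline: forecast each future day's case volume as the mean of the same weekday over the most recent weeks. The Python below is that baseline, offered only as an illustration; `forecast_or_demand`, the daily case-count input, and the 4-week window are assumptions.

```python
from collections import defaultdict
from datetime import date, timedelta
from statistics import mean
from typing import Dict


def forecast_or_demand(
    history: Dict[date, int], start: date, days: int, weeks: int = 4
) -> Dict[date, float]:
    """Forecast daily OR case counts with a day-of-week moving average.

    `history` maps date -> number of cases performed that day (hypothetical input).
    Each forecast date gets the mean count of the same weekday within the
    most recent `weeks` weeks of history.
    """
    if not history:
        return {}
    last = max(history)
    window_start = last - timedelta(weeks=weeks) + timedelta(days=1)

    # Collect recent observations per weekday (0 = Monday ... 6 = Sunday).
    by_weekday = defaultdict(list)
    for day, count in history.items():
        if window_start <= day <= last:
            by_weekday[day.weekday()].append(count)

    forecast = {}
    for offset in range(days):
        target = start + timedelta(days=offset)
        observed = by_weekday.get(target.weekday())
        # Weekdays with no recent history (e.g. no weekend lists) forecast to 0.
        forecast[target] = mean(observed) if observed else 0.0
    return forecast
```

A baseline like this is mainly useful as a yardstick: any richer predictive model should beat it before it is trusted for planning.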

- Developed a Power BI dashboard integrating OR utilization data, surgical schedules, and staff assignments.

- Data Preparation: Collected, cleaned, and transformed data from multiple sources using Excel and integrated into Power BI.

- KPI Tracking: Monitored utilization rates, turnover times, and adherence to planned schedules.

- Real-Time Monitoring: Enabled quick adjustments to scheduling and resource allocation.

- Predictive Analytics: Forecasted OR demand based on historical usage patterns.

- Interactive Dashboards: Allowed hospital management to analyze data, reduce delays, and make informed decisions.

Business Impact

- Increased OR Utilization: Reduced idle time and improved resource usage.

- Improved Revenue & Efficiency: Optimized scheduling increased throughput, resulting in higher operational efficiency.

- Reduced Patient Wait Times: Faster scheduling and reduced delays improved patient satisfaction.

- Enhanced Decision-Making: Real-time dashboards empowered leadership with actionable insights.

- Future-Readiness: Predictive analytics provided a foundation for continuous process improvements.

Technical Architecture

- Visualization Tool: Power BI (with DAX functions, calculated columns, interactive dashboards).

- Data Source: Excel, hospital scheduling systems.

- Data Processing: Data cleaning, transformation, and integration workflows.

- Analytics: Real-time dashboards, KPI visualization, predictive analytics models.

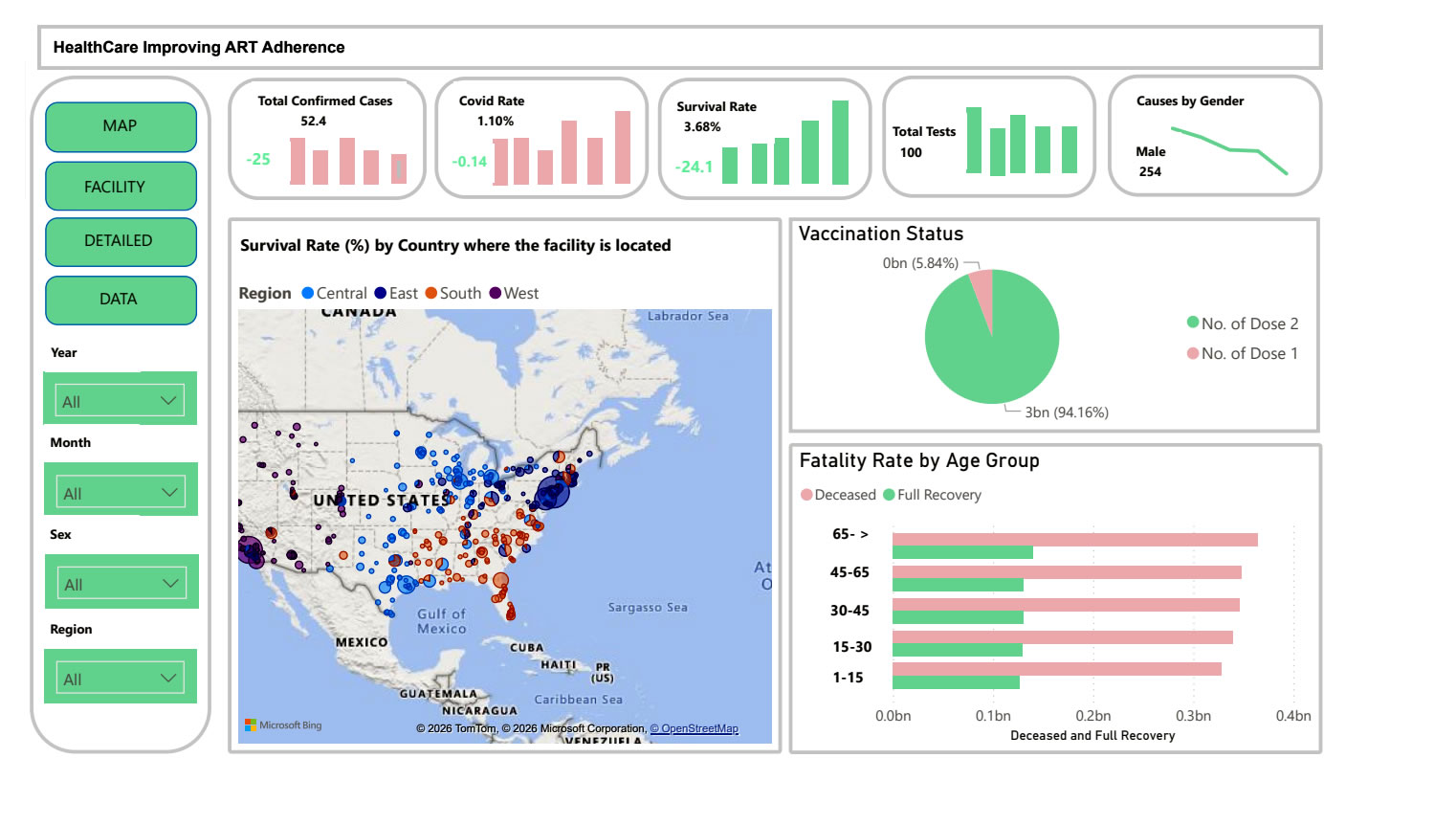

- Healthcare: Improving Antiretroviral Therapy (ART) Adherence

Challenges

Scope of Project

The project aimed to develop a real-time analytics solution that would enable healthcare providers to track ART adherence, monitor patient well-being, and manage the impact of external disruptions such as pandemics on treatment continuity. The focus was on creating a standardized, data-driven approach to improve patient support, optimize resource allocation, and ensure consistent healthcare delivery across all treatment centers.

Solution Provided

- A centralized Power BI dashboard was designed to bring together ART adherence data, patient well-being indicators, vaccination records, and regional performance metrics. This dashboard provided a unified view of treatment outcomes in near real time.

- Data preparation and integration involved consolidating information from multiple sources such as patient records, appointment logs, and survey data. This ensured that all metrics were accurate, up-to-date, and consistent.

- KPI monitoring allowed providers to track adherence levels, vaccination coverage, and treatment continuity, while automated alerts flagged at-risk patients requiring immediate follow-up.

- Power Apps forms were deployed to simplify data entry for healthcare staff, enabling quick updates on patient interactions, adherence checks, and follow-up visits.

- Interactive features allowed healthcare managers to drill down into specific clinics, demographic groups, or treatment outcomes, enabling precise interventions.

- Predictive analytics were applied to identify patients most likely to default on treatment, helping providers take preventive action.
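The automated at-risk alerts can be expressed as simple rules over adherence data. The Python below is a rule-based sketch, not the predictive model mentioned above. It assumes adherence is measured as doses taken divided by doses prescribed over a review period, uses a 95% alert threshold and a "more than one missed appointment" rule purely as illustrative assumptions, and the `PatientPeriod` record and `flag_at_risk` function are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PatientPeriod:
    """Hypothetical per-patient summary for one review period."""
    patient_id: str
    doses_prescribed: int
    doses_taken: int
    missed_appointments: int


def flag_at_risk(
    records: List[PatientPeriod],
    adherence_threshold: float = 0.95,  # illustrative threshold (assumption)
    max_missed: int = 1,                # illustrative rule (assumption)
) -> List[Tuple[str, List[str]]]:
    """Return (patient_id, reasons) for every patient who needs follow-up."""
    alerts = []
    for r in records:
        reasons = []
        if r.doses_prescribed > 0:
            adherence = r.doses_taken / r.doses_prescribed
            if adherence < adherence_threshold:
                reasons.append(f"adherence {adherence:.0%}")
        if r.missed_appointments > max_missed:
            reasons.append(f"{r.missed_appointments} missed appointments")
        if reasons:
            alerts.append((r.patient_id, reasons))
    return alerts
```

Returning the reasons alongside each patient ID lets the follow-up team see why an alert fired, rather than just that it fired.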

Business Impact

- Improved adherence rates, reducing the risk of treatment failure and improving long-term health outcomes.

- Enhanced patient support, with early identification of mental health challenges such as anxiety and depression.

- Stronger continuity of care, with vaccination tracking and treatment monitoring reducing the risk of disruption.

- Efficient resource allocation, ensuring services were directed to the areas and patients that needed them most.

- Proactive decision-making, shifting the model from reactive crisis management to proactive healthcare delivery.

Technical Architecture

- Visualization & Analytics: Power BI (real-time dashboards, KPI monitoring)

- Data Collection & Entry: Microsoft Power Apps (for staff data input and patient updates)

- Data Storage & Collaboration: SharePoint (central repository and data sharing)

- Data Processing & Integration: Excel and survey datasets integrated into the BI platform

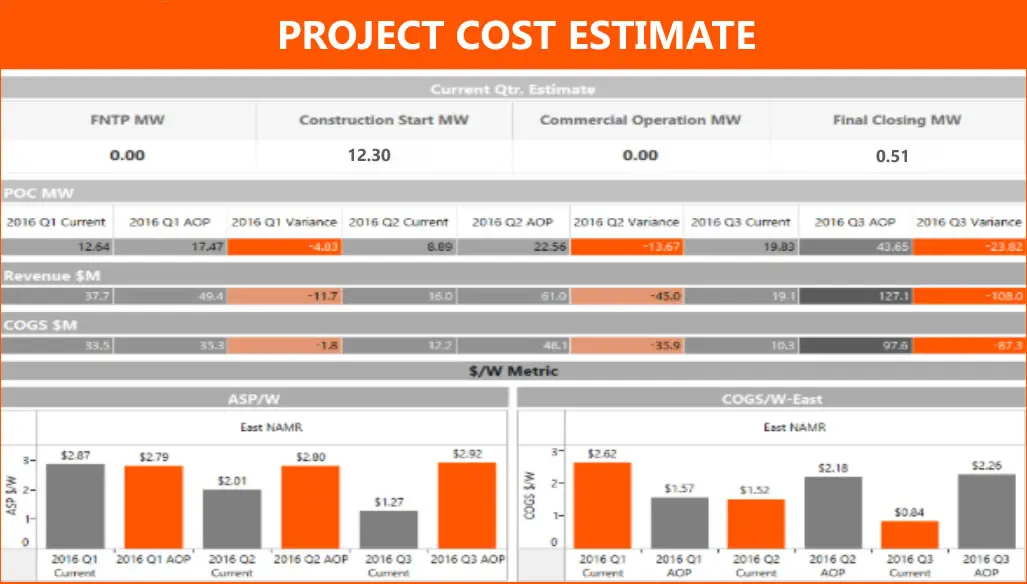

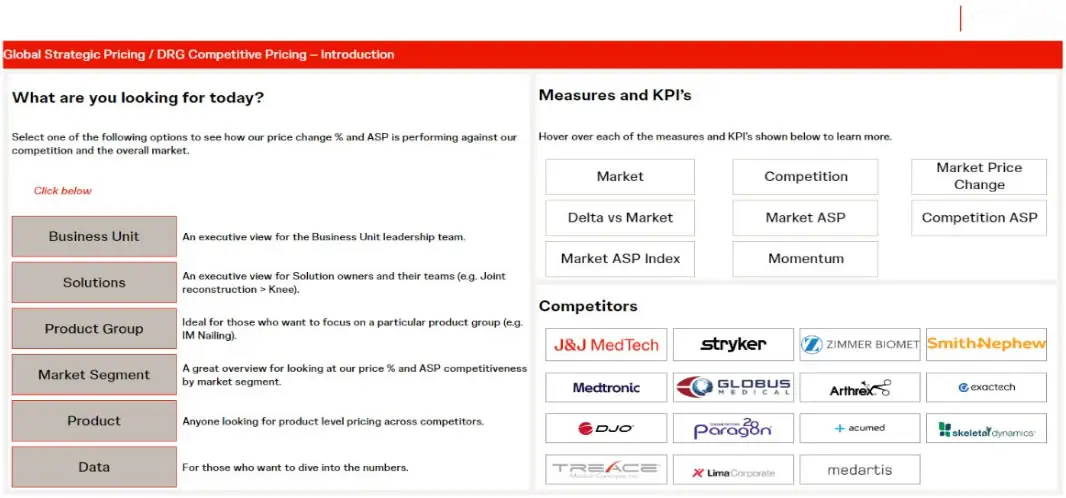

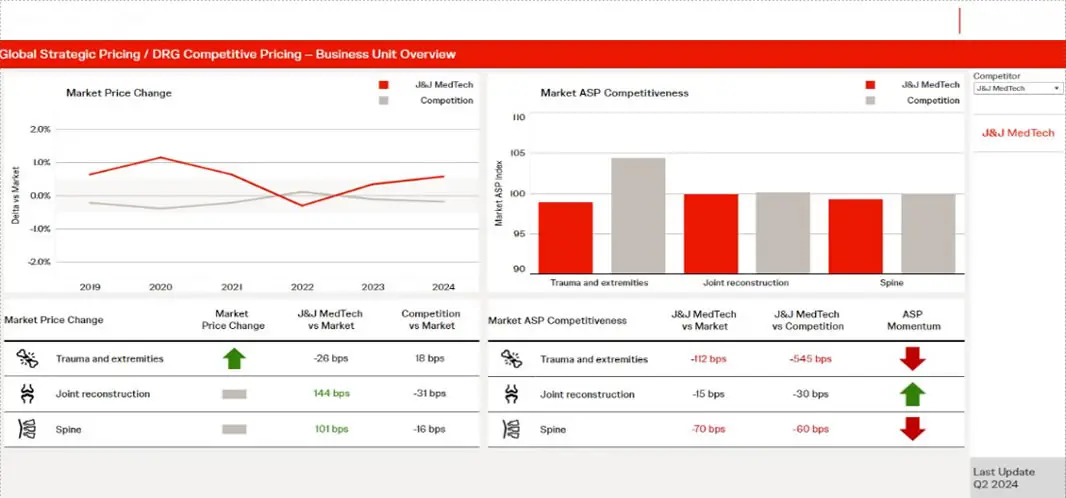

- Competitive Pricing Analytics

Challenges

The MedTech business faced significant hurdles in monitoring and comparing its pricing performance with competitors. There was limited visibility into Average Selling Price (ASP) and market pricing trends across platforms and regions. Competitor data was fragmented and difficult to access, making it challenging for business stakeholders to align their pricing strategies with market realities.

Without an integrated system, teams were spending significant time gathering data manually from disparate vendors and validating it, which delayed decision-making. The lack of real-time, reliable insights also reduced the ability to react quickly to market changes or competitive moves.

Scope of Project

- Deliver competitive ASP and market pricing comparisons at both the platform and overall business level.

- Enable leadership and sales teams to analyze performance against competitors on a yearly basis.

- Provide intuitive and interactive visualizations to support faster, data-driven decision-making.

- Establish a scalable and reliable architecture for continuous market insights.

Solution Provided

- Data Integration and Preparation

- Collected and consolidated pricing and market data from multiple external vendors such as DRG and ECRI.

- Data was ingested into Amazon Redshift, where it was standardized, cleaned, and validated.

- Additional validation and cross-checks were performed using SQL queries and Excel-based rules to ensure accuracy.
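SQL cross-checks of the kind described above might look like the following. So that the sketch is self-contained, it runs against an in-memory SQLite table through Python's `sqlite3` module, whereas the project's data lived in Amazon Redshift. The `vendor_prices` table, its columns, and the three rules (missing values, non-positive values, duplicate vendor/country/product/year rows) are hypothetical.

```python
import sqlite3
from typing import Dict

# Hypothetical validation rules: each query returns a count of violations.
CHECKS = {
    "missing_values": """
        SELECT COUNT(*) FROM vendor_prices
        WHERE sales IS NULL OR volume IS NULL
    """,
    "non_positive_values": """
        SELECT COUNT(*) FROM vendor_prices
        WHERE sales <= 0 OR volume <= 0
    """,
    "duplicate_keys": """
        SELECT COUNT(*) FROM (
            SELECT vendor, country, product, year
            FROM vendor_prices
            GROUP BY vendor, country, product, year
            HAVING COUNT(*) > 1
        ) AS dup
    """,
}


def run_checks(conn: sqlite3.Connection) -> Dict[str, int]:
    """Run every check and return {check_name: number_of_violations}."""
    return {name: conn.execute(sql).fetchone()[0] for name, sql in CHECKS.items()}
```

Keeping each rule as a named query makes it easy to add checks for new vendors or geographies without touching the runner.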

- Visualization and Analytics

- A Tableau dashboard was designed to display key pricing metrics, including:

- Market Price (sales/volume by country, product hierarchy, and year).

- Construct ASP and ASP momentum.

- Competitive comparisons for ASP vs competition, both current and year-over-year.

- Dashboards were made interactive, allowing users to filter by country, region, platform, and competitor to quickly analyze specific market segments.
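The pricing metrics above can be sketched as small functions. Market Price follows the dashboard's stated definition (sales divided by volume), computed from summed sales and volume so that high-volume rows carry more weight than a plain average of prices would give them. The ASP-vs-competition and ASP-momentum formulas are assumptions (percentage gap to a competitor's ASP, and year-over-year percentage change). Construct ASP is not modeled because its definition is not given, and all function names are hypothetical.

```python
from typing import Iterable, Optional, Tuple


def market_price(sales: float, volume: float) -> Optional[float]:
    """Market price = sales / volume; None when there is no volume."""
    return sales / volume if volume else None


def market_price_from_rows(rows: Iterable[Tuple[float, float]]) -> Optional[float]:
    """Aggregate (sales, volume) rows as total sales / total volume."""
    rows = list(rows)
    return market_price(sum(s for s, _ in rows), sum(v for _, v in rows))


def asp_gap_pct(own_asp: float, competitor_asp: float) -> Optional[float]:
    """Assumed definition: own ASP premium (+) or discount (-) vs. a competitor, in %."""
    if not competitor_asp:
        return None
    return (own_asp - competitor_asp) / competitor_asp * 100


def asp_momentum_pct(asp_this_year: float, asp_prior_year: float) -> Optional[float]:
    """Assumed definition: year-over-year % change in ASP."""
    if not asp_prior_year:
        return None
    return (asp_this_year - asp_prior_year) / asp_prior_year * 100
```

For instance, rows of (1000, 10) and (4000, 20) give a market price of 5000 / 30 ≈ 166.67 rather than the simple average of 150.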

- Business Usability and Insights

- Provided a customer-centric market view, allowing pricing and sales teams to align strategies with competitive benchmarks.

- Incorporated ASP momentum tracking to evaluate changes against prior years and adjust pricing tactics.

- Enabled stakeholders to access dashboards through Tableau Server, ensuring real-time accessibility and collaboration across global teams.

- Scalability and Future Readiness

- The architecture was designed for easy expansion to include new vendors, additional geographies, and updated KPIs.

- Automated refreshes ensured that the dashboards always reflected the most current market data.

Business Impact

- Faster and Better Pricing Decisions: Decision-makers gained the ability to compare ASPs across competitors instantly, rather than relying on delayed manual reports.

- Improved Market Responsiveness: Real-time dashboards allowed pricing teams to react quickly to competitor moves and adjust strategies to maintain market competitiveness.

- Operational Efficiency: Reduced manual data collection and validation efforts, freeing up resources for deeper strategic analysis.