- Speech-to-Text Gen AI Use Case: Turning Voice Data into Searchable, Actionable Insights

Business Challenge/Problem Statement

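Organizations accumulate large volumes of voice data from customer calls, meetings, and recorded media, but converting that audio into accurate, usable text remains a bottleneck. The main problems are:

- Inefficient Data Analysis: Manual transcription and analysis of voice data are slow, preventing timely insights into customer sentiment, operational inefficiencies, or market trends.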

- Suboptimal Customer Service: Inability to quickly analyze customer calls for common issues, agent performance, or compliance risks leads to missed opportunities for service improvement and personalized support.

- Limited Accessibility and Searchability: Audio and video content without accurate transcripts is inaccessible to hearing-impaired individuals and difficult to search, hindering content discovery and utilization.

- Compliance and Regulatory Risks: In regulated industries, accurate and comprehensive records of voice communications are crucial for compliance, but manual processes or inaccurate STT can lead to gaps and risks.

- High Operational Costs: Relying on human transcribers for large volumes of audio data is expensive and does not scale efficiently with growing business needs.

Scope of Project

- High-Accuracy Transcription: Developing and training generative AI models to achieve state-of-the-art accuracy in transcribing spoken language, even in challenging conditions such as background noise, diverse accents, and rapid speech.

- Speaker Diarization: Implementing capabilities to accurately identify and separate individual speakers in a conversation, providing clear attribution for each transcribed segment.

- Natural Language Understanding (NLU) Integration: Integrating NLU capabilities to extract deeper meaning from transcribed text, including sentiment analysis, entity recognition, topic detection, and keyword extraction.

- Real-time and Batch Processing: Supporting both real-time transcription for live interactions (e.g., customer calls, virtual meetings) and efficient batch processing for large volumes of pre-recorded audio.

- Multi-language and Dialect Support: Expanding the system’s capabilities to accurately transcribe and understand multiple languages and regional dialects, ensuring global applicability.

- Customizable Acoustic and Language Models: Providing tools for clients to fine-tune acoustic models with their specific audio data and language models with industry-specific terminology, significantly improving accuracy for specialized use cases.

- API and SDK Development: Offering a comprehensive set of APIs and SDKs for seamless integration into existing enterprise applications, communication platforms, and data analytics tools.

- Scalability and Security: Designing the solution for high scalability to handle massive volumes of audio data and ensuring robust security measures to protect sensitive voice data and transcribed information.

- User Interface for Management and Analytics: Developing an intuitive user interface for managing transcription jobs, reviewing transcripts, and visualizing extracted insights and analytics.
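Accuracy targets like the one in the first scope item are usually measured as word error rate (WER): the substitutions S, deletions D, and insertions I needed to turn the recognizer's output into a reference transcript, divided by the number of reference words N, so WER = (S + D + I) / N. The sketch below computes it with a word-level edit distance. It assumes simple lowercase, whitespace tokenization (real benchmarks usually normalize punctuation and numbers first) and is an illustration, not the evaluation harness used in this project.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (S + D + I) / N using a word-level Levenshtein distance."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = minimum edits to turn the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # j insertions

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


if __name__ == "__main__":
    ref = "please reset my account password today"
    hyp = "please reset my count password"
    # one substitution (account -> count) and one deletion (today) over 6 words
    print(f"WER = {word_error_rate(ref, hyp):.3f}")
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference.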

Solution We Provided

- Superior Transcription Accuracy: Leveraging cutting-edge deep learning models, our STT engine delivers industry-leading accuracy, even in challenging audio environments. It excels at transcribing diverse accents, handling overlapping speech, and filtering out background noise, ensuring reliable conversion of spoken words into text.

- Intelligent Speaker Diarization: Our solution precisely identifies and separates individual speakers within a conversation, providing clear attribution for each segment of the transcript. This is crucial for understanding conversational flow, analyzing individual contributions, and improving the readability of multi-party dialogues.

- Advanced Natural Language Understanding (NLU): Beyond mere transcription, our system integrates powerful NLU capabilities. It automatically performs sentiment analysis to gauge emotional tone, extracts key entities (e.g., names, dates, products), identifies prevalent topics, and highlights critical keywords. This transforms raw text into structured, searchable, and insightful data.

- Flexible Processing Modes: We offer both real-time STT for immediate applications like live call transcription, virtual assistant interactions, and meeting minutes, as well as high-throughput batch processing for large archives of pre-recorded audio. This flexibility caters to diverse operational needs and workflows.

- Extensive Language and Dialect Support: Our models are trained on vast datasets covering numerous languages and their regional dialects, ensuring comprehensive global coverage and accurate transcription for a diverse user base. This enables businesses to serve international markets effectively.

- Customizable Models for Enhanced Performance: Clients can significantly improve transcription accuracy for their specific domain by fine-tuning our acoustic models with their proprietary audio data and adapting language models with industry-specific jargon, product names, and acronyms. This customization ensures optimal performance for specialized use cases like medical dictation or legal proceedings.

- Developer-Friendly API and SDKs: Our solution provides a robust, well-documented API and comprehensive SDKs (Software Development Kits) for seamless integration into existing applications. This allows developers to easily embed STT capabilities into CRM systems, communication platforms, analytics dashboards, and custom business applications.

- Scalable, Secure, and Compliant Architecture: Built on a cloud-native, microservices architecture, our solution is designed for massive scalability, capable of processing petabytes of audio data. We adhere to stringent security protocols and compliance standards (e.g., GDPR, HIPAA) to protect sensitive voice data and ensure data privacy.

- Intuitive Analytics Dashboard: A user-friendly web interface provides tools for managing transcription jobs, reviewing and editing transcripts, and visualizing NLU-derived insights through interactive dashboards. This empowers users to quickly gain actionable intelligence from their voice data.
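Speaker attribution can be pictured as a merge of two outputs: word-level timestamps from the recognizer and speaker turns from a diarization model. The sketch below labels each word with the turn it overlaps most in time and groups consecutive words by speaker. The `Word` and `Turn` types, the sample timings, and the maximum-overlap rule are assumptions for illustration; production diarization pipelines may attribute words differently, for example around overlapping speech.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds from the start of the recording
    end: float


@dataclass
class Turn:
    speaker: str
    start: float
    end: float


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length of the time overlap between two intervals (0.0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def attribute_words(words: list[Word], turns: list[Turn]) -> list[tuple[str, str]]:
    """Label each word with its best-overlapping speaker and merge runs into segments."""
    segments: list[tuple[str, list[str]]] = []
    for word in words:
        scored = [(overlap(word.start, word.end, t.start, t.end), t.speaker) for t in turns]
        best_overlap, best_speaker = max(scored, default=(0.0, "unknown"))
        speaker = best_speaker if best_overlap > 0 else "unknown"
        if segments and segments[-1][0] == speaker:
            segments[-1][1].append(word.text)  # same speaker continues
        else:
            segments.append((speaker, [word.text]))  # speaker change starts a new segment
    return [(speaker, " ".join(tokens)) for speaker, tokens in segments]


if __name__ == "__main__":
    words = [Word("hi", 0.0, 0.3), Word("there", 0.3, 0.6), Word("hello", 0.9, 1.2),
             Word("how", 1.2, 1.4), Word("can", 1.4, 1.6), Word("i", 1.6, 1.7), Word("help", 1.7, 2.0)]
    turns = [Turn("customer", 0.0, 0.8), Turn("agent", 0.8, 2.0)]
    for speaker, text in attribute_words(words, turns):
        print(f"{speaker}: {text}")
```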

Technical Architecture

- Machine Learning Frameworks:

- TensorFlow/PyTorch: Utilized for building and training advanced deep neural networks, including recurrent neural networks (RNNs), convolutional neural networks (CNNs), and Transformer models, which are essential for high-accuracy acoustic modeling and language understanding in STT systems.

- Cloud Infrastructure:

- Google Cloud Platform (GCP)/Amazon Web Services (AWS)/Microsoft Azure: Because it follows cloud-agnostic principles, the solution can be deployed on any of these leading cloud providers. This provides access to scalable compute resources (GPUs on all three providers, plus TPUs on GCP), object storage (e.g., S3, GCS), and managed services for databases and message queues, ensuring high availability, global reach, and elastic scalability.

- Programming Languages:

- Python: The primary language for AI/ML development, data processing, and backend services, chosen for its rich ecosystem of libraries (e.g., NumPy, Pandas, Scikit-learn) and frameworks for machine learning.

- Go/Java (for High-Performance Microservices): Used for building high-performance, low-latency microservices and API gateways that handle real-time audio streaming, transcription requests, and data orchestration.

- Database and Storage:

- NoSQL Databases (e.g., Cassandra, DynamoDB): For storing large volumes of unstructured and semi-structured data, such as audio metadata, transcription logs, and NLU-extracted insights, offering high scalability and flexibility.

- Object Storage (e.g., AWS S3, Google Cloud Storage): For efficient and cost-effective storage of raw audio files, processed audio, and large datasets used for model training.

- Containerization and Orchestration:

- Docker: For packaging the STT application and its dependencies into lightweight, portable containers, ensuring consistent deployment across development, testing, and production environments.

- Kubernetes: For orchestrating containerized applications, automating deployment, scaling, and management of the STT services, ensuring high availability and fault tolerance.

- API Management and Communication:

- RESTful APIs/gRPC: Providing secure, high-performance interfaces for client applications to interact with the STT engine, supporting both synchronous and asynchronous communication patterns.

- Kafka/RabbitMQ: For building robust, scalable message queues to handle real-time audio streams and asynchronous processing of large audio batches.

- Version Control and CI/CD:

- Git/GitHub/GitLab: For collaborative development, version control, and managing code repositories.

- Jenkins/GitHub Actions/GitLab CI/CD: For automated testing, continuous integration, and continuous deployment pipelines, ensuring rapid and reliable delivery of updates and new features.

- Monitoring and Logging:

- Prometheus/Grafana: For real-time monitoring of system performance, resource utilization, and service health, providing dashboards for operational insights.

- ELK Stack (Elasticsearch, Logstash, Kibana): For centralized logging, analysis, and visualization of system logs, aiding in troubleshooting, performance optimization, and security auditing.
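The asynchronous batch path described above (jobs placed on a message queue and consumed by transcription workers) can be sketched in-process with Python's `queue.Queue` and a few threads standing in for Kafka or RabbitMQ. The `transcribe` function and the `audio/...` paths are hypothetical placeholders; a real deployment would consume from a broker topic or queue and persist results to the NoSQL and object stores listed above.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass
class TranscriptionJob:
    job_id: str
    audio_uri: str  # hypothetical pointer into object storage


def transcribe(audio_uri: str) -> str:
    """Hypothetical placeholder for a call to the STT engine."""
    return f"<transcript of {audio_uri}>"


def run_batch(jobs: list[TranscriptionJob], num_workers: int = 4) -> dict[str, str]:
    """Fan jobs out to worker threads through a queue and collect results by job ID."""
    work: queue.Queue = queue.Queue()
    results: dict[str, str] = {}
    lock = threading.Lock()

    def worker() -> None:
        while True:
            job = work.get()
            try:
                if job is None:  # sentinel: no more jobs for this worker
                    return
                try:
                    outcome = transcribe(job.audio_uri)
                except Exception as exc:  # record the failure and keep the worker alive
                    outcome = f"FAILED: {exc}"
                with lock:
                    results[job.job_id] = outcome
            finally:
                work.task_done()  # acknowledge the item, loosely like acking a broker message

    threads = [threading.Thread(target=worker, daemon=True) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for job in jobs:
        work.put(job)
    for _ in threads:
        work.put(None)  # one sentinel per worker so every thread exits
    work.join()  # block until every job and sentinel has been acknowledged
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    batch = [TranscriptionJob(f"job-{i}", f"audio/call-{i}.wav") for i in range(10)]
    results = run_batch(batch, num_workers=3)
    print(len(results), results["job-0"])
```

Catching exceptions per job matters here: if a worker died without acknowledging its item, `work.join()` would wait forever, much as an unacknowledged message stalls a consumer group.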

- Text-to-SQL Gen AI Use Case: Empowering Business Users with Natural Language Database Access

Business Challenge/Problem Statement

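Most business users cannot write SQL, so even routine data requests have to go through IT or data teams. This dependency creates several problems:

- Delayed Insights: Routing requests through technical teams creates a backlog of data requests, delaying access to critical information and slowing down decision-making processes.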

- Limited Self-Service Analytics: Business users are unable to independently explore data, ask follow-up questions, or conduct iterative analysis, hindering agile business intelligence.

- Increased Workload for Technical Teams: IT and data teams are overwhelmed with routine data extraction tasks, diverting their focus from more strategic initiatives like data infrastructure development or advanced analytics.

- Underutilized Data Assets: The inability of non-technical users to directly interact with databases means that valuable data often remains untapped, limiting its potential to drive business value.

- Miscommunication and Misinterpretation: Translating business questions into technical SQL queries can lead to misunderstandings, resulting in incorrect data retrieval or irrelevant insights.

There is a critical need for a solution that democratizes data access, allowing non-technical users to query databases using natural language, thereby empowering them to gain immediate insights and make data-driven decisions without relying on intermediaries.

Scope of Project

This project aims to develop and implement a generative AI-powered Text-to-SQL system that enables users to query relational databases using natural language. The scope includes:

- Natural Language Understanding (NLU) for Query Interpretation: Developing advanced NLU models capable of accurately interpreting complex natural language questions, understanding user intent, and identifying relevant entities and relationships within the database schema.

- SQL Query Generation: Building a robust generative AI engine that translates interpreted natural language queries into syntactically correct and semantically accurate SQL queries, optimized for various database systems (e.g., MySQL, PostgreSQL, SQL Server, Oracle).

- Schema Linking and Metadata Management: Implementing mechanisms to automatically understand and link natural language terms to the underlying database schema (tables, columns, relationships) and manage metadata effectively to improve query generation accuracy.

- Contextual Understanding and Conversation History: Incorporating capabilities to maintain conversational context, allowing users to ask follow-up questions and refine queries iteratively without re-stating the entire request.

- Error Handling and Feedback Mechanism: Designing a system that can identify ambiguous or unanswerable queries, provide intelligent feedback to the user, and suggest clarifications or alternative phrasing.

- Security and Access Control: Ensuring that the Text-to-SQL solution adheres to strict security protocols, including user authentication, authorization, and data access policies, to prevent unauthorized data exposure.

- Integration with Existing Data Infrastructure: Providing flexible APIs and connectors for seamless integration with various enterprise data sources, business intelligence tools, and data visualization platforms.

- Performance Optimization: Optimizing the query generation process for speed and efficiency, ensuring that insights are delivered in near real-time, even for complex queries on large datasets.

- User Interface Development: Creating an intuitive and user-friendly interface (e.g., web application, chatbot integration) that facilitates natural language interaction with the database and presents query results clearly.
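Schema linking, as scoped above, maps words in a question to tables and columns. Production systems typically rely on embeddings or a language model for this step; the sketch below substitutes a much simpler technique (token overlap against column names plus a synonym table) purely to show the input and output of the step. The schema, the synonyms, and the matching rule are hypothetical.

```python
import re

# Hypothetical schema: table -> column names (in practice read from the metadata store)
SCHEMA = {
    "sales": ["order_id", "region", "order_date", "revenue"],
    "customers": ["customer_id", "customer_name", "region"],
}

# Hypothetical business vocabulary mapped to column names
SYNONYMS = {"sales": "revenue", "turnover": "revenue", "area": "region", "client": "customer_name"}


def link_schema(question: str) -> list[tuple[str, str]]:
    """Return (table, column) pairs mentioned in the question, directly or via a synonym."""
    words = set(re.findall(r"[a-z0-9]+", question.lower()))
    # keep the raw words and add the column names their synonyms point to
    candidates = words | {SYNONYMS[w] for w in words if w in SYNONYMS}
    links = []
    for table, columns in SCHEMA.items():
        for column in columns:
            # exact column name, or every underscore-separated part mentioned ("order date")
            if column in candidates or set(column.split("_")) <= candidates:
                links.append((table, column))
    return links


if __name__ == "__main__":
    print(link_schema("What were total sales by region in Q1?"))
```

In this example `region` links to both tables. Resolving that ambiguity, for instance by preferring the table that other terms already point to or by asking the user, belongs to the SQL generation and error-handling steps listed in the scope.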

Solution We Provided

- Intuitive Natural Language Interface: Users can simply type their questions in plain English (or other supported natural languages), just as they would ask a human data analyst. Our system leverages advanced Natural Language Understanding (NLU) to accurately interpret user intent, identify key entities, and understand the relationships between data points.

- Intelligent SQL Generation: At the core of our solution is a sophisticated generative AI engine that translates natural language queries into highly optimized and semantically correct SQL statements. This engine is trained on vast datasets of natural language questions and corresponding SQL queries, enabling it to handle complex joins, aggregations, filtering, and sorting operations across various database schemas.

- Dynamic Schema Understanding and Linking: Our system dynamically analyzes the database schema, including table names, column names, data types, and relationships. It intelligently links natural language terms to the appropriate database elements, even for non-standard naming conventions, ensuring accurate query generation without manual mapping.

- Contextual Awareness and Conversational Flow: The solution maintains conversational context, allowing users to ask follow-up questions and refine their queries iteratively. For example, a user can ask “Show me sales for Q1,” and then follow up with “Now show me by region” without re-specifying the initial query parameters.

- Robust Error Handling and User Guidance: In cases of ambiguous or incomplete queries, our system provides intelligent feedback, suggesting clarifications or alternative phrasing to guide the user towards a successful query. This proactive assistance minimizes frustration and improves the user experience.

- Enterprise-Grade Security and Access Control: We integrate seamlessly with existing enterprise security frameworks, ensuring that users can only access data for which they have authorized permissions. All generated SQL queries are validated against predefined security policies before execution, safeguarding sensitive information.

- Seamless Integration and Extensibility: Our solution offers flexible APIs and connectors, allowing for easy integration with existing data ecosystems, including business intelligence dashboards, data visualization tools, enterprise applications, and popular chat platforms. This ensures that data insights are accessible where and when they are needed.

- High Performance and Scalability: Designed for enterprise environments, our Text-to-SQL engine is optimized for speed and efficiency, capable of generating and executing complex queries on large datasets in near real-time. Its scalable architecture can handle a growing number of users and increasing data volumes without compromising performance.

- Auditability and Transparency: For compliance and debugging purposes, our system provides full audit trails of natural language queries, generated SQL, and query results, ensuring transparency and accountability in data access.
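The follow-up example in the Contextual Awareness point ("Show me sales for Q1", then "Now show me by region") can be illustrated by keeping the previous turn's structured intent and merging each follow-up into it before rendering SQL. This is a minimal sketch under stated assumptions: the `QueryIntent` fields, the `sales` table, and its `quarter` and `region` columns are hypothetical, and the intents are written by hand here, whereas in the described system they would come from the NLU and generative model.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class QueryIntent:
    """Hypothetical structured intent that an NLU step might extract from one utterance."""
    metric: str = "SUM(revenue)"
    table: str = "sales"
    filters: tuple[str, ...] = ()
    group_by: tuple[str, ...] = ()


def merge_follow_up(previous: QueryIntent, *, add_filters: tuple[str, ...] = (),
                    add_group_by: tuple[str, ...] = ()) -> QueryIntent:
    """Carry the earlier turn's intent forward and layer the follow-up's additions on top."""
    return replace(
        previous,
        filters=previous.filters + tuple(f for f in add_filters if f not in previous.filters),
        group_by=previous.group_by + tuple(g for g in add_group_by if g not in previous.group_by),
    )


def to_sql(intent: QueryIntent) -> str:
    """Render an intent as SQL; the result would still be validated before execution."""
    select = ", ".join(intent.group_by + (f"{intent.metric} AS total",))
    sql = f"SELECT {select} FROM {intent.table}"
    if intent.filters:
        sql += " WHERE " + " AND ".join(intent.filters)
    if intent.group_by:
        sql += " GROUP BY " + ", ".join(intent.group_by)
    return sql


if __name__ == "__main__":
    turn1 = QueryIntent(filters=("quarter = 'Q1'",))          # "Show me sales for Q1"
    turn2 = merge_follow_up(turn1, add_group_by=("region",))  # "Now show me by region"
    print(to_sql(turn1))
    print(to_sql(turn2))
```

Making the intent immutable keeps every earlier turn intact, which also makes it straightforward to log each step of a conversation for the audit trail.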

Technology Environment

Our generative AI Text-to-SQL solution is built upon a robust and scalable technology stack, designed for high performance, accuracy, and seamless integration into diverse enterprise data environments. The core components and technologies include:

- Machine Learning Frameworks:

- TensorFlow/PyTorch: Utilized for building and training advanced deep learning models, particularly large language models (LLMs) and transformer-based architectures, which are fundamental for Natural Language Understanding (NLU) and SQL generation.

- Cloud Infrastructure:

- Google Cloud Platform (GCP)/Amazon Web Services (AWS)/Microsoft Azure: Because it follows cloud-agnostic principles, the solution can be deployed on any of these leading cloud providers. This provides access to scalable compute resources (GPUs on all three providers, plus TPUs on GCP), managed database services, and object storage, ensuring high availability, global reach, and elastic scalability.

- Programming Languages:

- Python: The primary language for AI/ML development, NLU processing, and backend services, chosen for its extensive libraries (e.g., Hugging Face Transformers, SpaCy) and frameworks for machine learning and data manipulation.

- Java/Go (for High-Performance API and Data Connectors): Used for building high-performance, low-latency API gateways and data connectors that interact with various database systems and orchestrate query execution.

- Database Connectivity and Management:

- SQLAlchemy/JDBC/ODBC: For establishing secure and efficient connections to a wide range of relational database management systems (RDBMS) like MySQL, PostgreSQL, SQL Server, Oracle, and Snowflake.

- Metadata Stores (e.g., Apache Atlas, Custom Solutions): For managing and storing database schema information, table and column descriptions, and data lineage, crucial for accurate schema linking and contextual understanding.

- Containerization and Orchestration:

- Docker: For packaging the Text-to-SQL application and its dependencies into portable containers, ensuring consistent deployment across different environments.

- Kubernetes: For orchestrating containerized applications, automating deployment, scaling, and management of the Text-to-SQL services, ensuring high availability and fault tolerance.

- API Management and Communication:

- RESTful APIs/gRPC: Providing secure, high-performance interfaces for client applications to submit natural language queries and receive the generated SQL, query results, and data insights.

- Message Queues (e.g., Apache Kafka, RabbitMQ): For asynchronous processing of complex queries, managing query queues, and enabling real-time data streaming for analytics.

- Version Control and CI/CD:

- Git/GitHub/GitLab: For collaborative development, version control, and managing code repositories.

- Jenkins/GitHub Actions/GitLab CI/CD: For automated testing, continuous integration, and continuous deployment pipelines, ensuring rapid and reliable delivery of updates and new features.

- Monitoring and Logging:

- Prometheus/Grafana: For real-time monitoring of system performance, query execution times, and resource utilization.

- ELK Stack (Elasticsearch, Logstash, Kibana): For centralized logging, analysis, and visualization of system logs, aiding in troubleshooting, performance optimization, and security auditing.

This robust technology environment ensures that our generative AI Text-to-SQL solution is not only powerful and accurate but also highly scalable, secure, and easily maintainable, capable of meeting the demanding requirements of various enterprise data analytics needs.
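The security and audit properties claimed for the solution (generated SQL validated against policy before execution, and a full trail of question, SQL, and outcome) can be sketched with Python's standard-library `sqlite3` module standing in for the SQLAlchemy/JDBC/ODBC connectors. The policy here (a single read-only `SELECT` over allow-listed tables, with a row cap), the `sales` table, and the audit record format are assumptions for illustration. Regex checks like these are only a coarse first layer; real deployments would also rely on a proper SQL parser and database-level permissions, represented below by SQLite's `PRAGMA query_only`.

```python
import json
import re
import sqlite3
import time

ALLOWED_TABLES = {"sales"}  # hypothetical per-user allow-list
FORBIDDEN = re.compile(r"\b(insert|update|delete|drop|alter|create|attach|pragma)\b", re.I)
AUDIT_LOG: list[str] = []  # stand-in for an append-only audit store


def validate(sql: str) -> None:
    """Reject anything other than a single read-only SELECT over allowed tables."""
    stripped = sql.strip().rstrip(";")
    if ";" in stripped:
        raise PermissionError("multiple statements are not allowed")
    if not stripped.lower().startswith("select") or FORBIDDEN.search(stripped):
        raise PermissionError("only read-only SELECT statements are allowed")
    tables = {t.lower() for t in re.findall(r"\b(?:from|join)\s+([A-Za-z_]\w*)", stripped, re.I)}
    if not tables:
        raise PermissionError("query must read from an allowed table")
    if not tables <= ALLOWED_TABLES:
        raise PermissionError(f"tables not permitted: {sorted(tables - ALLOWED_TABLES)}")


def run_audited(conn: sqlite3.Connection, user: str, question: str, sql: str,
                max_rows: int = 1000) -> list[tuple]:
    """Validate, execute with a row cap, and record question, SQL, and outcome."""
    entry = {"ts": time.time(), "user": user, "question": question, "sql": sql}
    try:
        validate(sql)
        rows = conn.execute(sql).fetchmany(max_rows)
        entry.update(status="ok", row_count=len(rows))
        return rows
    except PermissionError as exc:
        entry.update(status="rejected", error=str(exc))
        raise
    except sqlite3.Error as exc:
        entry.update(status="error", error=str(exc))
        raise
    finally:
        AUDIT_LOG.append(json.dumps(entry))  # every attempt is logged, allowed or not


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, revenue REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", [("EMEA", 120.0), ("APAC", 80.0), ("EMEA", 30.0)])
    conn.commit()
    conn.execute("PRAGMA query_only = ON")  # SQLite-level read-only guard as a second layer
    print(run_audited(conn, "analyst1", "Sales by region",
                      "SELECT region, SUM(revenue) FROM sales GROUP BY region ORDER BY region"))
    print(AUDIT_LOG[-1])
```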

- Text-to-Speech Gen AI Use Case: Enhancing Customer Experience with Dynamic Voice Interactions

Business Challenge/Problem Statement

Traditional text-to-speech (TTS) solutions often suffer from robotic, unnatural-sounding voices, lacking the intonation, emotion, and nuance required for engaging human-like interactions. This limitation significantly impacts customer experience in various sectors, including customer support, e-learning, content creation, and accessibility services. Businesses struggle to deliver personalized and empathetic voice interactions at scale, leading to:

- Poor Customer Engagement: Monotonous voices can disengage customers, leading to frustration and reduced satisfaction in automated systems.

- Limited Brand Representation: Brands find it challenging to convey their unique tone and personality through generic, synthetic voices.

- Inefficient Content Production: Creating high-quality audio content for e-learning modules, audiobooks, or marketing materials is often time-consuming and expensive, requiring professional voice actors.

- Accessibility Barriers: While TTS aids accessibility, unnatural voices can still pose comprehension challenges for users with cognitive disabilities or those who rely heavily on auditory information.

There is a clear need for a next-generation TTS solution that leverages generative AI to produce highly natural, emotionally intelligent, and customizable voices, capable of transforming digital interactions into rich, human-like experiences.

Scope of the Project

This project aims to develop and implement an advanced text-to-speech (TTS) system powered by generative AI, specifically designed to overcome the limitations of traditional TTS. The scope includes:

- Development of a Custom Voice Model: Training a generative AI model on a diverse dataset of human speech to create a highly natural and expressive voice. This model will be capable of generating speech with appropriate intonation, rhythm, and emotional nuances.

- Emotion and Tone Recognition: Integrating capabilities to detect and interpret emotional cues from input text, allowing the TTS system to generate speech that matches the intended sentiment (e.g., happy, sad, urgent).

- Multi-language and Accent Support: Expanding the system’s capabilities to support multiple languages and regional accents, ensuring global applicability and localized user experiences.

- API Integration: Providing a robust and easy-to-integrate API for seamless adoption across various platforms and applications, including customer service chatbots, virtual assistants, e-learning platforms, and content management systems.

- Scalability and Performance Optimization: Ensuring the solution is highly scalable to handle large volumes of text-to-speech conversions in real-time, with optimized performance for low-latency applications.

- User Customization: Allowing users to fine-tune voice parameters such as pitch, speaking rate, and emphasis, and potentially create unique brand voices.

- Ethical AI Considerations: Implementing safeguards to prevent misuse and ensure responsible deployment of the generative AI TTS technology, including addressing concerns around deepfakes and voice cloning.
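The user customization described in the scope (pitch, speaking rate, emphasis) is commonly passed to TTS engines as SSML, the W3C Speech Synthesis Markup Language, whose `<prosody>` and `<emphasis>` elements carry exactly these controls. The sketch below builds an SSML document with Python's standard `xml.etree.ElementTree`. The parameter values are examples, and which SSML attributes and values a particular engine honors varies by vendor, so this is an assumption-laden illustration rather than this platform's API.

```python
import xml.etree.ElementTree as ET

SSML_NS = "http://www.w3.org/2001/10/synthesis"
XML_NS = "http://www.w3.org/XML/1998/namespace"  # namespace of the xml:lang attribute


def build_ssml(text: str, pitch: str = "+0%", rate: str = "100%", emphasize: str = "",
               level: str = "strong", lang: str = "en-US") -> str:
    """Wrap text in <speak><prosody>, optionally marking one phrase with <emphasis>."""
    ET.register_namespace("", SSML_NS)  # serialize SSML elements without a prefix
    speak = ET.Element(f"{{{SSML_NS}}}speak", {"version": "1.1", f"{{{XML_NS}}}lang": lang})
    prosody = ET.SubElement(speak, f"{{{SSML_NS}}}prosody", {"pitch": pitch, "rate": rate})
    before, found, after = text.partition(emphasize) if emphasize else (text, "", "")
    prosody.text = before
    if found:
        emphasis = ET.SubElement(prosody, f"{{{SSML_NS}}}emphasis", {"level": level})
        emphasis.text = found
        emphasis.tail = after  # text after the emphasized phrase stays inside <prosody>
    return ET.tostring(speak, encoding="unicode")  # ElementTree escapes &, <, > in the text


if __name__ == "__main__":
    print(build_ssml("Your order has shipped and arrives tomorrow.",
                     pitch="+5%", rate="90%", emphasize="arrives tomorrow"))
```

Building the markup with an XML library rather than string concatenation means user-supplied text containing characters such as `&` or `<` cannot break the document.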

Solution We Provided

Our generative AI-powered text-to-speech solution addresses the identified challenges by offering a sophisticated platform that transforms text into highly natural and emotionally rich spoken audio. Key features of our solution include:

- Human-like Voice Synthesis: Leveraging advanced neural networks and deep learning models, our system generates speech that closely mimics human intonation, rhythm, and pronunciation, significantly reducing the ‘robotic’ sound often associated with traditional TTS.

- Emotional Intelligence: The solution incorporates a sophisticated emotion recognition engine that analyzes the sentiment of the input text. This allows the AI to dynamically adjust the voice’s tone, pitch, and speaking style to convey appropriate emotions, such as empathy, excitement, or urgency, making interactions more engaging and relatable.

- Voice Customization and Branding: Clients can choose from a diverse library of pre-trained voices or work with us to create a unique brand voice. This includes fine-tuning parameters like accent, gender, age, and speaking pace, ensuring consistency with brand identity across all voice interactions.

- Multi-lingual and Multi-accent Support: Our solution supports a wide range of languages and regional accents, enabling businesses to cater to a global audience with localized and culturally appropriate voice content. This is crucial for international customer support, e-learning, and content distribution.

- Real-time Processing and Scalability: Engineered for high performance, the system can convert large volumes of text to speech in real-time, making it suitable for dynamic applications like live customer service calls, interactive voice response (IVR) systems, and real-time content generation. Its scalable architecture ensures consistent performance even during peak demand.

- Seamless API Integration: We provide a well-documented and easy-to-use API that allows for straightforward integration into existing applications and workflows. This includes web applications, mobile apps, content management systems, and enterprise software, minimizing development overhead for clients.

- Content Creation Efficiency: By automating the voiceover process with high-quality, natural-sounding voices, our solution drastically reduces the time and cost associated with producing audio content for e-learning modules, audiobooks, podcasts, marketing campaigns, and accessibility features.

- Ethical and Responsible AI: We prioritize ethical AI development, implementing robust measures to prevent misuse of voice synthesis technology. This includes watermarking generated audio and providing tools for content authentication, addressing concerns related to deepfakes and ensuring responsible deployment.
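The Emotional Intelligence feature analyzes the sentiment of input text and adjusts tone, pitch, and speaking style. In a generative TTS model this conditioning is learned; the sketch below swaps in a deliberately simple rule-based version (a keyword lexicon plus a fixed table of prosody presets) only to make the data flow concrete. The lexicon, emotion labels, and preset values are hypothetical.

```python
import re

# Hypothetical keyword lexicon; a production system would use a trained classifier.
LEXICON = {
    "urgency": {"immediately", "urgent", "now", "asap", "warning"},
    "empathy": {"sorry", "apologize", "unfortunately", "understand"},
    "excitement": {"congratulations", "great", "amazing", "welcome"},
}

# Hypothetical prosody presets, expressed as SSML-style relative pitch and rate values.
PRESETS = {
    "urgency": {"pitch": "+10%", "rate": "115%"},
    "empathy": {"pitch": "-5%", "rate": "90%"},
    "excitement": {"pitch": "+15%", "rate": "105%"},
    "neutral": {"pitch": "+0%", "rate": "100%"},
}


def detect_emotion(text: str) -> str:
    """Pick the label whose cue words occur most often; fall back to neutral."""
    words = re.findall(r"[a-z]+", text.lower())
    scores = {label: sum(w in cues for w in words) for label, cues in LEXICON.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "neutral"


def prosody_for(text: str) -> dict[str, str]:
    """Map input text to the prosody settings used when synthesizing it."""
    return PRESETS[detect_emotion(text)]


if __name__ == "__main__":
    for line in ["We are sorry, we understand the delay.", "Please update your password immediately."]:
        print(detect_emotion(line), prosody_for(line))
```

The returned settings could then drive the pitch and rate attributes of an SSML `<prosody>` element or the conditioning inputs of a neural voice model.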

Technical Architecture

- Machine Learning Frameworks:

- TensorFlow/PyTorch: Utilized for building and training deep neural networks, particularly for advanced generative models like WaveNet, Tacotron, and Transformer-based architectures, which are fundamental to natural-sounding speech synthesis.

- Cloud Infrastructure:

- Google Cloud Platform (GCP)/Amazon Web Services (AWS)/Microsoft Azure: Because it follows cloud-agnostic principles, the solution can be deployed on any of these leading cloud providers for scalable compute resources (GPUs on all three providers, plus TPUs on GCP), storage, and managed services. This ensures high availability, global reach, and elastic scalability to handle varying workloads.

- Programming Languages:

- Python: The primary language for AI/ML development, data processing, and API backend services, due to its extensive libraries and frameworks for machine learning.

- Node.js/Go (for API Gateway/Microservices): Used for building high-performance, low-latency API gateways and microservices that handle requests and orchestrate interactions between different components of the TTS system.

- Database and Storage:

- NoSQL Databases (e.g., MongoDB, Cassandra): For storing large volumes of unstructured data, such as audio samples, voice models, and metadata, offering flexibility and scalability.

- Object Storage (e.g., Google Cloud Storage, AWS S3): For efficient and cost-effective storage of large audio datasets and generated speech files.

- Containerization and Orchestration:

- Docker: For packaging applications and their dependencies into portable containers, ensuring consistent deployment across different environments.

- Kubernetes: For orchestrating containerized applications, managing deployments, scaling, and ensuring high availability of the TTS services.

- API Management:

- RESTful APIs/gRPC: Providing well-defined interfaces for seamless integration with client applications, ensuring secure and efficient communication.

- Version Control and CI/CD:

- Git/GitHub/GitLab: For collaborative development, version control, and managing code repositories.

- Jenkins/GitHub Actions/GitLab CI/CD: For automated testing, building, and deployment pipelines, ensuring rapid and reliable delivery of updates and new features.

- Monitoring and Logging:

- Prometheus/Grafana: For real-time monitoring of system performance, resource utilization, and service health.

- ELK Stack (Elasticsearch, Logstash, Kibana): For centralized logging, analysis, and visualization of system logs, aiding in troubleshooting and performance optimization.

- Autonomous Agentic AI for Scientific Discovery

The Challenge

Traditional scientific research and drug discovery processes are slow, resource-intensive, and prone to human bias. Researchers often face bottlenecks due to:

- Manual literature review of vast scientific databases.

- Cognitive bias that limits exploration of unconventional hypotheses.

- High time and cost associated with hypothesis testing and simulations.

- Lack of scalability, as human researchers cannot operate continuously at large scale.

The goal was to design an autonomous, intelligent agent system that manages the entire R&D lifecycle, from hypothesis generation through experiment design, analysis, and recommendation, to dramatically accelerate innovation.

Scope of Project

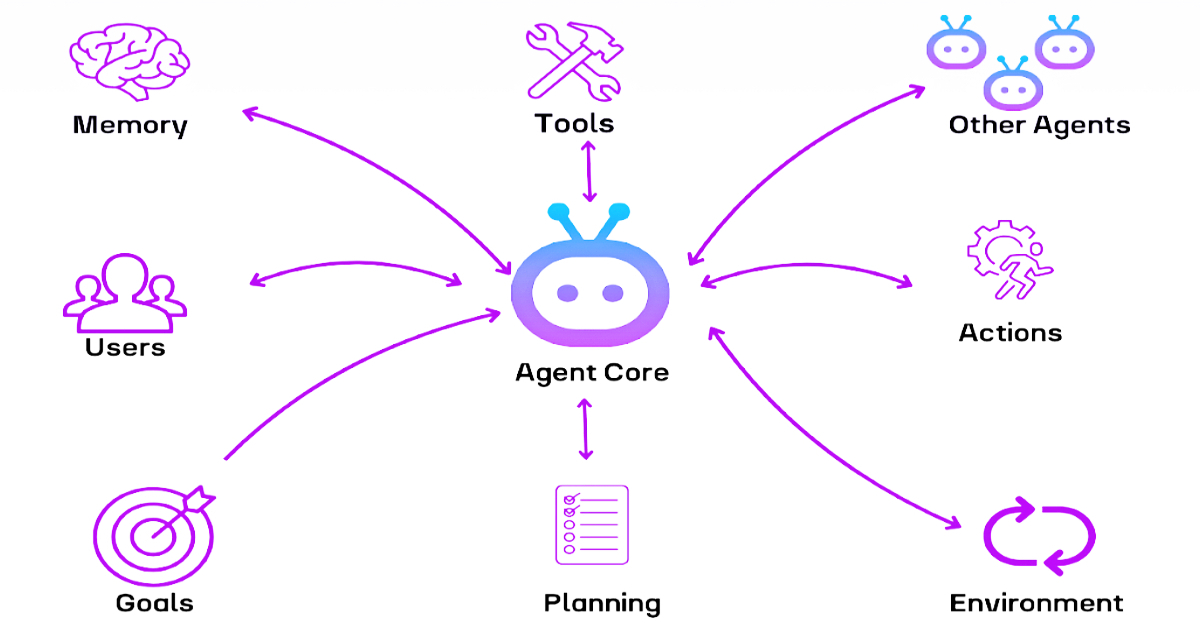

Solution: Agentic AI Workflow

- Task Decomposition & Planning (Project Manager Agent)

- Interprets high-level research goals and breaks them into structured tasks.

- Plans workflows covering literature review, hypothesis generation, simulations, and result analysis.

- Information Gathering & Synthesis (Research Assistant Agent)

- Crawls and queries scientific databases (PubMed, arXiv, patents).

- Summarizes findings and compiles a state-of-the-art knowledge base.

- Hypothesis Generation & Experiment Design (Scientist Agent)

- Formulates testable hypotheses.

- Designs in-silico experiments, writing and executing simulation code on cloud-based HPC infrastructure.

- Analysis, Learning, & Iteration (Lead Analyst Agent)

- Analyzes simulation results and identifies promising candidates.

- Employs agentic reasoning to refine hypotheses, optimize parameters, and rerun experiments.

- Reporting & Recommendation (Communicator Agent)

- Generates a comprehensive scientific report detailing methods, findings, and confidence scores.

- Provides actionable insights to human researchers for lab validation.
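The five-agent workflow above can be read as a pipeline over shared state with a feedback loop between the Scientist and Lead Analyst agents. The sketch below shows only that control flow. Every agent is a plain Python function with stubbed, hypothetical behavior (in the described platform these steps involve database queries, generated simulation code, and HPC runs), and the scoring stub, threshold, round limit, and example goal are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class ResearchState:
    """Shared record passed between agents; doubles as the digital trail of the run."""
    goal: str
    tasks: list[str] = field(default_factory=list)
    knowledge: list[str] = field(default_factory=list)
    scores: dict[str, float] = field(default_factory=dict)  # hypothesis -> simulation score
    report: str = ""


def project_manager(state: ResearchState) -> None:
    state.tasks = ["literature review", "hypothesis generation", "simulation", "analysis", "report"]


def research_assistant(state: ResearchState) -> None:
    # Stub for querying PubMed, arXiv, and patent databases and summarizing the findings.
    state.knowledge.append(f"summary of prior work on {state.goal}")


def scientist(state: ResearchState, round_no: int) -> str:
    # Stub: a real agent would propose a hypothesis from the knowledge base and prior scores.
    return f"candidate-{round_no}"


def simulate(hypothesis: str, round_no: int) -> float:
    # Hypothetical stand-in for an HPC simulation; scores simply improve each round.
    return 0.5 + 0.15 * round_no


def lead_analyst(state: ResearchState, hypothesis: str, round_no: int, threshold: float) -> bool:
    state.scores[hypothesis] = simulate(hypothesis, round_no)
    return state.scores[hypothesis] >= threshold  # True means promising enough to stop iterating


def communicator(state: ResearchState) -> None:
    best = max(state.scores, key=state.scores.get)
    state.report = (f"Top candidate: {best} (score {state.scores[best]:.2f}) "
                    f"after {len(state.scores)} rounds; recommend lab validation.")


def run_workflow(goal: str, threshold: float = 0.9, max_rounds: int = 5) -> ResearchState:
    state = ResearchState(goal)
    project_manager(state)
    research_assistant(state)
    for round_no in range(1, max_rounds + 1):  # refine, rerun, and re-analyze
        hypothesis = scientist(state, round_no)
        if lead_analyst(state, hypothesis, round_no, threshold):
            break
    communicator(state)
    return state


if __name__ == "__main__":
    print(run_workflow("kinase inhibitors").report)
```

The round limit is a design choice worth keeping in any real version: it bounds compute spend when no hypothesis reaches the threshold, and the report still surfaces the best candidate found.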

The deployment of an Agentic AI-driven R&D platform revolutionized scientific discovery workflows. By automating the entire research pipeline—data collection, hypothesis generation, simulation, and analysis—the solution significantly accelerated drug discovery and innovation while reducing cost and human error.

Business Impact

| Impact Area | Results Achieved |
| --- | --- |
| Time-to-Discovery | Reduced early-stage R&D timelines from years to weeks or days, accelerating time to market. |
| Cost Optimization | Decreased manual research and simulation costs by 40–60% through automation. |
| Exploration of Novel Solutions | Identified non-obvious, high-potential compounds by overcoming human cognitive bias. |
| Scalability | Enabled 24/7 autonomous research, continuously iterating on hypotheses without downtime. |
| Reproducibility & Transparency | Maintained a fully documented digital record of every research step for auditing and replication. |
| Innovation Enablement | Provided scientists with validated, AI-driven recommendations, freeing them to focus on creative problem-solving. |

Technical Architecture

- Core AI Techniques: Multi-agent systems, agentic reasoning, natural language processing, reinforcement learning.

- Scientific Tools: Protein structure prediction models (e.g., AlphaFold 3), molecular docking simulations.

- Infrastructure: Cloud-based HPC for large-scale simulations and analytics.

- Case Study – End-to-End Automated Supply Chain Resolution Agent

The Challenge

Global supply chains are increasingly complex and vulnerable to disruptions caused by weather events, geopolitical tensions, port congestion, transportation delays, and supplier issues. Traditional supply chain management systems are often reactive, providing alerts but requiring human intervention for problem-solving. This leads to:

- High downtime costs due to delayed shipments or factory shutdowns.

- Siloed decision-making without optimization across the entire supply chain.

- Slow response times, resulting in lost revenue and reduced customer satisfaction.

- Limited scalability, as human teams cannot handle disruptions at global scale in real time.

The goal was to build an autonomous AI-driven system capable of diagnosing, planning, and resolving supply chain disruptions end-to-end without human intervention, ensuring resilience and agility.

Scope of Project

To create an Agentic AI solution that acts as a digital supply chain manager, autonomously monitoring shipments, evaluating contingency plans, and executing resolutions in real time—while proactively communicating with all stakeholders.


Solution: Agentic AI Supply Chain Workflow

- Continuous Monitoring & Diagnosis (Sentinel Agent)

- Constantly monitors IoT sensor data, weather forecasts, port congestion databases, ERP inventory levels, and shipping carrier updates.

- Detects disruptions (e.g., delayed shipments, material shortages, or unexpected factory downtimes).

- Strategic Planning & Evaluation (Strategist Agent)

- Generates multiple response scenarios (rerouting shipments, sourcing alternatives, rescheduling production).

- Evaluates solutions against cost, time-to-resolution, production schedules, and downstream customer impact.

- Autonomous Execution & Negotiation (Executor Agent)

- Executes the chosen plan autonomously:

- Places purchase orders with alternate suppliers.

- Reroutes shipments via logistics carriers.

- Updates ERP and production systems in real time.


- Proactive Communication & Stakeholder Management (Coordinator Agent)

- Notifies relevant teams with clear, actionable updates:

- Factory managers receive updated production timelines.

- Procurement teams receive cost approvals.

- Customers receive proactive delivery updates.

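The four-agent workflow above can be illustrated with a minimal Python sketch. It assumes simplified event records (`shipment_id`, `delay_hours`, `cause`), a fixed set of hard-coded candidate plans, and a hypothetical weighted score over cost, time to resolution, and customer impact. The function names (`sentinel`, `strategist`, `executor`, `coordinator`) only mirror the agent roles and are not taken from the actual platform; a real deployment would replace the stubs with calls to IoT feeds, carrier APIs, and the ERP system.

```python
from dataclasses import dataclass

@dataclass
class Disruption:
    shipment_id: str
    delay_hours: float
    cause: str

@dataclass
class Plan:
    name: str
    cost: float              # incremental cost (hypothetical units)
    resolution_hours: float  # estimated time to resolve
    customer_impact: float   # 0.0 = none, 1.0 = severe (hypothetical scale)

def sentinel(events, delay_threshold_hours=24.0):
    """Sentinel Agent: flag shipments whose reported delay exceeds a threshold."""
    return [Disruption(e["shipment_id"], e["delay_hours"], e["cause"])
            for e in events if e["delay_hours"] > delay_threshold_hours]

def strategist(d):
    """Strategist Agent: propose candidate plans (hard-coded stand-ins)."""
    return [
        Plan("wait", 0.0, d.delay_hours, 0.8),
        Plan("reroute", 12_000.0, 18.0, 0.3),
        Plan("alternate_supplier", 30_000.0, 8.0, 0.1),
    ]

def score(plan, weights):
    """Lower is better: weighted sum of roughly normalized cost, time, and impact."""
    return (weights["cost"] * plan.cost / 10_000
            + weights["time"] * plan.resolution_hours / 24
            + weights["impact"] * plan.customer_impact)

def executor(d, plan):
    """Executor Agent: list the system actions the plan would trigger (stubbed)."""
    steps = {
        "wait": ["update ERP ETA"],
        "reroute": ["reroute shipment via carrier", "update ERP ETA"],
        "alternate_supplier": ["place PO with alternate supplier",
                               "update production schedule"],
    }
    return [f"{step} ({d.shipment_id})" for step in steps[plan.name]]

def coordinator(d, plan):
    """Coordinator Agent: draft one update per stakeholder group."""
    return {
        "factory_manager": f"{d.shipment_id}: expected resolution in {plan.resolution_hours:.0f}h",
        "procurement": f"{d.shipment_id}: approve cost {plan.cost:,.0f} for plan '{plan.name}'",
        "customer": f"Your order ({d.shipment_id}) is affected by {d.cause}; we are acting on it.",
    }

def run_pipeline(events, weights):
    """Detect -> plan -> execute -> notify, once per detected disruption."""
    results = []
    for d in sentinel(events):
        best = min(strategist(d), key=lambda p: score(p, weights))
        results.append({
            "shipment": d.shipment_id,
            "plan": best.name,
            "actions": executor(d, best),
            "notices": coordinator(d, best),
        })
    return results

# Made-up sample events: only SHP-001 exceeds the 24-hour threshold.
events = [
    {"shipment_id": "SHP-001", "delay_hours": 72, "cause": "port congestion"},
    {"shipment_id": "SHP-002", "delay_hours": 2, "cause": "local traffic"},
]
for r in run_pipeline(events, {"cost": 1.0, "time": 1.0, "impact": 1.0}):
    print(r["shipment"], r["plan"], r["actions"])
```

With equal weights the sketch picks rerouting; weighting time and customer impact more heavily than cost (for example `{"cost": 0.1, "time": 1.0, "impact": 1.0}`) makes the faster but more expensive alternate-supplier plan win instead.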

Business Impact

The Automated Supply Chain Resolution Agent transformed traditional supply chain operations from reactive firefighting to proactive resilience management. By autonomously detecting disruptions, strategizing contingency plans, and executing actions, it empowered enterprises to maintain uninterrupted production, reduce losses, and enhance customer confidence.

| Impact Area | Results Achieved |
|---|---|
| Disruption Resolution Speed | Reduced time to resolve disruptions from days to minutes or hours, ensuring uninterrupted production. |
| Cost Optimization | Lowered operational losses by 40–50% through proactive adjustments and risk-based decision-making. |
| Production Continuity | Eliminated costly downtime, safeguarding millions in potential revenue loss per incident. |
| Customer Satisfaction | Improved on-time delivery rates by 30–40%, driving better customer trust and retention. |
| Scalability | Managed thousands of shipments and events simultaneously without increasing workforce. |
| Continuous Improvement | Learned from historical disruptions, improving response quality over time. |

Technical Architecture

- AI Capabilities: Agentic reasoning, reinforcement learning, NLP-driven communication.

- Data Integration: IoT sensors, weather APIs, logistics platforms, ERP systems.

- Automation Layer: Robotic process automation (RPA) and API-based system integrations.

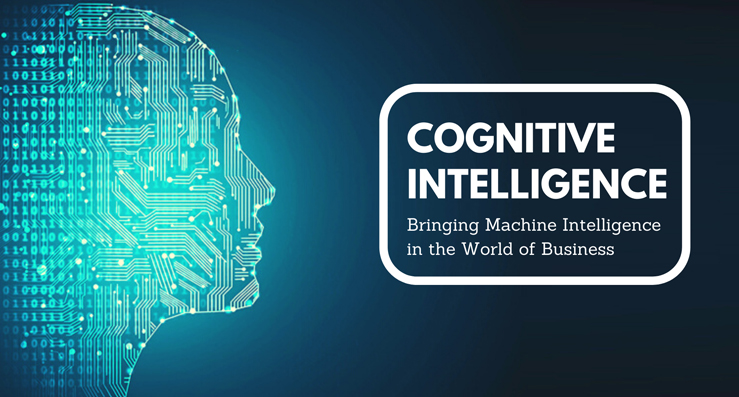

- Case Study: Cognitive AI for Intelligent and Empathetic Customer Support

Industry: Customer Service & Technology

Challenges

- Traditional chatbots lacked emotional intelligence and context awareness, leading to frustrating user experiences.

- Resolving complex, multi-step technical issues required frequent escalation to human agents, slowing resolution times.

- High operational costs due to the need for large customer support teams to handle intricate cases.

- Lack of proactive issue detection, resulting in delayed responses to widespread problems.

- Disconnected customer experience due to limited memory of prior interactions, causing repetitive queries and reduced satisfaction.

Scope of the Project

- Deploy Cognitive AI agents capable of understanding customer intent, context, and emotion.

- Enable multi-turn, stateful conversations for resolving complex customer issues end-to-end without human intervention.

- Automate diagnostic workflows, software updates, and issue resolution through agentic reasoning.

- Continuously improve system intelligence by learning from every customer interaction.

- Build a proactive support system to detect and mitigate issues before customers escalate them.

Solution We Provided

- Implemented a Cognitive AI-powered customer support platform with the following key features:

- Advanced NLP & Emotional Intelligence: Ability to detect urgency, frustration, and intent, allowing AI agents to respond empathetically.

- Agentic Reasoning Module: AI autonomously diagnoses technical issues, executes multi-step solutions (e.g., firmware updates), and validates resolutions.

- Stateful Contextual Memory: Retains conversation history and past interactions, ensuring smooth and personalized responses.

- Continuous Learning: AI improves its knowledge base in real time, optimizing performance and reducing repetitive issues.

- Proactive Issue Detection: The system identifies patterns in isolated cases and alerts teams about potential large-scale problems.
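Three of the features listed above (urgency and frustration detection, stateful contextual memory, and proactive issue detection) can be illustrated with a small Python sketch. The keyword sets are a deliberately crude, hypothetical stand-in for the trained NLP and sentiment models the platform would actually use; `SessionMemory` is an in-memory substitute for a persistent conversation store; and `IssueSpikeDetector` shows one simple approach (a sliding time window that counts distinct customers per issue) for turning isolated cases into an early alert. None of these names come from the original system.

```python
import re
from collections import defaultdict, deque

# Hypothetical cue words; a production system would use trained classifiers instead.
FRUSTRATION_CUES = {"again", "still", "frustrated", "useless", "angry"}
URGENCY_CUES = {"urgent", "asap", "immediately", "outage", "down"}

def detect_signals(message):
    """Flag frustration and urgency by matching whole words against cue lists."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    return {
        "frustrated": bool(words & FRUSTRATION_CUES),
        "urgent": bool(words & URGENCY_CUES),
    }

class SessionMemory:
    """Keep the last `max_turns` messages per customer (in memory only)."""

    def __init__(self, max_turns=20):
        self._history = defaultdict(lambda: deque(maxlen=max_turns))

    def add(self, customer_id, message):
        self._history[customer_id].append(message)

    def history(self, customer_id):
        # .get avoids creating an empty entry for unknown customers
        return list(self._history.get(customer_id, ()))

class IssueSpikeDetector:
    """Alert when enough distinct customers report the same issue within a window.

    Assumes reports arrive with non-decreasing timestamps (in seconds).
    """

    def __init__(self, window_seconds=3600, threshold=3):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self._reports = defaultdict(deque)  # issue -> deque of (timestamp, customer_id)

    def report(self, issue, customer_id, timestamp):
        q = self._reports[issue]
        q.append((timestamp, customer_id))
        # Drop reports that have fallen out of the time window.
        while q and q[0][0] <= timestamp - self.window_seconds:
            q.popleft()
        distinct_customers = {cid for _, cid in q}
        return len(distinct_customers) >= self.threshold
```

Counting distinct customers rather than raw reports keeps one persistent user from triggering a false "widespread issue" alert.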

Business Impact

- Enhanced Customer Experience:

- Provided 24/7 empathetic and hyper-personalized support, leading to a significant rise in CSAT (Customer Satisfaction) scores and brand loyalty.

- Automated 30-50% of complex support tickets, reducing dependency on human agents and cutting operational costs.

- Reduced response and resolution times significantly, improving SLAs and enabling faster troubleshooting.

- Handled growing customer volumes without increasing headcount, making support operations future-ready.

- Early detection of widespread technical issues prevented high call volumes and improved brand trust.

Technology Stack

- Natural Language Processing (NLP): Advanced parsing for sentiment and intent detection.

- Machine Learning & Cognitive AI Models: For reasoning, continuous learning, and decision-making.

- Agentic AI Frameworks: For autonomous execution of workflows and diagnostics.

- Cloud Infrastructure: For scalable and secure AI deployment.

- Integration Layer: APIs for connecting customer support platforms, CRM systems, and IoT device diagnostics.

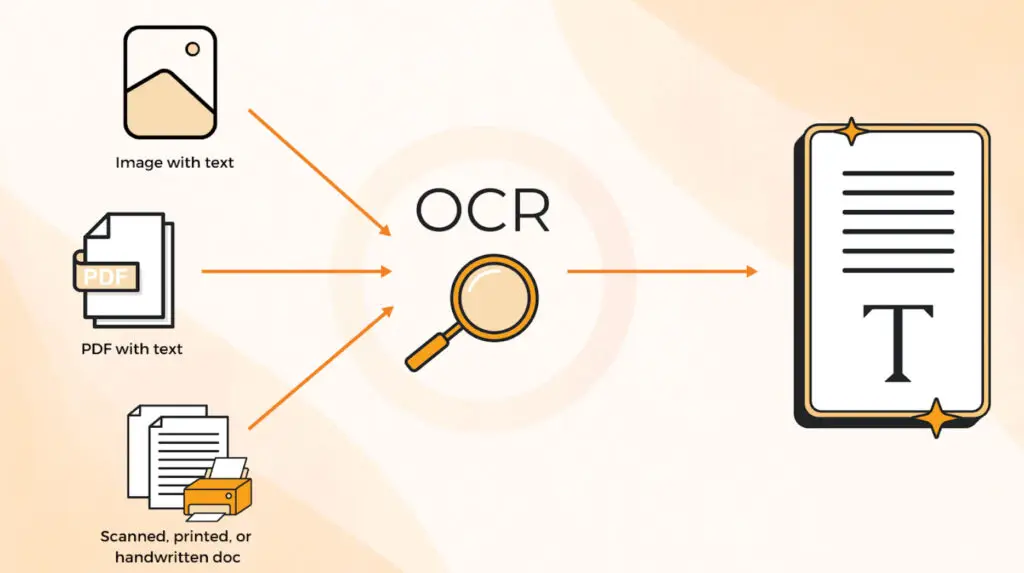

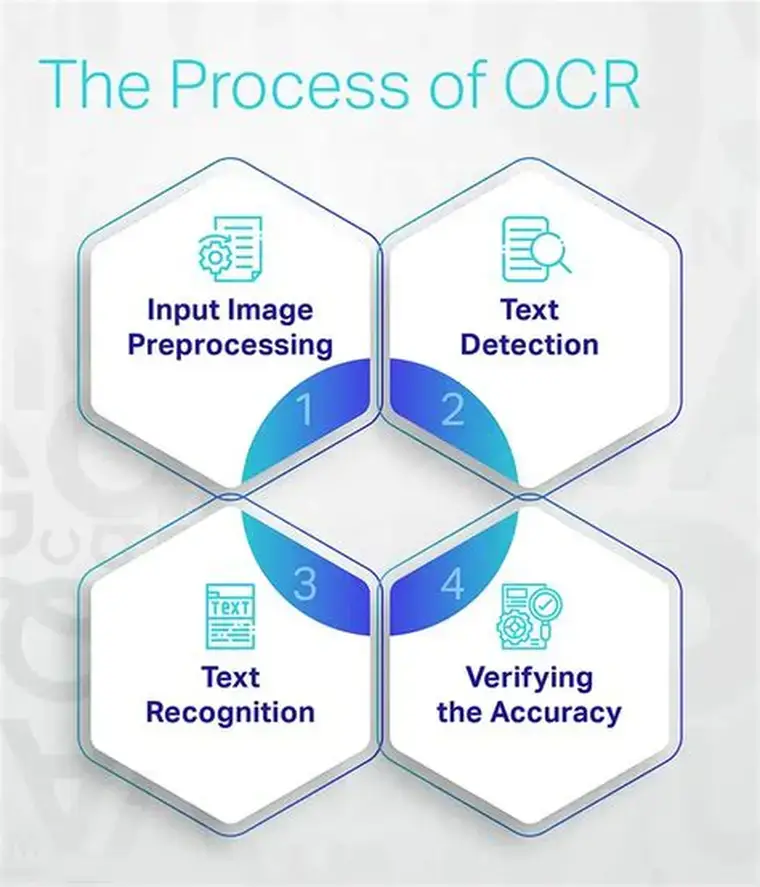

- Automating Invoice Processing with OCR

Industry

Challenges

- Document Variability: Invoices from hundreds of vendors had inconsistent layouts and terminology, making automation difficult.

- Low-Quality Scans & Images: Poor-quality scanned invoices required preprocessing to achieve high OCR accuracy.

- Handwriting Recognition: Occasional handwritten fields needed additional verification workflows.

- Integration Complexity: Synchronizing extracted data with multiple ERP and procurement platforms was challenging.

- Change Management: Finance teams needed training to adapt to the automated process.

Scope of the Project

- Standardize invoice processing for multiple vendors.

- Integrate automation with ERP and accounting systems.

- Reduce processing time, errors, and costs while improving cash flow visibility.

Business Impact

| KPI | Before OCR Implementation | After OCR Implementation | Improvement |
|---|---|---|---|
| Processing Time per Invoice | 5-15 mins | < 30 seconds | ~90% faster |
| Error Rate | 15-20% | < 2% | ~88% reduction |
| Straight-Through Processing | <10% | 60-80% | Significant |
| Cost per Invoice | High (manual labor) | 50-70% lower | Major savings |
| Annual Labor Hours Saved | N/A | ~2,500 hours (for 50,000 invoices/year) | Operational efficiency boost |
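The derived columns in the table can be sanity-checked with simple arithmetic. The Python sketch below is illustrative only: `percent_reduction` computes a relative reduction, and `annual_hours_saved` rests on assumptions that are ours, not the project's: only straight-through invoices save time, exceptions still take the manual lower bound of 5 minutes, and "< 30 seconds" is treated as 0.5 minutes.

```python
def percent_reduction(before, after):
    """Relative reduction from `before` to `after`, in percent."""
    return (before - after) * 100 / before

def annual_hours_saved(invoices_per_year, stp_rate, minutes_before, minutes_after):
    """Hours saved per year if only straight-through invoices get faster.

    Assumption: invoices routed to exception handling still take `minutes_before`.
    """
    automated_invoices = invoices_per_year * stp_rate
    return automated_invoices * (minutes_before - minutes_after) / 60

print(f"Processing time: {percent_reduction(5 * 60, 30):.0f}% faster")
print(f"Error rate (17.5% midpoint -> 2%): {percent_reduction(17.5, 2):.1f}% lower")
for stp in (0.6, 0.8):
    hours = annual_hours_saved(50_000, stp, minutes_before=5, minutes_after=0.5)
    print(f"STP {stp:.0%}: ~{hours:,.0f} hours saved")
```

Under these assumptions, 5 minutes falling to 30 seconds is a 90% reduction, the 17.5% midpoint error rate falling to 2% is about an 88.6% reduction, and 50,000 invoices at 60–80% straight-through processing save roughly 2,250 to 3,000 hours a year, a range that brackets the table's ~2,500-hour figure.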

- Faster Payment Cycles led to early payment discounts and improved supplier relationships.

- Cash Flow Predictability improved due to real-time invoice visibility.

- Finance Teams could focus on strategic work rather than data entry.

Technology Environment

| Technology | Purpose |
|---|---|
| OCR Engines (Tesseract, Roboflow OCR API) | Core text recognition |
| AI Models (LayoutLMv3, GPT-4o) | Contextual understanding, multimodal field detection |
| Computer Vision Object Detection | Field and table localization |
| ERP/Accounting Systems (SAP, Oracle, QuickBooks, etc.) | Data integration |
| APIs & Middleware | Automated workflows and validation |
| Preprocessing Tools (OpenCV) | Image enhancement and quality improvement |
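Reaching straight-through processing rates like those in the KPI table generally depends on validating extracted fields automatically before posting and routing anything doubtful (such as handwritten or poorly scanned fields) to a person. The Python sketch below shows one possible rule set under stated assumptions: the OCR/extraction step (for example, Tesseract plus a layout model) is assumed to already return normalized string values with a 0–1 confidence score per field; the required-field list and the 0.90 threshold are hypothetical; and tax is assumed to be included among the line items. It does not call any OCR library itself, and the sample values are made up.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal, InvalidOperation

@dataclass
class ExtractedField:
    value: str          # normalized text, e.g. "2024-03-15" or "1250.00" (assumed)
    confidence: float   # 0.0-1.0 score from the OCR/extraction model (assumed)

# Hypothetical minimum field set for straight-through posting.
REQUIRED_FIELDS = ("vendor", "invoice_number", "invoice_date", "total")

def route_invoice(fields, line_items, min_confidence=0.90):
    """Return ("auto_post", []) or ("manual_review", [reasons...])."""
    reasons = []
    for name in REQUIRED_FIELDS:
        f = fields.get(name)
        if f is None or not f.value.strip():
            reasons.append(f"missing {name}")
        elif f.confidence < min_confidence:
            # Typical for handwritten or poorly scanned fields.
            reasons.append(f"low confidence on {name} ({f.confidence:.2f})")

    date_field = fields.get("invoice_date")
    if date_field and date_field.value.strip():
        try:
            date.fromisoformat(date_field.value.strip())
        except ValueError:
            reasons.append("invoice_date is not an ISO date")

    total_field = fields.get("total")
    if total_field and total_field.value.strip():
        try:
            total = Decimal(total_field.value.strip())
            items_sum = sum((Decimal(x) for x in line_items), Decimal("0"))
            if items_sum != total:
                reasons.append("line items do not sum to total")
        except InvalidOperation:
            reasons.append("non-numeric amount")

    return ("manual_review", reasons) if reasons else ("auto_post", [])

sample = {
    "vendor": ExtractedField("Acme Corp", 0.98),
    "invoice_number": ExtractedField("INV-1042", 0.97),
    "invoice_date": ExtractedField("2024-03-15", 0.95),
    "total": ExtractedField("1250.00", 0.99),
}
print(route_invoice(sample, ["1000.00", "250.00"]))
```

Invoices that pass every check can be posted to the ERP automatically; for the rest, the returned reasons give reviewers a starting point. `Decimal` is used instead of `float` so that amounts such as 1000.00 + 250.00 compare exactly.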

Why Choose BIITS

B-Informative IT Services Pvt. Ltd. (BIITS) is an award-winning Business Intelligence, Digital, and Consulting company based in Bangalore, India's Silicon Valley. We are a team of motivated professionals with expertise across multiple domains and industries. We help our clients derive simplified, conclusive data insights for effective decision-making.